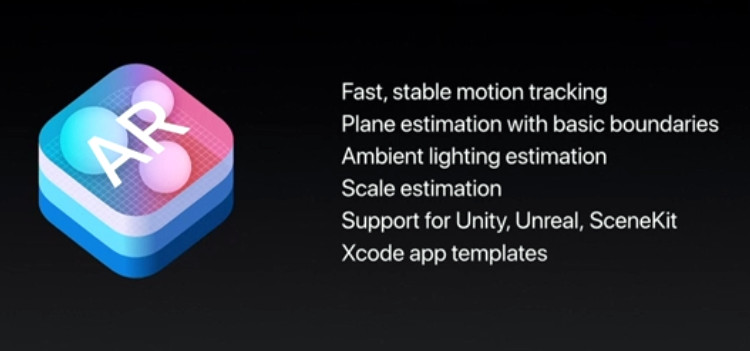

At WWDC 2017 yesterday, Apple introduced ARKit as part of iOS 11. The new framework lets developers easily create augmented reality experiences for iPhone and iPad apps. Apple said that ARKit takes apps beyond the screen, freeing them to interact with the real world in entirely new ways. The framework offers fast, stable motion tracking; plane estimation with basic boundaries; ambient lighting estimation; scale estimation; and Xcode app templates.

Highlights of ARKit

- ARKit uses Visual Inertial Odometry (VIO) to accurately track the world around it. VIO fuses camera sensor data with CoreMotion data. These two inputs allow the device to sense how it moves within a room with a high degree of accuracy, and without any additional calibration.

- With ARKit, iPhone and iPad can analyze the scene presented by the camera view and find horizontal planes in the room, such as tables and floors. ARKit can also track and place objects on smaller feature points.

- ARKit also makes use of the camera sensor to estimate the total amount of light available in a scene and applies the correct amount of lighting to virtual objects.
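The three highlights above (world tracking, horizontal plane detection, and light estimation) could be wired together roughly as follows. This is a minimal sketch, not code from Apple's announcement: `ARDemoViewController` and the 10 cm box are hypothetical, and the ARKit class names are those of the released iOS 11 SDK, so names in the early betas may differ.

```swift
import UIKit
import SceneKit
import ARKit

// Hypothetical view controller, used here only for illustration.
final class ARDemoViewController: UIViewController, ARSCNViewDelegate {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        sceneView.delegate = self
        // Let SceneKit light virtual objects from ARKit's ambient light estimate.
        sceneView.automaticallyUpdatesLighting = true
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking needs supported hardware; do nothing otherwise.
        guard ARWorldTrackingConfiguration.isSupported else { return }

        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = .horizontal      // tables, floors
        configuration.isLightEstimationEnabled = true   // ambient light estimate
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    // Called when ARKit adds an anchor; plane anchors represent detected surfaces.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let plane = anchor as? ARPlaneAnchor else { return }

        // Place a 10 cm box on the detected plane (sizes are in meters).
        let box = SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0)
        let boxNode = SCNNode(geometry: box)
        // plane.center is in the anchor's local space; lift the box by half its height.
        boxNode.position = SCNVector3(plane.center.x, 0.05, plane.center.z)
        node.addChildNode(boxNode)
    }

    // Reads the current ambient light estimate, if one is available yet.
    func currentAmbientIntensity() -> CGFloat? {
        return sceneView.session.currentFrame?.lightEstimate?.ambientIntensity
    }
}
```

The sketch only runs on a device with a supported processor, and the app needs a camera usage description (`NSCameraUsageDescription`) in its Info.plist before the session can start.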

ARKit runs on the Apple A9 and A10 processors. You can take advantage of the optimizations for ARKit in Metal, SceneKit, and third-party tools like Unity and Unreal Engine.

Peter Jackson’s Wingnut AR studio showed off a game at WWDC 2017 that uses Apple’s ARKit and Unreal Engine 4 to deliver a brilliant AR experience.

Developers can download the iOS 11 beta and the latest beta of Xcode 9 from Apple’s developer website. The Xcode 9 beta includes the iOS 11 SDK needed to build AR features into apps.