The Pixel 2 has one of the best cameras on a smartphone, and Google relies on AI to make its images look so good. In a blog post today, the company announced that it is open-sourcing a portion of this AI: a piece of software that underpins the Pixel’s portrait mode.

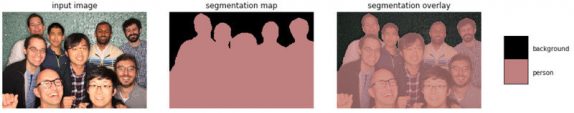

Google said that it is open-sourcing its semantic image segmentation model, DeepLab-v3+, which is built using convolutional neural networks, a machine learning method that excels at analyzing visual data. Image segmentation analyzes the objects within a picture and splits them apart, dividing foreground elements from background elements, which makes it possible to create a portrait with a depth effect.
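To illustrate how a foreground/background split can turn into a depth effect, here is a minimal sketch in Python with NumPy. It is not DeepLab-v3+ and not Google's portrait pipeline: it assumes a segmentation mask is already available (in practice, a model would produce it) and uses a simple box blur as a stand-in for a real lens blur. The function names `box_blur` and `portrait_effect` are hypothetical, chosen only for this example.

```python
import numpy as np


def box_blur(image: np.ndarray, radius: int) -> np.ndarray:
    """Blur an H x W x C image with a (2*radius+1) x (2*radius+1) box filter."""
    image = image.astype(np.float64)
    if radius <= 0:
        return image.copy()
    k = 2 * radius + 1
    # Repeat edge pixels so the output keeps the input's height and width.
    padded = np.pad(image, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    h, w = image.shape[:2]
    out = np.zeros_like(image)
    # Sum every shifted copy of the image inside the window, then average.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def portrait_effect(image: np.ndarray, mask: np.ndarray, radius: int = 2) -> np.ndarray:
    """Keep foreground pixels sharp and replace the background with a blurred copy.

    `mask` is an H x W array in which 1 (or True) marks foreground pixels.
    It is assumed to come from a segmentation model; here it is just an input.
    """
    image = image.astype(np.float64)
    blurred = box_blur(image, radius)
    alpha = mask.astype(np.float64)[..., None]  # H x W x 1, broadcasts over channels
    return alpha * image + (1.0 - alpha) * blurred


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    photo = rng.random((8, 8, 3))            # stand-in for a real photo
    subject = np.zeros((8, 8), dtype=bool)   # hand-made mask for illustration
    subject[2:6, 2:6] = True
    result = portrait_effect(photo, subject, radius=1)
    print(result.shape)
```

A production pipeline would likely soften the mask edges and vary the blur strength, but the compositing step follows the same idea: the mask decides, pixel by pixel, whether the sharp or the blurred image is shown.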

Google uses this algorithm to power portrait mode images on the Pixel. Portrait mode shots are becoming popular even among budget and mid-range phones, especially since Apple introduced the feature in 2016 on the iPhone 7 Plus; it was later brought to the Pixel 2 series. Google, however, uses only a single camera sensor to achieve portrait shots.

By opening up the technology behind Google’s stunning single-camera portraits, the company is giving developers a chance to implement image segmentation in their own products.