According to research, more than 253 million people in the world are blind or visually impaired. To make the world more accessible to them, Google is developing a new app called Lookout. The app aims to help visually impaired people become more independent by giving auditory cues as they encounter objects, text, and people around them.

For this to work, Google recommends wearing a Pixel device on a lanyard around your neck, or in your shirt pocket, with the camera pointing away from your body. Open the Lookout app and select a mode; the app then processes the items of importance in your environment and shares the information it believes to be relevant.

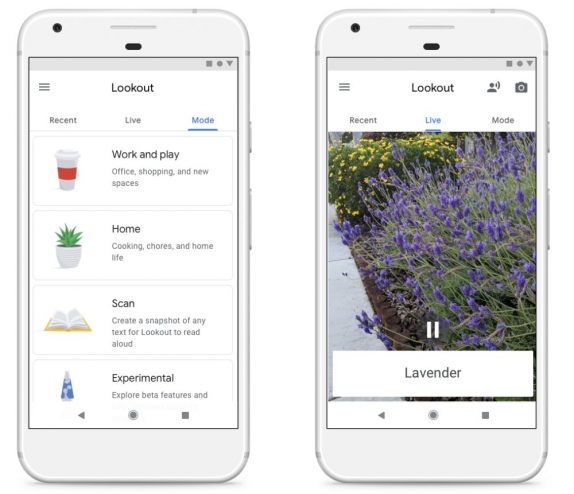

This can be text from a recipe book, an exit sign, a chair, or a person nearby. Lookout delivers spoken notifications and is designed to be used with minimal interaction, allowing people to stay engaged with their activity. There are four modes to choose from within the app: Home, Work & Play, Scan, and Experimental.

Once you select a mode, Lookout delivers information that’s relevant to the selected activity. It also gives you an idea of where those objects are in relation to you. If you select “Work & Play” mode when heading into the office, for example, it will notify you when you are next to an elevator or a stairwell. Like most Google products, Lookout will also use machine learning to learn what people are interested in hearing about and will deliver those results more often.

All of the core processing is done on the phone, so you can use the app without an internet connection as well.