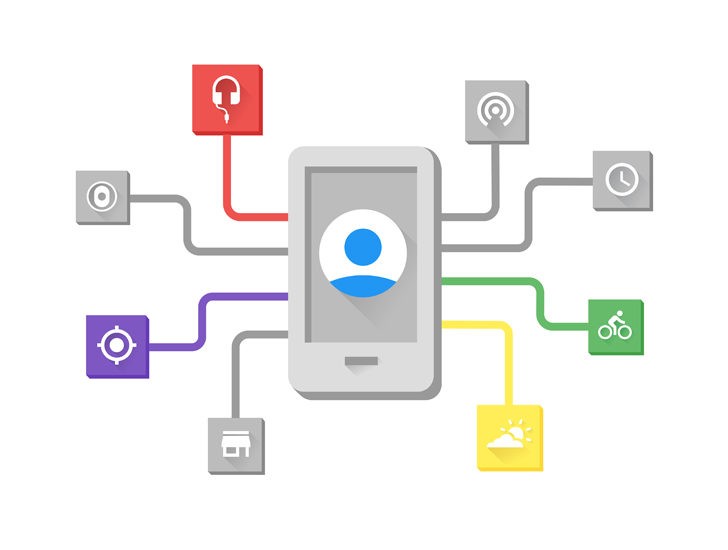

Google has launched the Awareness API, which lets developers build apps that are aware of their surroundings. Announced at the I/O conference, the new framework gives apps a basic understanding of the phone's actual surroundings.

The API can work with seven different signals at once (time, location, places, beacons, headphones, activity, and weather) to deliver contextually aware information. Google says the API processes signals from these sources intelligently, for both accuracy and efficiency. Because it conserves system resources, devices should see better battery life. The API also lets developers create custom geofenced locations.

Google said in a blog post:

> You can combine these context signals to make inferences about the user's current situation, and use this information to provide customized experiences (for example, suggesting a playlist when the user plugs in headphones and starts jogging).

The Awareness API actually consists of two separate interfaces: the Fence API and the Snapshot API. The Fence API lets your app react to the user's current situation, notifying it when a combination of context conditions is met, while the Snapshot API lets your app request information about the user's current context, such as the current location or weather. Developers can sign up now to get an early access preview of the Awareness API. Google didn't say when the API will be made more widely available.
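To illustrate the difference between reacting to a change and asking for the current state, here is a minimal conceptual sketch in Python. It does not use the real Awareness API (an Android library), and every name below (`Context`, `ContextSource`, `all_of`, `add_fence`, and so on) is hypothetical. A "fence" is modeled as a predicate over two of the signals mentioned above, combined with a logical AND, and its callback fires only when the combined condition changes from false to true. This mirrors the headphones-plus-jogging example from Google's post. The `snapshot()` method stands in for on-demand queries of the current context.

```python
from dataclasses import dataclass
from typing import Callable, Dict


# Hypothetical model of two of the context signals the article lists.
@dataclass(frozen=True)
class Context:
    headphones_plugged_in: bool
    activity: str  # e.g. "still", "walking", "running"


# A fence is modeled here as a predicate over the current context.
Fence = Callable[[Context], bool]


def headphones_plugged_in() -> Fence:
    return lambda ctx: ctx.headphones_plugged_in


def activity_is(name: str) -> Fence:
    return lambda ctx: ctx.activity == name


def all_of(*fences: Fence) -> Fence:
    # Combined fence: true only when every sub-fence is true.
    return lambda ctx: all(f(ctx) for f in fences)


class ContextSource:
    """Hypothetical context provider supporting both access styles."""

    def __init__(self, initial: Context) -> None:
        self._current = initial
        self._fences: Dict[str, Fence] = {}
        self._callbacks: Dict[str, Callable[[], None]] = {}
        self._last_state: Dict[str, bool] = {}

    def snapshot(self) -> Context:
        # Snapshot-style access: the app asks for the current state on demand.
        return self._current

    def add_fence(self, key: str, fence: Fence, callback: Callable[[], None]) -> None:
        # Fence-style access: register a condition plus a callback.
        self._fences[key] = fence
        self._callbacks[key] = callback
        # Record the starting state so an already-true fence does not fire at once.
        self._last_state[key] = fence(self._current)

    def update(self, ctx: Context) -> None:
        # Called when a signal changes; fires callbacks on false -> true transitions.
        self._current = ctx
        for key, fence in self._fences.items():
            now_true = fence(ctx)
            if now_true and not self._last_state[key]:
                self._callbacks[key]()
            self._last_state[key] = now_true


# Usage: suggest a playlist when headphones are plugged in and the user is running.
events = []
source = ContextSource(Context(headphones_plugged_in=False, activity="still"))
source.add_fence(
    "jog_playlist",
    all_of(headphones_plugged_in(), activity_is("running")),
    lambda: events.append("suggest playlist"),
)
source.update(Context(True, "still"))    # headphones only: no callback
source.update(Context(True, "running"))  # both conditions met: callback fires
source.update(Context(True, "running"))  # condition still met: no repeat
print(events)                      # ['suggest playlist']
print(source.snapshot().activity)  # running
```

In a real app, signal changes would come from the platform rather than from manual `update()` calls. The transition check is one simple way to avoid repeated notifications while a combined condition stays true.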