Samsung started mass production of the industry’s first 4-gigabyte (GB) DRAM package based on the second-generation High Bandwidth Memory (HBM2) interface in January last year. It later unveiled an 8GB HBM2 package, which doubles that capacity. Today the company announced that it is ramping up production of 8GB HBM2 to meet growing demand in artificial intelligence, HPC (high-performance computing), advanced graphics, network systems and enterprise servers.

Each HBM2 package delivers 256GB/s of data transmission bandwidth, an eight-fold increase over a 32GB/s GDDR5 DRAM chip. HBM2 is already used by both AMD and NVIDIA: AMD in its Vega products, and NVIDIA in the Tesla V100, the company’s first Volta-based GPU.
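The 256GB/s and 32GB/s figures both follow from the usual peak-bandwidth formula: bus width in bits times per-pin data rate, divided by 8 bits per byte. The interface widths and pin rates below are assumptions not stated in the article (a 1024-bit HBM2 stack at 2 Gb/s per pin, a 32-bit GDDR5 chip at 8 Gb/s per pin), chosen as common configurations that reproduce the quoted numbers.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * pin_rate_gbps / 8

# Assumed configurations (not from the article): 1024-bit HBM2 stack at 2 Gb/s per pin,
# 32-bit GDDR5 chip at 8 Gb/s per pin.
hbm2_stack = peak_bandwidth_gb_per_s(1024, 2.0)
gddr5_chip = peak_bandwidth_gb_per_s(32, 8.0)

print(hbm2_stack, gddr5_chip, hbm2_stack / gddr5_chip)  # 256.0 32.0 8.0
```

The ratio comes out to exactly 8: the HBM2 stack runs each pin far slower than GDDR5 but makes up for it with a bus 32 times wider.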

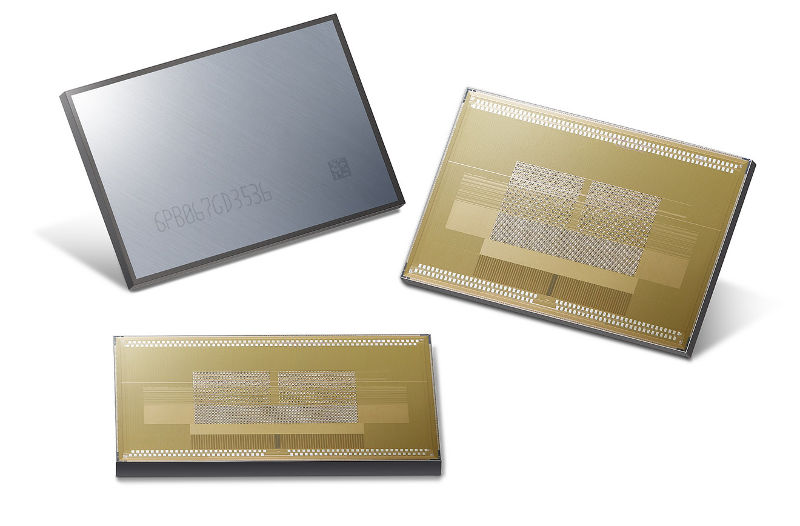

The 8GB HBM2 package consists of eight 8-gigabit (Gb) HBM2 dies and a buffer die at the bottom of the stack, all vertically interconnected by through-silicon vias (TSVs) and microbumps.
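The package capacity is simple arithmetic over the stacked dies. This sketch assumes, for illustration, that only the eight DRAM dies contribute storage and the buffer die contributes none; the constant names are hypothetical.

```python
DRAM_DIES_PER_STACK = 8  # eight 8Gb HBM2 dies; the buffer die is assumed to hold no data
GBIT_PER_DIE = 8         # each die stores 8 gigabits

total_gbit = DRAM_DIES_PER_STACK * GBIT_PER_DIE  # total capacity in gigabits
total_gbyte = total_gbit / 8                     # 8 bits per byte

print(total_gbit, total_gbyte)  # 64 8.0
```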

“With each die containing over 5,000 TSVs, a single Samsung 8GB HBM2 package has over 40,000 TSVs. The utilization of so many TSVs, including spares, ensures high performance, by enabling data paths to be switched to different TSVs when a delay in data transmission occurs,” said Samsung.
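Samsung does not describe how the switching works, so the following is only a hypothetical model of the idea in the quote: each logical data lane is carried by one physical TSV, a pool of spare TSVs is held in reserve, and when a lane reports a transmission delay its TSV is retired and the lane is rerouted to a spare. The class and method names are invented for illustration and do not reflect Samsung's actual circuitry.

```python
from dataclasses import dataclass, field


@dataclass
class TsvLaneMap:
    """Hypothetical model: logical data lanes mapped onto physical TSVs, plus a spare pool."""
    lane_to_tsv: dict[int, int]
    spares: list[int]
    retired: set[int] = field(default_factory=set)

    def report_delay(self, lane: int) -> int:
        """Retire the TSV currently carrying `lane` and reroute the lane to the next spare."""
        if not self.spares:
            raise RuntimeError("no spare TSVs left")
        self.retired.add(self.lane_to_tsv[lane])
        self.lane_to_tsv[lane] = self.spares.pop(0)
        return self.lane_to_tsv[lane]


# Four data lanes on TSVs 0-3, with TSVs 4 and 5 held in reserve.
m = TsvLaneMap(lane_to_tsv={0: 0, 1: 1, 2: 2, 3: 3}, spares=[4, 5])
print(m.report_delay(2))  # 4
print(m.lane_to_tsv)      # {0: 0, 1: 1, 2: 4, 3: 3}
```

The model shows why spares matter: every spare in the pool is one more delayed path the package can route around before a lane has nowhere left to go.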

With the increase in 8GB HBM2 production, Samsung expects 8GB HBM2 to account for more than 50% of its total HBM2 production by the first half of next year.