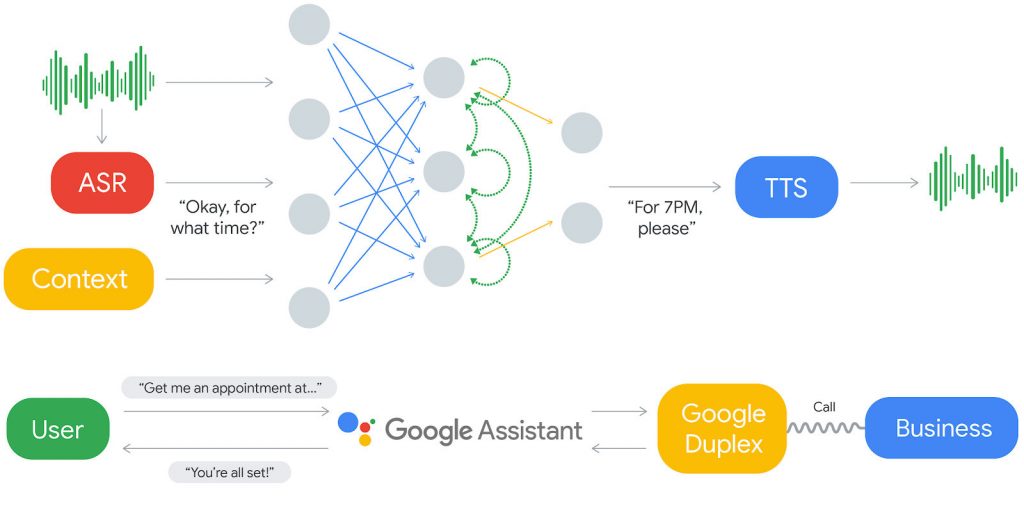

At its I/O 2018 event this week, Google introduced one of its most controversial Assistant features: Google Duplex, which imitates a human caller to make appointments. As questions arise about how ethical the feature is, Google has clarified in a statement that the experimental system will have “disclosure built-in.”

This means that whatever shape Duplex takes in the future, it will involve some kind of verbal announcement telling the person on the other end that they are in fact talking to an AI. Google Duplex is not a fully functional feature yet; Google only showcased a glimpse of it at the I/O event and said that it will start testing the feature in the coming days.

Google Assistant sounds much more lifelike thanks to Google’s use of DeepMind’s new WaveNet audio-generation technique and other advances in natural language processing. In the demo, the voice sounded convincingly human, using filler words like “uh” and “um.” However, Google says that it doesn’t yet know how the disclosure will sound and that further testing is needed to see how people react. Although Google did not say on stage during the demo that it intended to disclose, it did clarify this after the keynote.

Google said in a statement:

We understand and value the discussion around Google Duplex. As we’ve said from the beginning, transparency in the technology is important. We are designing this feature with disclosure built-in, and we’ll make sure the system is appropriately identified. What we showed at I/O was an early technology demo, and we look forward to incorporating feedback as we develop this into a product.