Facebook has published its Community Standards Enforcement Report for the first time. The report covers enforcement efforts from October 2017 to March 2018 and spans six areas: graphic violence, adult nudity and sexual activity, terrorist propaganda, hate speech, spam, and fake accounts.

The report also shows how much content people saw that violated the standards, how much content was removed, and how much was detected proactively by Facebook’s technology before anyone reported it. Facebook says that most of the action it takes to remove bad content concerns spam and the fake accounts used to distribute it.

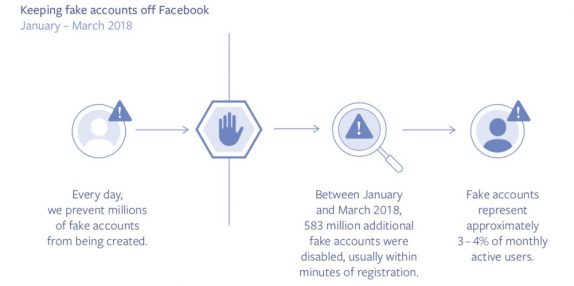

Facebook took down 837 million pieces of spam in Q1 2018, nearly 100% of which it found and flagged before anyone reported it. The key to fighting spam is taking down the fake accounts that spread it. Facebook disabled over 583 million fake accounts, most of them within minutes of registration. It estimates that around 3 to 4% of the active Facebook accounts on the site during this period were still fake.

It also took down 21 million pieces of adult nudity and sexual activity and 3.5 million pieces of violent content in Q1 2018. Its technology does not catch everything, however: of the 2.5 million pieces of hate speech it removed in Q1 2018, only 38% were flagged by its technology. By publishing these numbers, Facebook aims to increase transparency.