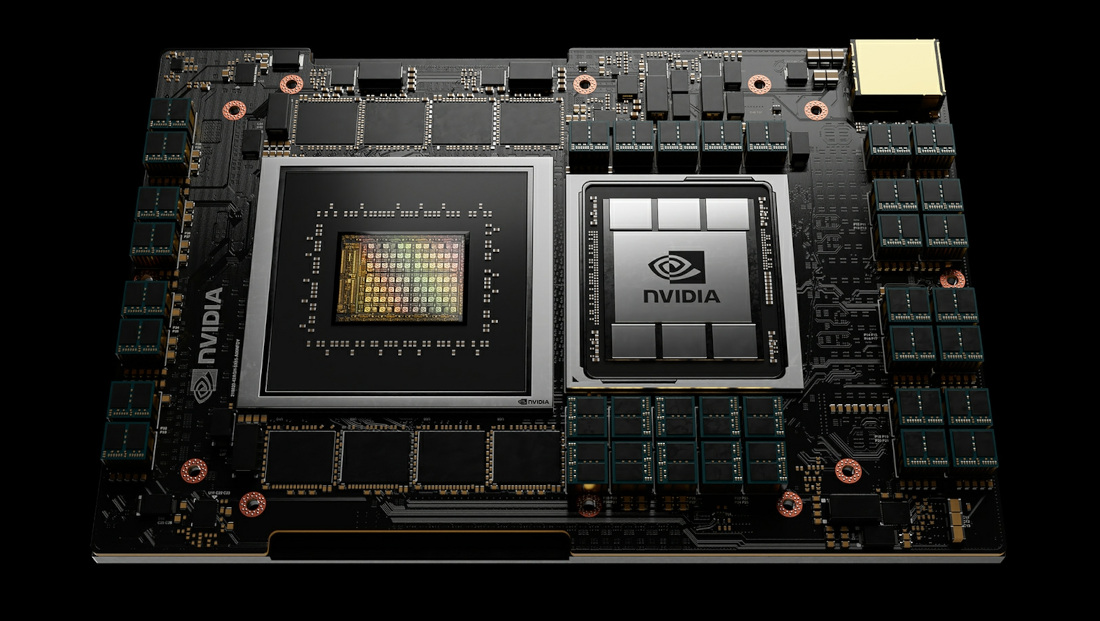

NVIDIA has unveiled its first data center-focused CPU, “Grace”, named after Grace Hopper, the American computer-programming pioneer. This Arm-based CPU/SoC is designed for large-scale neural network workloads and is expected to become available to customers sometime in 2023.

NVIDIA already leads the data center market in GPUs, and with the launch of these Arm-based Grace CPUs it is now pursuing greater vertical integration.

These new CPU designs will use a future iteration of Arm’s Neoverse cores that may be based on the Armv9 architecture. For the CPU-GPU and CPU-CPU interconnect, the Grace CPUs will support NVLink 4, enabling bandwidth of up to 900 GB/s. The system will also use an LPDDR5x memory subsystem with a supported bandwidth of 500 GB/s.
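To put those two bandwidth figures in perspective, here is a rough back-of-the-envelope sketch. It assumes the quoted numbers are peak rates in decimal gigabytes per second and ignores every real-world overhead, so sustained throughput would likely be lower. The 1,000 GB payload and the `transfer_seconds` helper are hypothetical, chosen purely for illustration.

```python
# Peak figures quoted in the article, in GB/s (assumed decimal gigabytes).
NVLINK_GB_PER_S = 900
LPDDR5X_GB_PER_S = 500


def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal time to move size_gb at a given peak bandwidth, with no overheads."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gb_per_s


# Hypothetical payload: 1,000 GB of model data (an assumption, not from NVIDIA).
payload_gb = 1000.0
for name, bandwidth in (("NVLink", NVLINK_GB_PER_S), ("LPDDR5x", LPDDR5X_GB_PER_S)):
    print(f"{name}: {transfer_seconds(payload_gb, bandwidth):.2f} s")
```

Under these idealized assumptions, the same payload would cross the NVLink interconnect in a little over a second and stream from LPDDR5x memory in about two.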

Some of the first customers to make use of NVIDIA’s Grace CPUs will be the Swiss National Supercomputing Centre (CSCS) and the U.S. Department of Energy’s Los Alamos National Laboratory.

Commenting on the launch, Jensen Huang, founder and CEO of NVIDIA, said:

“Leading-edge AI and data science are pushing today’s computer architecture beyond its limits – processing unthinkable amounts of data. Using licensed Arm IP, NVIDIA has designed Grace as a CPU specifically for giant-scale AI and HPC. Coupled with the GPU and DPU, Grace gives us the third foundational technology for computing, and the ability to re-architect the data center to advance AI. NVIDIA is now a three-chip company.”