Google aims to develop the world’s most inclusive camera, and that takes a lot of user feedback. In October, Google launched the Pixel 7 and Pixel 7 Pro, the latest step in its work to design an inclusive and accessible camera that works for everyone.

Google upgraded Real Tone and continues to work on accessibility with Guided Frame, which uses audio cues, high-contrast animations, and haptic (tactile) feedback to help blind and low-vision users capture selfies and group shots. In a recent blog post, Google described how it tested both Real Tone and Guided Frame:

How Google tested Real Tone

To improve Real Tone, Google said it worked with more people, including testers around the world. The team wanted to see how the Real Tone update performed globally, so it expanded its pool of testers.

The Real Tone team invited aesthetic experts to “break” the camera by taking photos in settings where the camera didn’t work well for people with dark skin tones. “We wanted to understand what was and wasn’t functioning and relay that to our engineering team,” says Florian Koenigsberger, Real Tone’s lead product manager.

Team members also asked to watch the specialists edit their images, which for photographers is a major request. To get the best input, the company shared prototype Pixel phones so early that they often crashed. In turn, Real Tone team members had access to the specialists’ special tools and methodologies, according to Pixel Camera’s main product manager.

After collecting this data, the team looked for “headline issues,” such as hair texture that didn’t look quite right or skin that picked up color from its surroundings. Then, with guidance from the experts, they decided what to work on in 2022.

Then came engineering and testing. “Every time you modify one part of our camera, you must ensure it doesn’t affect another,” explains Florian. Thanks to relationships with worldwide image specialists, Real Tone works better in Night Sight, Portrait Mode, and other low-light scenarios.

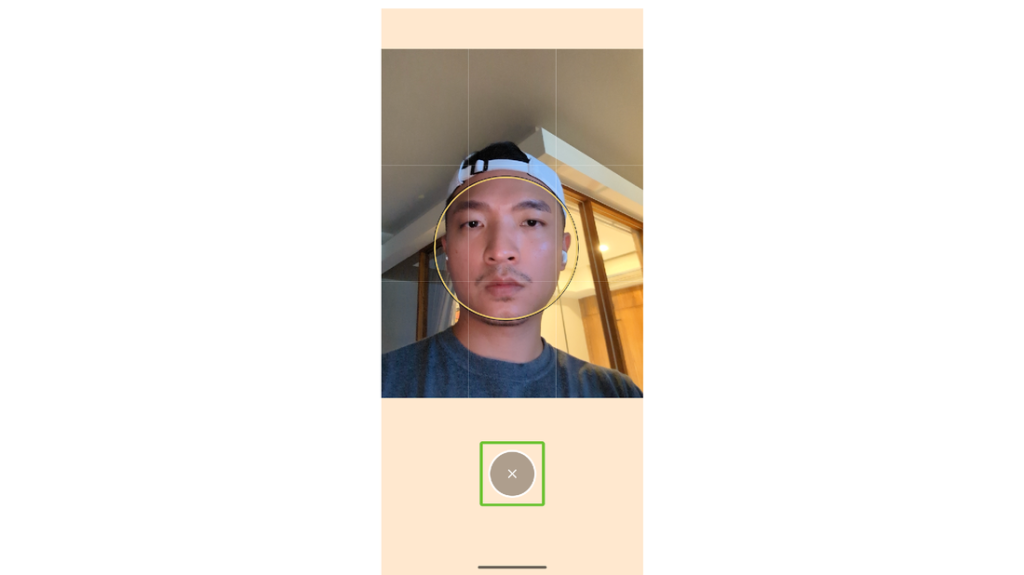

How Google tested Guided Frame

The idea for Guided Frame emerged after the Camera team invited Pixel’s Accessibility Department to an annual hackathon in 2021. Googler Lingeng Wang, a technical programme manager focused on product inclusion and accessibility, teamed up with colleagues Bingyang Xia and Paul Kim to come up with an innovative technique to make selfies easier for people who are blind or have low vision.

Google’s accessibility, engineering, haptics, and other departments collaborated to take Guided Frame beyond the hackathon. First, team members closed their eyes and took selfies. Kevin Fu, product manager for Guided Frame, said the test, though inadequate, showed sighted team members that “we’re underserving these users.”

All the testing for this feature focused on blind and low-vision users. The team also consulted Google’s Central Accessibility Team and a group of blind and low-vision Googlers.

After building the first version, the team asked blind and low-vision users to try it. Jabi Lue, a user experience designer, says volunteers tested the first prototype. “They were asked about audio and haptics because using a camera is a real-time experience.”

In response to the feedback, the team quickly tried numerous approaches. “Blind and low-vision testers helped us find and build the right combination of alternative sensory feedback,” adds Lingeng. The team then shared the final prototype with more volunteer Googlers.

This testing showed the team that the camera should automatically take a photo when the face is in the center of the frame. Blind and low-vision users liked not having to find the shutter button. Voice guidance also helped selfie-takers achieve the optimal composition.

Speaking about the Real Tone feature testing, Florian Koenigsberger, Real Tone Lead Product Manager, said:

“I feel like we’re starting to see the first meaningful pieces of progress of what we originally set out to do here. We want people to know their community had a major seat at the table to make this phone work for them.”

Victor Tsaran, a Material Design accessibility lead, was one of the blind and low-vision Googlers who tested Guided Frame. He remembers being impressed by the prototype even then, but also noticed that it got better over time as the team heard and addressed his feedback. “I was also happy that Google Camera was getting a cool accessibility feature of this quality,” he said.