Google has unveiled Gemma, a new series of open models designed to support developers and researchers in responsibly building AI systems.

The name Gemma comes from the Latin word for “precious stone.” The models are lightweight, inspired by Google’s Gemini models, and were developed by Google DeepMind and other teams across the company.

Key Features of Gemma

- Two Sizes: Gemma 2B and Gemma 7B come with pre-trained and instruction-tuned variants.

- Responsible AI Toolkit: Google offers tools supporting safer AI applications with Gemma.

- Framework Support: Inference and supervised fine-tuning (SFT) toolchains are provided for major frameworks like JAX, PyTorch, and TensorFlow.

- Easy Deployment: Gemma models can run on various platforms and integrate seamlessly with tools like Colab and Kaggle.

- Commercial Usage: Gemma’s terms of use permit responsible commercial usage and distribution for all organizations.
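For readers wondering what the instruction-tuned variants expect as input, here is a minimal sketch of the turn-based prompt layout. It assumes the `<start_of_turn>` and `<end_of_turn>` control tokens and the `user` and `model` role names described in Gemma's public documentation. The helper name `format_gemma_chat` is hypothetical and is not part of any Google library. The beginning-of-sequence token is left out on the assumption that a tokenizer adds it.

```python
# Turn delimiters assumed from Gemma's public prompt-format documentation.
START_OF_TURN = "<start_of_turn>"
END_OF_TURN = "<end_of_turn>"


def format_gemma_chat(turns):
    """Render (role, text) pairs as one prompt string.

    `turns` is a list of (role, text) tuples, where role is "user" or "model".
    The result ends with an open model turn so the model produces the reply.
    This helper is illustrative only (hypothetical name).
    """
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"{START_OF_TURN}{role}\n{text.strip()}{END_OF_TURN}\n")
    # Leave the final model turn open for generation.
    parts.append(f"{START_OF_TURN}model\n")
    return "".join(parts)


prompt = format_gemma_chat([("user", "Explain what an open model is.")])
print(prompt)
```

The pre-trained variants are plain text-completion models, so a layout like this would apply only to the instruction-tuned checkpoints.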

Performance and Responsible Design

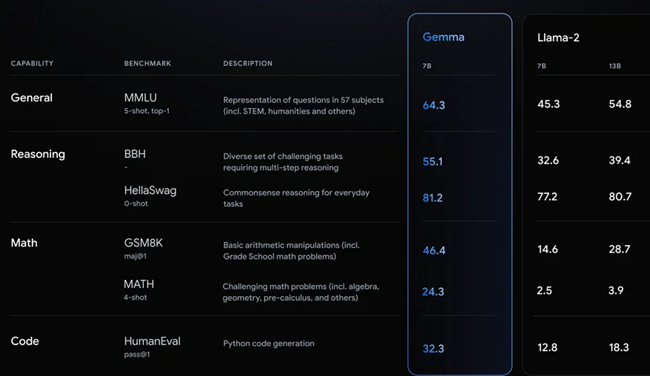

Despite their lightweight design, Gemma models are positioned as offering top-tier performance compared to larger models. According to Google, they can run directly on developer devices and surpass larger models on key benchmarks while adhering to the company’s standards for safe and responsible outputs.
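A back-of-the-envelope calculation helps show why models of this size can plausibly fit on a developer device. The sketch below estimates the memory needed for the weights alone at a few common precisions. It assumes the nominal parameter counts implied by the model names (2 billion and 7 billion). The actual counts may differ, and real usage also includes activations and caches. The function name `weight_memory_gib` is illustrative.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gib(num_params, dtype):
    """Approximate memory for model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


# Nominal sizes read off the model names (assumption; actual counts may differ).
NOMINAL_PARAMS = {"Gemma 2B": 2_000_000_000, "Gemma 7B": 7_000_000_000}

for name, num_params in NOMINAL_PARAMS.items():
    for dtype in BYTES_PER_PARAM:
        gib = weight_memory_gib(num_params, dtype)
        print(f"{name} @ {dtype}: ~{gib:.1f} GiB for weights")
```

Halving the bytes per parameter halves the weight footprint, which is why lower-precision formats matter for laptop and desktop use.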

Google emphasizes responsible AI principles in Gemma’s design. Automated techniques are employed to filter sensitive data from training sets, and extensive evaluations ensure safe and reliable behavior.

A Responsible Generative AI Toolkit is also provided to assist developers in prioritizing safety. The toolkit offers:

- Safety classification: A new approach by Google to construct strong safety classifiers with minimal examples.

- Debugging: A tool for examining Gemma’s behavior and resolving potential problems.

- Guidance: Access to best practices derived from Google’s experience in building and deploying large language models.

Optimization Across Frameworks and Hardware

Gemma can be fine-tuned on a user’s own data for specific applications, and it is compatible with multiple frameworks and devices. It is also optimized for Google Cloud deployment, offering flexibility and efficiency across different hardware infrastructures.

Free Credits for Research and Development

To encourage community engagement, Google offers free access to Gemma through platforms like Kaggle and Colab, along with credits for first-time Google Cloud users and researchers.

Collaboration with NVIDIA

NVIDIA has collaborated with Google to optimize Gemma for its AI platforms, enhancing performance and reducing costs for developers. The collaboration covers tools like TensorRT-LLM and deployment on NVIDIA GPUs.

Soon, Gemma will be supported in Chat with RTX, a tech demo by NVIDIA enabling generative AI capabilities on local, RTX-powered Windows PCs. This allows users to personalize chatbots with their data while ensuring privacy and fast processing.

Availability

Gemma is now available worldwide, with resources and quick start guides at ai.google.dev/gemma. Users can also try Gemma models directly through the NVIDIA AI Playground.

Speaking about the launch, Tris Warkentin, Director, Google DeepMind, said:

As we further develop the Gemma model family, we anticipate introducing additional variants tailored for various applications. Keep an eye out for upcoming events and opportunities in the coming weeks to engage, learn, and innovate with Gemma.