At Google I/O 2024, Google announced updates to its Gemini and Gemma models, new developer features for the Gemini API, and new capabilities for Gemini Advanced subscribers. Here’s a breakdown of what was announced:

Gemini 1.5 Pro and 1.5 Flash

Gemini 1.5 Pro received quality improvements across tasks such as translation and coding, with the goal of handling broader and more complex work.

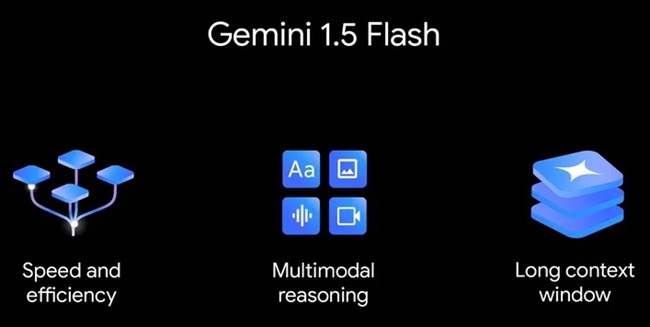

Gemini 1.5 Flash, meanwhile, is a new model optimized for tasks that need fast responses. Both models are natively multimodal, so users can combine text, images, audio, and video in their prompts.

The standard context window is 1 million tokens. A 2 million token window is available through a waitlist in Google AI Studio or, for Google Cloud customers, in Vertex AI.

Availability: Preview versions of both models are accessible today in over 200 countries and territories, with general availability slated for June.

New Developer Features

In response to user feedback, Google is introducing two new features to the Gemini API: video frame extraction and parallel function calling, enabling more efficient processing of tasks.
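
Parallel function calling means the model can request more than one function call in a single turn, and the client can run those calls side by side before replying. The sketch below illustrates only the client-side dispatch in plain Python. It does not use the Gemini SDK, and the `requested_calls` structure, the tool names, and the helper functions are all hypothetical stand-ins for whatever the API actually returns.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical local tools the model is allowed to call.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def get_time(city: str) -> str:
    return f"12:00 in {city}"

TOOLS = {"get_weather": get_weather, "get_time": get_time}

def run_calls_in_parallel(requested_calls):
    """Run every requested call concurrently; return results in request order."""
    with ThreadPoolExecutor() as pool:
        futures = [
            pool.submit(TOOLS[call["name"]], **call["args"])
            for call in requested_calls
        ]
        return [future.result() for future in futures]

# Assumed shape of one model turn that asks for two calls at once.
requested_calls = [
    {"name": "get_weather", "args": {"city": "Miami"}},
    {"name": "get_time", "args": {"city": "Miami"}},
]
results = run_calls_in_parallel(requested_calls)
print(results)
```

Collecting results in request order keeps it simple to match each answer to the call that produced it when the results are sent back to the model.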

In June, Google plans to implement context caching for Gemini 1.5 Pro, reducing the need to resend prompts and large files to the model repeatedly, thus improving efficiency and affordability.
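
To see why caching could help, consider how many input tokens are sent when asking several questions about the same large file. This is a back-of-the-envelope sketch with made-up numbers. It assumes the cached context is uploaded once and that later requests carry only the new question, and it ignores any storage or cached-token charges, which are not detailed here.

```python
def tokens_sent(context_tokens: int, question_tokens: int,
                n_questions: int, cached: bool) -> int:
    """Total input tokens transmitted over n_questions requests."""
    if cached:
        # The context goes up once; each request carries only the question.
        return context_tokens + question_tokens * n_questions
    # Without caching, the full context is resent with every question.
    return (context_tokens + question_tokens) * n_questions

# Illustrative numbers only (not from the announcement).
without_cache = tokens_sent(500_000, 200, 10, cached=False)
with_cache = tokens_sent(500_000, 200, 10, cached=True)
print(without_cache, with_cache)
```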

Pricing: While access to the Gemini API remains free in eligible regions through Google AI Studio, Google is expanding its pay-as-you-go service, increasing rate limits to accommodate more usage.

Additions to the Gemma Family

PaliGemma, Google’s first open vision-language model, debuts with optimizations for tasks like image captioning and visual Q&A. It joins the existing Gemma variants CodeGemma and RecurrentGemma.

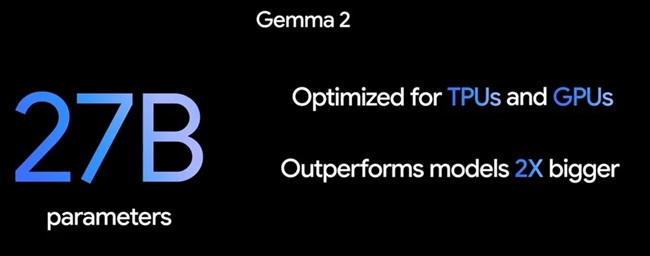

- Gemma 2, set to launch in June, represents the next generation of Gemma models, offering industry-leading performance in developer-friendly sizes.

- The 27B Gemma 2 model is designed to balance size and performance. Google says it can compete with larger models while still running efficiently on GPUs and TPUs.

Gemini API Developer Competition

Google introduced its first Gemini API Developer Competition, inviting developers to showcase their creativity. The submission deadline is August 12, and the grand prize is a custom electric DeLorean.

Updates to Gemini 1.5 Pro

At Google I/O 2024, Google introduced several updates to Gemini 1.5 Pro, including a longer context window, enhanced data analysis capabilities, and better integration with Google apps.

Analyze Documents with a Long Context Window

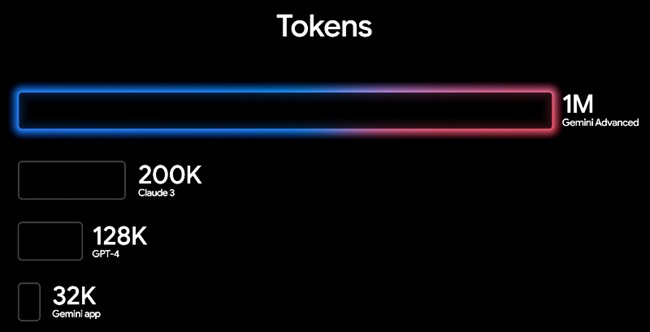

Gemini 1.5 Pro, part of Gemini Advanced, now supports a context window of 1 million tokens, which Google describes as the longest of any consumer chatbot.

This allows it to process documents of up to 1,500 pages or summarize 100 emails. It will soon be able to handle an hour of video or codebases with over 30,000 lines.
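
The figures above imply rough token budgets per page and per line of code. The arithmetic below is only a back-of-the-envelope reading of those numbers, not an official tokenizer ratio, and real counts vary with language and formatting. The `fits_in_window` helper is a hypothetical convenience, not part of any Google tool.

```python
CONTEXT_WINDOW = 1_000_000  # tokens, as stated above

# Ratios implied by "1,500 pages" and "over 30,000 lines" filling the window.
tokens_per_page = CONTEXT_WINDOW / 1_500
tokens_per_code_line = CONTEXT_WINDOW / 30_000  # upper bound, since "over" 30,000

def fits_in_window(pages: int) -> bool:
    """Rough check: would a document of this length fit in one prompt?"""
    return pages * tokens_per_page <= CONTEXT_WINDOW

print(round(tokens_per_page), round(tokens_per_code_line))
print(fits_in_window(1_200), fits_in_window(2_000))
```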

Users can upload files via Google Drive or directly to Gemini Advanced, making it easier to extract insights from dense documents like rental agreements or research papers.

Additionally, Gemini will soon analyze data files, creating custom visualizations from spreadsheets. All files remain private and are not used for model training.

Gemini 1.5 Pro also improves image understanding. Users can take photos of dishes to get recipes or snap math problems for step-by-step solutions.

Availability: Gemini 1.5 Pro will be available to Gemini Advanced subscribers in over 150 countries and in 35 languages.

Natural Conversations with Gemini Live

Gemini Live enhances interaction by allowing users to chat via text or voice. In Google Messages, users can chat with Gemini directly. With Gemini Live, users can choose from various natural-sounding voices, speak at their own pace, and interrupt for clarifications.

For example, users can prepare for job interviews by rehearsing with Gemini, which can suggest skills to highlight. Later this year, users will be able to use their camera during Live sessions for more interactive conversations.

Availability: Gemini Live will roll out to Gemini Advanced subscribers in the coming months.

Simplified Trip Planning

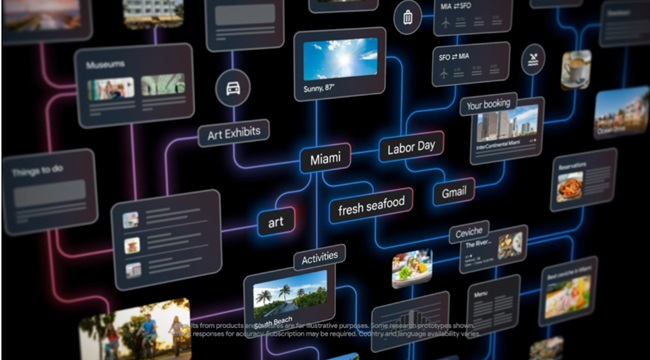

Gemini Advanced now offers a planning feature that creates custom itineraries. Users can ask Gemini to plan trips by pulling flight and hotel information from Gmail, considering meal preferences, and suggesting local activities.

For example, a prompt for a Miami trip could generate a schedule including flight details, restaurant recommendations, museum visits, and more. The itinerary updates automatically with any changes or additional details.

Availability: This planning feature will be available to Gemini Advanced subscribers soon.

Personalize Gemini with Gems

Gemini Advanced subscribers will soon be able to create Gems, customized versions of Gemini for specific tasks like being a gym buddy, cooking assistant, coding partner, or writing guide. Users describe the desired role and behavior, and Gemini creates the Gem accordingly.

Integration with More Google Apps

Gemini will connect with more Google tools, including Google Calendar, Tasks, and Keep. This allows users to automate tasks such as creating calendar entries from a photo of a school syllabus or turning a photo of a recipe into a shopping list.

Gemini Model Updates

- 1.5 Flash, optimized for speed and efficiency, was introduced. It’s lighter than 1.5 Pro but excels in tasks like summarization, chat applications, and data extraction from documents.

- Significant improvements were made to 1.5 Pro, enhancing its performance across various tasks such as code generation, logical reasoning, and audio understanding.

- Gemini Nano now understands multimodal inputs, including text, images, sound, and spoken language.

Gemma Family Updates

- Gemma 2: Google introduced Gemma 2, the next generation of open models designed for breakthrough performance and efficiency, available in new sizes.

- PaliGemma: The Gemma family expands with PaliGemma, its first vision-language model, which is inspired by PaLI-3.

- Responsible Generative AI Toolkit: Upgraded with LLM Comparator, a tool for evaluating the quality of model responses.

Progress with Project Astra

Google shared progress on Project Astra, aimed at developing universal AI agents for everyday assistance.

These agents are meant to understand and respond to the world as people do, and to be proactive, teachable, and personal. Google has been working on improving perception, reasoning, and conversation to enhance the quality and pace of interaction.