OpenAI has announced the availability of fine-tuning for GPT-4o, enabling developers to create customized versions of the model to enhance performance and precision in their applications.

Fine-Tuning with Custom Data

Developers can now fine-tune GPT-4o on their own datasets, tailoring the structure and tone of the model’s responses to their specific needs. According to the company, developers can see significant performance improvements with just a few dozen training examples.
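Training data for chat-model fine-tuning is typically supplied as a JSONL file, with one training example per line. The sketch below assumes OpenAI's chat fine-tuning format, in which each line is a JSON object holding a `messages` list of `system`, `user`, and `assistant` turns. The example content, the system prompt, the helper names, and the file name `train.jsonl` are all hypothetical, and a real dataset would contain many more lines.

```python
import json
from pathlib import Path

# Hypothetical examples that teach a terse, formal support tone.
# Assumption: OpenAI's chat fine-tuning format, one {"messages": [...]} object per line.
EXAMPLES = [
    ("How do I reset my password?",
     "Select 'Forgot password' on the sign-in page and follow the emailed link."),
    ("Can I change my username?",
     "Yes. Open Settings > Profile and edit the Username field."),
]

SYSTEM_PROMPT = "You are a concise, formal support assistant."


def build_record(question: str, answer: str) -> dict:
    """Return one chat-format training example (system, user, assistant)."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(path: Path, pairs) -> int:
    """Write one JSON object per line and return the number of records written."""
    with path.open("w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(build_record(question, answer)) + "\n")
    return len(pairs)


def validate_jsonl(path: Path) -> bool:
    """Check that every line parses as JSON and ends with an assistant turn."""
    with path.open(encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            roles = [m["role"] for m in record["messages"]]
            if not roles or roles[-1] != "assistant":
                return False
    return True


if __name__ == "__main__":
    out = Path("train.jsonl")
    count = write_jsonl(out, EXAMPLES)
    print(count, validate_jsonl(out))
```

Keeping the system prompt identical across examples is a design choice here: the examples then vary only in the user request and the desired reply, which is what the model is meant to learn.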

From coding to creative writing, fine-tuning can improve results across a wide range of domains. OpenAI plans to continue expanding customization options for developers.

Performance Achievements

In recent months, OpenAI worked with select partners to test GPT-4o fine-tuning. Two of them reported notable results:

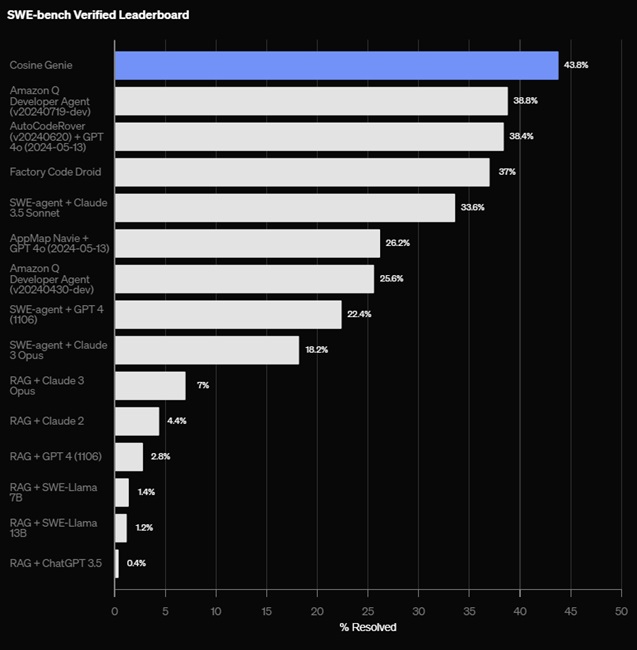

Cosine’s Success: Cosine’s AI assistant, Genie, employs a fine-tuned GPT-4o model to autonomously handle software engineering tasks, such as bug fixes and code refactoring.

Trained on real-world examples, Genie achieved a state-of-the-art score of 43.8% on the SWE-bench Verified benchmark. It also set a state-of-the-art score of 30.08% on SWE-bench Full, a large jump from the previous best of 19.27%.

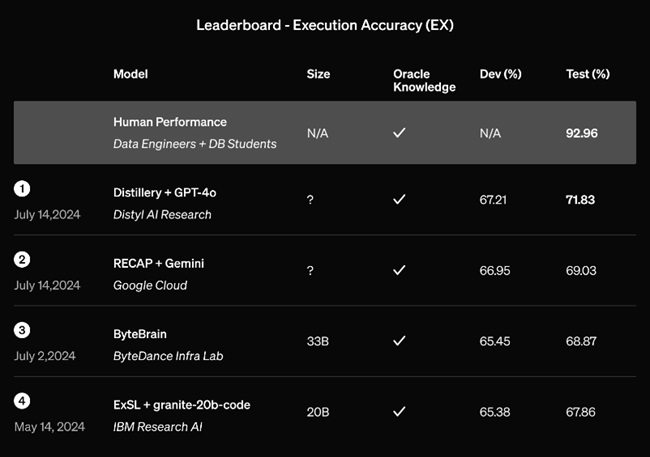

Distyl’s Achievement: Distyl, an AI partner for Fortune 500 companies, secured the top spot on the BIRD-SQL benchmark, a leading test for text-to-SQL conversion.

Distyl’s fine-tuned GPT-4o model reached an execution accuracy of 71.83%, excelling at tasks such as query reformulation, intent classification, and SQL generation.
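Execution accuracy counts a generated SQL query as correct when running it returns the same result as running a reference ("gold") query, even if the SQL text differs. The sketch below is a simplified illustration of that idea, not BIRD's official evaluation script: it assumes results are compared as unordered sets of rows, treats a query that fails to execute as incorrect, and uses an in-memory SQLite database with a hypothetical `orders` table and hypothetical queries.

```python
import sqlite3


def execution_match(conn: sqlite3.Connection, predicted: str, gold: str) -> bool:
    """Return True if both queries yield the same set of rows.

    Assumption: rows are compared as unordered sets (duplicates collapse);
    a predicted query that raises an error counts as incorrect.
    """
    try:
        predicted_rows = set(conn.execute(predicted).fetchall())
    except sqlite3.Error:
        return False
    gold_rows = set(conn.execute(gold).fetchall())
    return predicted_rows == gold_rows


def execution_accuracy(conn: sqlite3.Connection, pairs) -> float:
    """Fraction of (predicted, gold) query pairs whose results match."""
    if not pairs:
        return 0.0
    hits = sum(execution_match(conn, p, g) for p, g in pairs)
    return hits / len(pairs)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, region TEXT, total REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "EU", 120.0), (2, "US", 80.0), (3, "EU", 45.5)],
    )
    pairs = [
        # Different SQL text, same result set: counts as correct.
        ("SELECT id FROM orders WHERE region = 'EU'",
         "SELECT id FROM orders WHERE region IN ('EU') ORDER BY id"),
        # Wrong filter, different rows: counts as incorrect.
        ("SELECT id FROM orders WHERE total > 100",
         "SELECT id FROM orders WHERE total > 50"),
        # Nonexistent column, query fails: counts as incorrect.
        ("SELECT amount FROM orders", "SELECT total FROM orders"),
    ]
    print(execution_accuracy(conn, pairs))
```

Comparing executed results rather than SQL strings is what lets the first pair count as correct despite its different wording and ordering.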

Data Control and Safety

Fine-tuned models remain entirely under developers’ control, and developers retain full ownership of their business data. That data is never shared or used to train other models.

OpenAI has also implemented multiple safety measures to prevent misuse, including automated safety evaluations of fine-tuned models and usage monitoring to ensure applications comply with its usage policies.

Pricing and Availability

Developers can start fine-tuning GPT-4o by opening the fine-tuning dashboard and selecting “gpt-4o-2024-08-06” from the base model options.

- The cost for GPT-4o fine-tuning is $25 per million tokens for training, with inference costs of $3.75 per million input tokens and $15 per million output tokens.
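To make these rates concrete, the sketch below estimates a GPT-4o fine-tuning bill from the prices listed above. It assumes that billed training tokens equal the tokens in the training file multiplied by the number of epochs; the function names, dataset size, epoch count, and traffic volumes are hypothetical.

```python
# Prices quoted for GPT-4o fine-tuning, in USD per million tokens.
TRAINING_PER_M = 25.00
INPUT_PER_M = 3.75
OUTPUT_PER_M = 15.00


def training_cost(file_tokens: int, epochs: int) -> float:
    """Training cost, assuming billed tokens = file tokens x epochs."""
    return file_tokens * epochs * TRAINING_PER_M / 1_000_000


def inference_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost of running the fine-tuned model on the given token volumes."""
    return (input_tokens * INPUT_PER_M + output_tokens * OUTPUT_PER_M) / 1_000_000


if __name__ == "__main__":
    # Hypothetical: a 400,000-token training file trained for 3 epochs,
    # then 10M input tokens and 2M output tokens of traffic.
    print(f"training:  ${training_cost(400_000, 3):.2f}")
    print(f"inference: ${inference_cost(10_000_000, 2_000_000):.2f}")
```

With these hypothetical inputs, training bills 1.2 million tokens for $30.00, and the inference volume comes to $67.50 ($37.50 for input plus $30.00 for output).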

GPT-4o mini fine-tuning is available to all developers on paid usage tiers.

- By selecting “gpt-4o-mini-2024-07-18” from the base model options on the dashboard, developers can fine-tune with up to 2 million training tokens per day for free until September 23, 2024.
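A quick way to check whether a GPT-4o mini training job stays within the free daily allowance is to compare its billed training tokens with the 2 million-token limit. This sketch assumes billed training tokens equal the training file's tokens multiplied by the number of epochs; the helper names, the `used_today` parameter, and the example job sizes are hypothetical.

```python
# Free daily training allowance quoted for gpt-4o-mini-2024-07-18 (until September 23, 2024).
FREE_DAILY_TRAINING_TOKENS = 2_000_000


def billed_training_tokens(file_tokens: int, epochs: int) -> int:
    """Assumption: billed training tokens = tokens in the training file x epochs."""
    return file_tokens * epochs


def fits_free_allowance(file_tokens: int, epochs: int, used_today: int = 0) -> bool:
    """True if this job, plus tokens already used today, stays within the daily allowance."""
    return used_today + billed_training_tokens(file_tokens, epochs) <= FREE_DAILY_TRAINING_TOKENS


if __name__ == "__main__":
    # Hypothetical jobs: 500k tokens x 4 epochs = 2.0M; 500k tokens x 5 epochs = 2.5M.
    print(fits_free_allowance(500_000, 4))
    print(fits_free_allowance(500_000, 5))
```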