OpenAI on Thursday announced the launch of OpenAI o1, a new series of large language models designed to enhance reasoning capabilities through reinforcement learning.

The model, part of a preview release, focuses on improving complex reasoning by producing a “chain of thought” before responding. This approach aims to make the model more capable in areas such as science, math, and coding compared to previous models, according to the company.

OpenAI o1: How It Works

OpenAI’s latest model series, OpenAI o1, enhances complex problem-solving by using reinforcement learning to refine responses and correct errors.

In OpenAI's tests, o1 performs on par with human PhD students on challenging benchmark tasks in physics, chemistry, and biology, and it also performs strongly in mathematics and programming.

While it advances AI capabilities for reasoning tasks, it does not include features like web browsing or file and image uploads found in earlier models.

Key Performances of OpenAI o1

Math and Science Proficiency

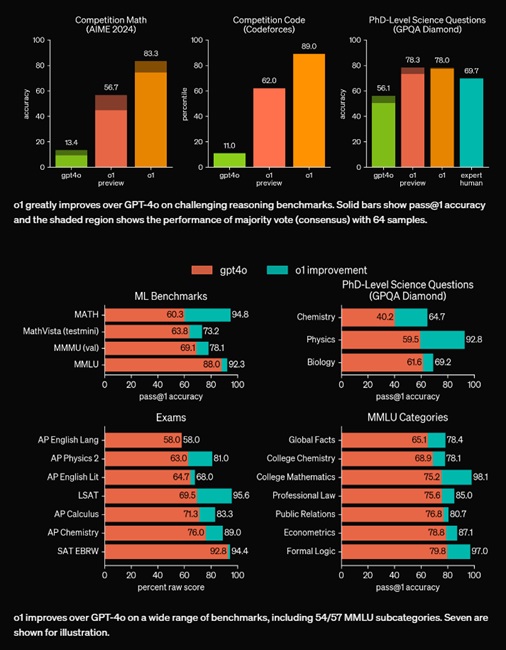

- Outperforms GPT-4o on most reasoning-heavy tasks.

- Scores 74% on the AIME math exam with a single sample per problem, 83% with consensus among 64 samples, and 93% when re-ranking 1,000 samples with a learned scoring function.

- Exceeds the accuracy of human PhD-level experts on the GPQA Diamond benchmark, which covers physics, chemistry, and biology.
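The three AIME figures above come from three different ways of turning sampled answers into one final answer. The sketch below illustrates the general idea with toy data. The sample answers, the `toy_scores` table, and the function names are all hypothetical, and the scorer is a plain lookup standing in for a learned model; it is not OpenAI's evaluation code.

```python
from collections import Counter
from typing import Callable, Sequence


def single_sample(answers: Sequence[str]) -> str:
    """Single-sample setting: take the first sampled answer as-is."""
    return answers[0]


def consensus(answers: Sequence[str]) -> str:
    """Consensus setting: majority vote over all sampled answers."""
    return Counter(answers).most_common(1)[0][0]


def rerank(answers: Sequence[str], score: Callable[[str], float]) -> str:
    """Re-ranking setting: keep the answer the scoring function rates highest."""
    return max(answers, key=score)


# Hypothetical samples for one problem; the first sample happens to be wrong.
samples = ["113", "204", "204", "97", "204"]

# Hypothetical stand-in for a learned scoring function.
toy_scores = {"204": 0.9, "113": 0.4, "97": 0.1}


def toy_scorer(answer: str) -> float:
    return toy_scores[answer]


print(single_sample(samples))        # 113
print(consensus(samples))            # 204
print(rerank(samples, toy_scorer))   # 204
```

In this toy case a single sample lands on an outlier, while voting and re-ranking both recover the more common answer, which is the intuition behind the higher multi-sample scores.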

Competitive Programming

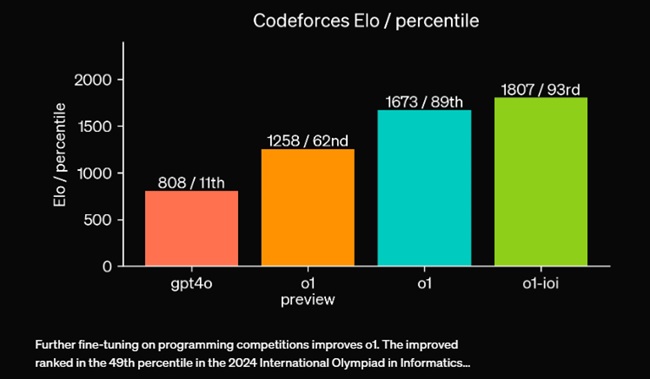

- A model initialized from o1 and further trained for programming scored 213 points at the 2024 International Olympiad in Informatics (IOI), ranking in the 49th percentile under the same conditions as human contestants.

- With the submission limit relaxed to 10,000 submissions per problem, the same model scored 362.14, above the gold medal threshold.

- In simulated Codeforces contests, that model reached an Elo rating of 1807, outperforming 93% of competitors.
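For readers unfamiliar with Elo ratings, the sketch below shows how a rating gap translates into an expected head-to-head score under the standard Elo formula. This is an illustration only: Codeforces uses its own Elo-like rating system, and the percentile figures in this article come from the distribution of competitor ratings, not from this formula. The function name is hypothetical.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B under the standard Elo formula."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


# Equal ratings give an even match.
print(round(elo_expected_score(1807, 1807), 2))  # 0.5

# A 400-point advantage corresponds to an expected score of 10/11.
print(round(elo_expected_score(1807, 1407), 2))  # 0.91

# The 1807 and 1673 ratings quoted in this article, compared head to head.
print(round(elo_expected_score(1807, 1673), 2))  # 0.68
```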

Reinforcement Learning and Chain of Thought

- Uses a “chain of thought” to break complex problems into simpler steps, which strengthens its reasoning.

- Improves consistently with more reinforcement learning (train-time compute) and with more time spent thinking before it answers (test-time compute).

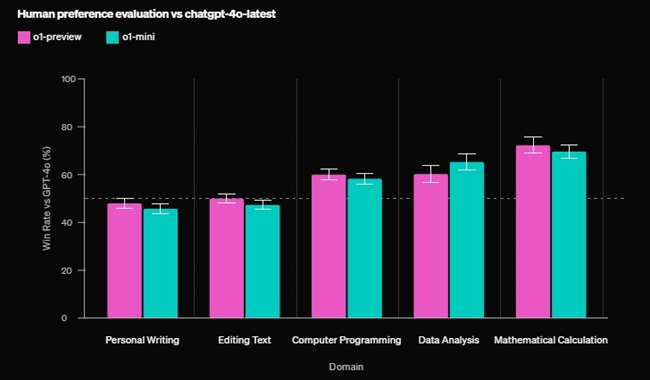

Human Preference Evaluation

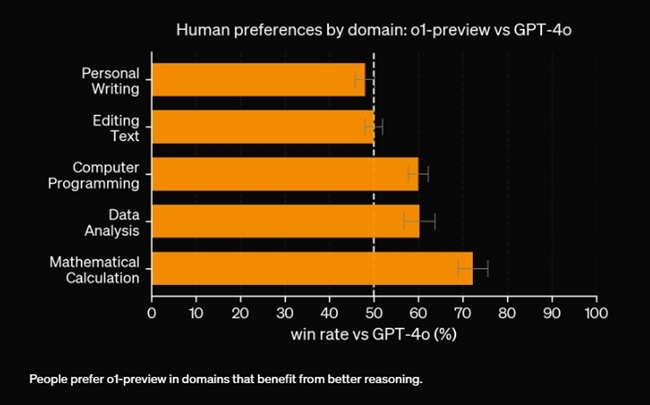

- Preferred over GPT-4o by human evaluators in reasoning-heavy tasks such as data analysis, coding, and math.

- Less preferred in some natural language tasks, indicating it may not be suitable for all applications.

OpenAI continues to refine the model to make it as user-friendly as previous versions while maintaining high performance in reasoning tasks.

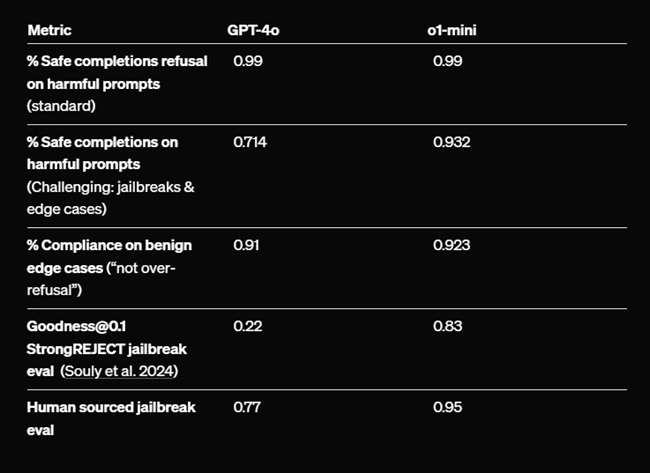

Safety

OpenAI says it has improved safety and alignment by embedding safety rules directly into the model’s chain-of-thought reasoning. On one of the company's hardest jailbreak tests, o1-preview scored 84 on a 0-100 scale, compared with 22 for GPT-4o.

This enhancement is supported by comprehensive testing, red-teaming, and collaboration with U.S. and U.K. AI Safety Institutes, with results detailed in the System Card.

Hidden Chain of Thought

OpenAI says the chain of thought gives it a way to monitor the model’s reasoning internally, but it has opted not to display the raw chain of thought to users. Instead, users see a model-generated summary. According to the company, this approach balances transparency against user experience and competitive considerations.

Target Users for OpenAI o1

The OpenAI o1 models are designed for complex problem-solving in areas such as science, coding, and math. Potential applications include annotating cell sequencing data, generating mathematical formulas for quantum optics, and building and executing multi-step workflows in software development.

OpenAI o1-mini

Alongside OpenAI o1, the company also unveiled o1-mini, a cost-efficient reasoning model designed to excel in STEM fields. While it closely matches the performance of OpenAI o1 on benchmarks such as AIME and Codeforces, o1-mini aims to offer a faster and more affordable option for applications requiring focused reasoning without extensive world knowledge.

Optimized for STEM Reasoning

Large language models like o1 are pre-trained on extensive text datasets, which gives them broad world knowledge but can make them costly and slow for specific applications.

In contrast, o1-mini is optimized for STEM reasoning, having undergone training with a high-compute reinforcement learning (RL) pipeline similar to o1. This optimization allows o1-mini to perform effectively on various reasoning tasks while being more cost-efficient.

Performance Highlights

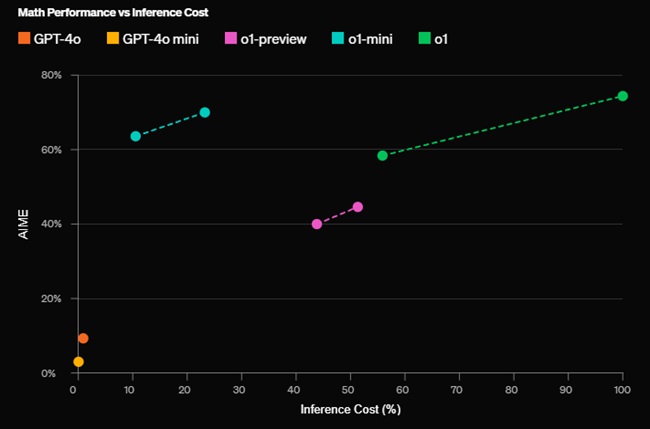

- Mathematics: o1-mini scored 70.0% on the AIME math competition, close to o1’s 74.4% and well ahead of o1-preview’s 44.6%. That score would place it among roughly the top 500 U.S. high school students.

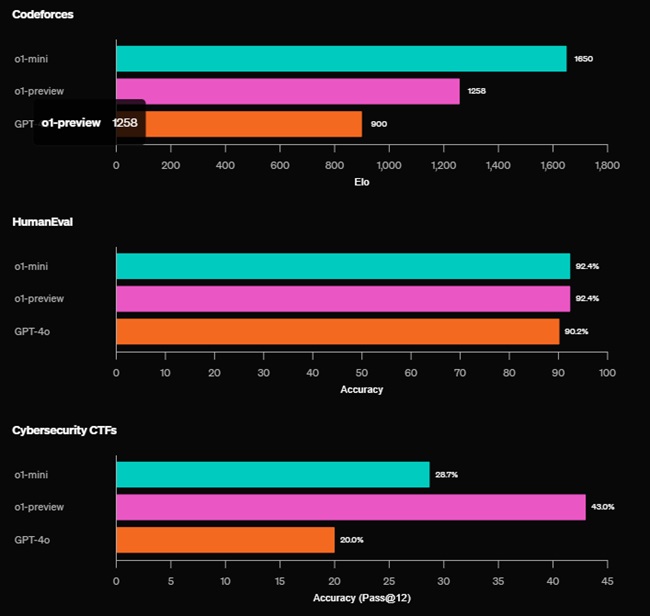

- Coding: On Codeforces, o1-mini achieved a 1650 Elo rating, comparable to o1’s 1673 and higher than o1-preview’s 1258, placing it in the 86th percentile. It also excels in HumanEval coding and high-school cybersecurity challenges.

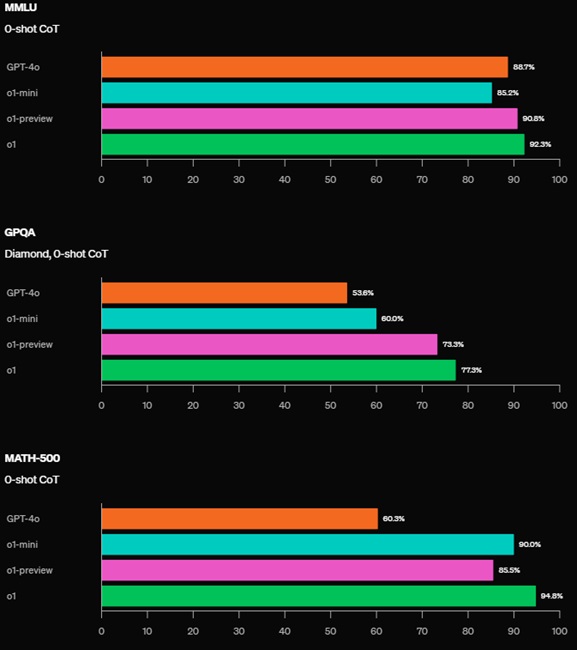

- STEM Benchmarks: o1-mini surpasses GPT-4o on some reasoning benchmarks, such as GPQA and MATH-500. It trails GPT-4o on MMLU and trails o1-preview on GPQA because of its narrower world knowledge.

Human Preference Evaluation

Human raters preferred o1-mini to GPT-4o in reasoning-heavy domains but favored GPT-4o in language-focused areas. On speed, OpenAI cites a word-reasoning example in which o1-mini reached the correct answer about 3-5 times faster than o1-preview, while GPT-4o did not answer correctly.

Safety

o1-mini uses the same alignment and safety methods as o1-preview, demonstrating 59% greater jailbreak resilience on the StrongREJECT dataset compared to GPT-4o.

OpenAI has assessed the safety of o1-mini with rigorous evaluations and will release detailed results in the system card.

Limitations and Future Developments

While o1-mini excels in STEM reasoning, its factual knowledge in non-STEM areas is limited compared to larger models like GPT-4o. OpenAI plans to address these limitations in future updates and explore extending the model to other domains.

Availability

- ChatGPT Plus and Team Users: Access both o1-preview and o1-mini models starting today, with initial weekly message limits of 30 for o1-preview and 50 for o1-mini.

- ChatGPT Enterprise and Edu Users: Access will be available next week.

- API Users (Tier 5): Can begin using both models today with a rate limit of 20 requests per minute (RPM). The API currently lacks some features, including function calling and streaming; OpenAI says it plans to add them.

- ChatGPT Free Users: OpenAI plans to provide access to o1-mini at a later date.

- o1-mini: Initially, o1-mini will be available to Tier 5 API users at a cost 80% lower than o1-preview. It will also be accessible to ChatGPT Plus, Team, Enterprise, and Edu users as a more cost-effective alternative to o1-preview, offering higher rate limits and reduced latency.
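Developers working under the 20 RPM API limit mentioned above may want to throttle requests on the client side rather than rely on server errors. The following is a minimal sketch of a sliding-window limiter, assuming a limit of 20 calls per 60 seconds. The class and method names are hypothetical, it does not call any OpenAI API, and the injectable `clock` exists only so the behavior can be shown without waiting.

```python
import time
from collections import deque
from typing import Callable, Deque


class SlidingWindowLimiter:
    """Client-side limiter allowing at most `max_calls` per `period` seconds."""

    def __init__(self, max_calls: int = 20, period: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_calls = max_calls
        self.period = period
        self.clock = clock
        self._stamps: Deque[float] = deque()

    def wait_time(self) -> float:
        """Seconds until the next request is allowed (0.0 if allowed now)."""
        now = self.clock()
        # Drop timestamps that have left the window.
        while self._stamps and now - self._stamps[0] >= self.period:
            self._stamps.popleft()
        if len(self._stamps) < self.max_calls:
            return 0.0
        return self.period - (now - self._stamps[0])

    def record(self) -> None:
        """Record that a request was just sent."""
        self._stamps.append(self.clock())

    def acquire(self) -> None:
        """Block until a request slot is free, then claim it."""
        delay = self.wait_time()
        if delay > 0:
            time.sleep(delay)
        self.record()


# Demonstration with a fake clock so nothing actually sleeps.
fake_now = [0.0]
limiter = SlidingWindowLimiter(clock=lambda: fake_now[0])
for _ in range(20):
    limiter.record()

print(limiter.wait_time())  # 60.0: a 21st request must wait a full minute
fake_now[0] = 45.0
print(limiter.wait_time())  # 15.0
fake_now[0] = 60.0
print(limiter.wait_time())  # 0.0
```

In real use, a caller would invoke `acquire()` with the default clock immediately before each API request.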

This early preview of the o1 models is available in ChatGPT and the API. Future updates will include browsing, file and image uploading, and further model developments.