Intel on Tuesday introduced its Xeon 6 processors with Performance-cores (P-cores) alongside its Gaudi 3 AI accelerators. The launch is part of Intel’s push to deliver AI systems with better performance per watt and a lower total cost of ownership (TCO).

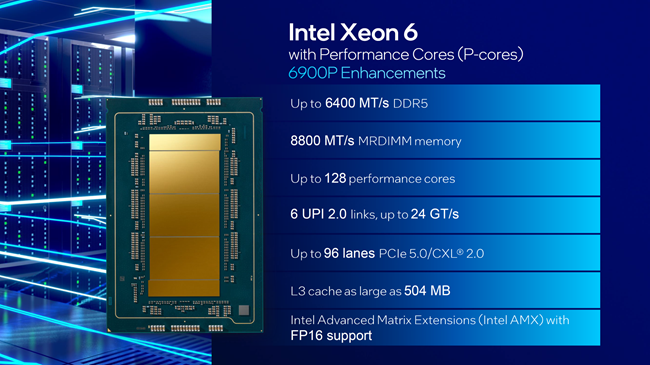

Xeon 6900P “Granite Rapids” Overview

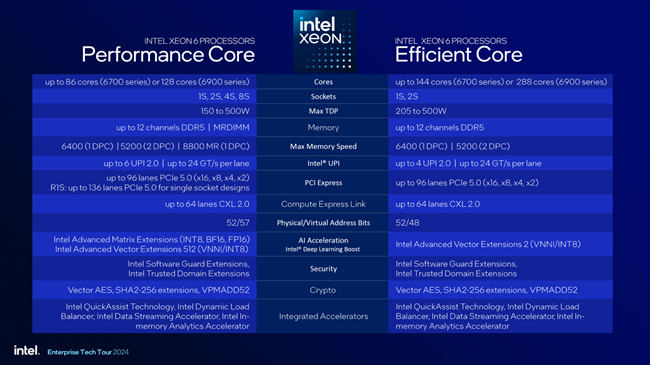

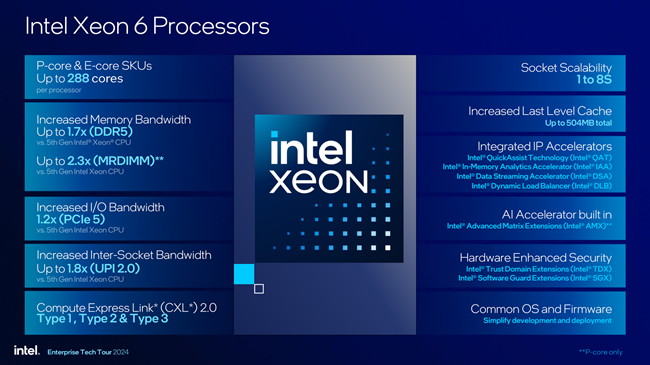

The Xeon 6900P “Granite Rapids” P-Core CPUs offer up to 128 cores and are positioned against AMD’s EPYC lineup. They follow the Xeon 6700E series released in June, which tops out at 144 cores, with 288-core parts planned for early 2025.

Key Features of Xeon 6900P:

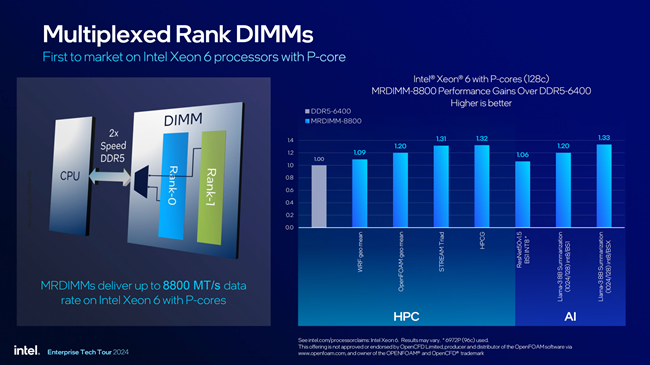

- Up to 6400 MT/s DDR5 support

- Up to 8800 MT/s MRDIMM support

- Up to 128 performance cores

- 6 UPI 2.0 links at up to 24 GT/s

- Up to 96 PCIe 5.0/CXL 2.0 lanes

- Up to 504 MB L3 Cache

- Intel Advanced Matrix Extensions (AMX) with FP16 support

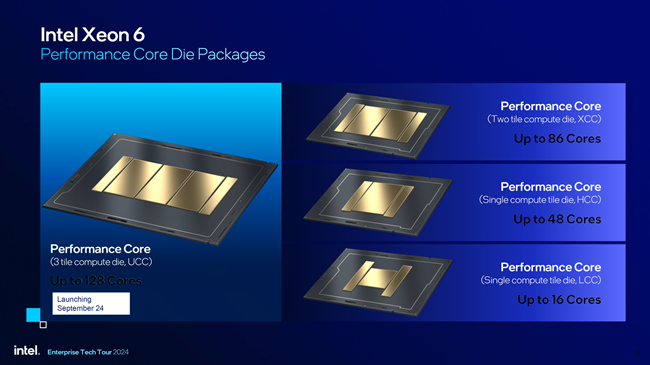

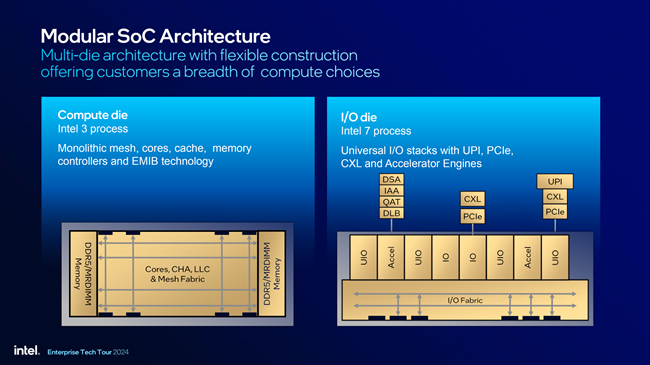

CPU Configuration

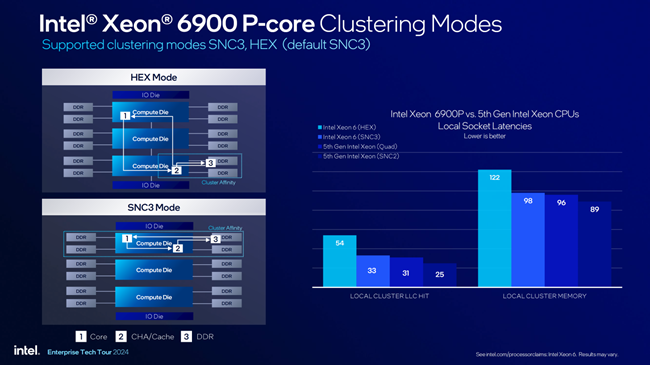

The Xeon 6900 series uses a chiplet design with up to five chiplets for the P-Core CPUs. The compute die is built on the “Intel 3” process node and contains the Redwood Cove P-Cores and the integrated memory controller (IMC). The I/O die, built on the “Intel 7” process node, houses the I/O controllers and accelerator engines.

Xeon 6 configurations include:

- 6900P (UCC SKU): 3 Compute Tiles + 2 IO Tiles = Up to 128 Cores

- 6700P (XCC SKU): 2 Compute Tiles + 2 IO Tiles = Up to 86 Cores

- 6500P (HCC SKU): 1 Compute Tile + 2 IO Tiles = Up to 48 Cores

- 6300P (LCC SKU): 1 Compute Tile + 2 IO Tiles = Up to 16 Cores

- 6900E (XCC SKU): 2 Compute Tiles + 2 IO Tiles = Up to 288 Cores

- 6700E (HCC SKU): 1 Compute Tile + 2 IO Tiles = Up to 144 Cores
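As a rough illustration, the tile counts listed above can be tabulated and the maximum core count spread across the compute tiles. The names `XEON6_CONFIGS`, `total_tiles` and `cores_per_compute_tile` are hypothetical, and the per-tile figures are simple averages of the "up to" numbers in the list, not core counts published by Intel.

```python
# Tile configurations as listed in the article:
# series -> (compute tiles, I/O tiles, maximum cores).
# XEON6_CONFIGS and the helpers below are illustrative names, not Intel tooling.
XEON6_CONFIGS = {
    "6900P": (3, 2, 128),
    "6700P": (2, 2, 86),
    "6500P": (1, 2, 48),
    "6300P": (1, 2, 16),
    "6900E": (2, 2, 288),
    "6700E": (1, 2, 144),
}


def total_tiles(series: str) -> int:
    """Compute tiles plus I/O tiles in the package."""
    compute_tiles, io_tiles, _max_cores = XEON6_CONFIGS[series]
    return compute_tiles + io_tiles


def cores_per_compute_tile(series: str) -> float:
    """Average enabled cores per compute tile at the top core count."""
    compute_tiles, _io_tiles, max_cores = XEON6_CONFIGS[series]
    return max_cores / compute_tiles


if __name__ == "__main__":
    for series in XEON6_CONFIGS:
        print(f"{series}: {total_tiles(series)} tiles, "
              f"{cores_per_compute_tile(series):.1f} cores per compute tile")
```

The 6900P entry comes out at five tiles, which matches the "up to five chiplets" figure given for the P-Core CPUs.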

Platforms and Support

Intel’s higher-end Xeon 6900 “Granite Rapids” CPUs use the LGA 7529 socket on the Birch Stream platform, which supports 1S/2S configurations with up to 500W TDP per CPU. Key specifications include:

- 12 memory channels supporting DDR5 at up to 6400 MT/s or MRDIMM at up to 8800 MT/s

- Up to 96 PCIe Gen 5.0/CXL 2.0 lanes

- 6 UPI 2.0 links at up to 24 GT/s
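A back-of-the-envelope sketch of what the memory figures above imply for theoretical peak bandwidth per socket. It assumes 64 data bits (8 bytes) per channel per transfer with ECC bits excluded, which is an assumption of this example rather than something stated in the article. The function name is hypothetical, and real sustained bandwidth will be lower.

```python
# Assumption: each memory channel moves 8 bytes of data per transfer
# (64 data bits, ECC excluded). Not stated in the article.
BYTES_PER_TRANSFER = 8


def peak_memory_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal, 1 GB = 10**9 bytes)."""
    bytes_per_second = channels * mega_transfers_per_s * 1_000_000 * BYTES_PER_TRANSFER
    return bytes_per_second / 1e9


if __name__ == "__main__":
    # 12 channels at the two memory speeds quoted for the platform.
    print(f"DDR5-6400: {peak_memory_bandwidth_gbs(12, 6400):.1f} GB/s")
    print(f"8800 MT/s: {peak_memory_bandwidth_gbs(12, 8800):.1f} GB/s")
```

Under these assumptions the 12-channel platform peaks at about 614 GB/s with DDR5-6400 and about 845 GB/s with the 8800 MT/s modules.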

Performance Metrics

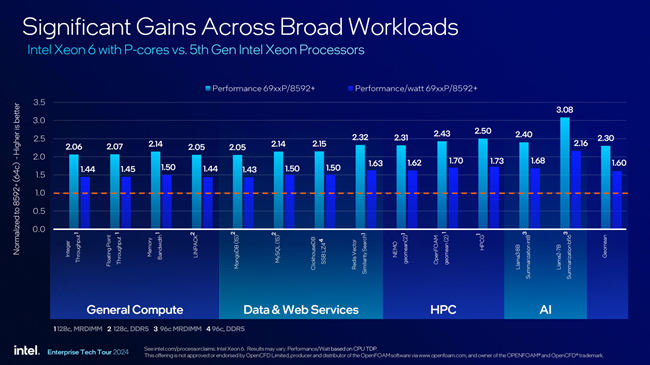

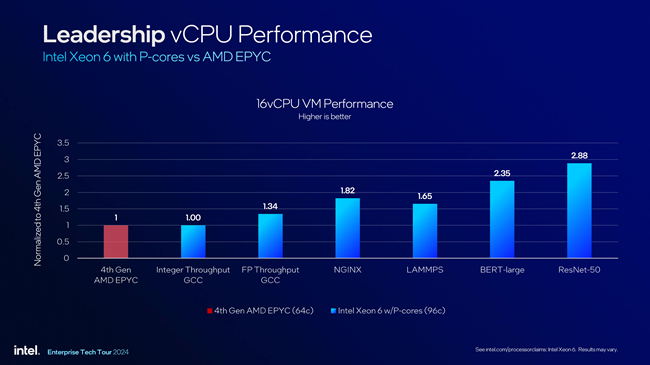

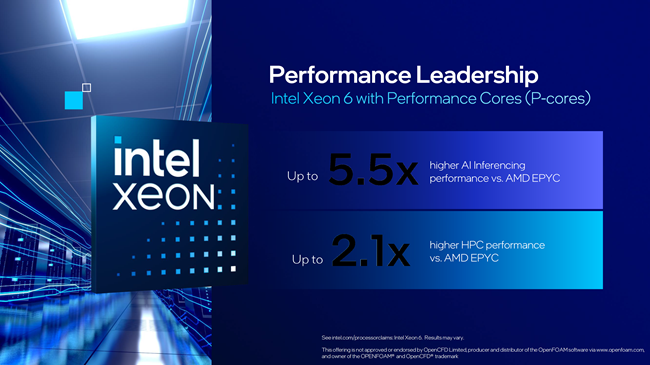

Intel claims the Xeon 6900P delivers significant performance improvements over AMD’s EPYC CPUs.

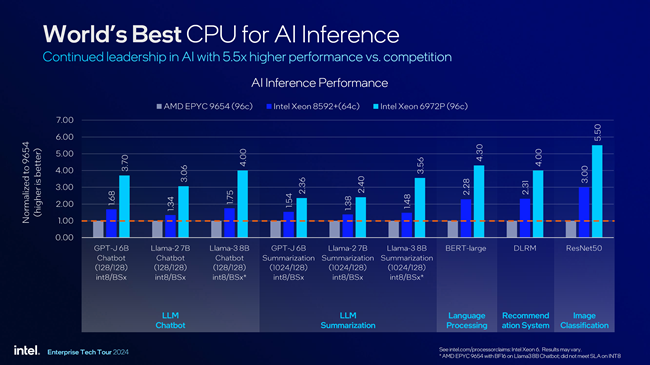

- Intel reports up to 5.5 times higher AI inference performance and 2.1 times higher HPC performance than AMD’s EPYC CPUs.

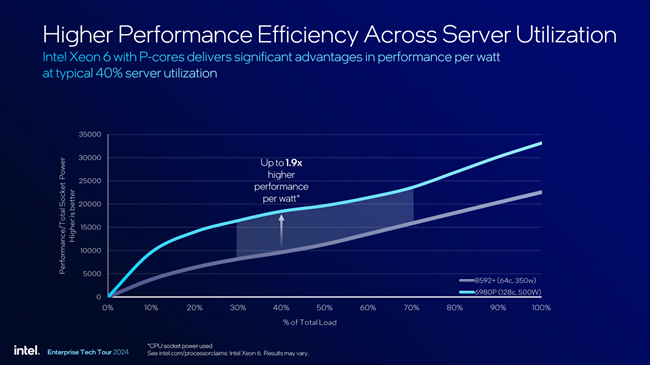

- Compared with its own 5th Gen “Emerald Rapids” Xeon CPUs, Intel says the Xeon 6900P delivers a 2.28x performance increase and a 60% efficiency improvement.

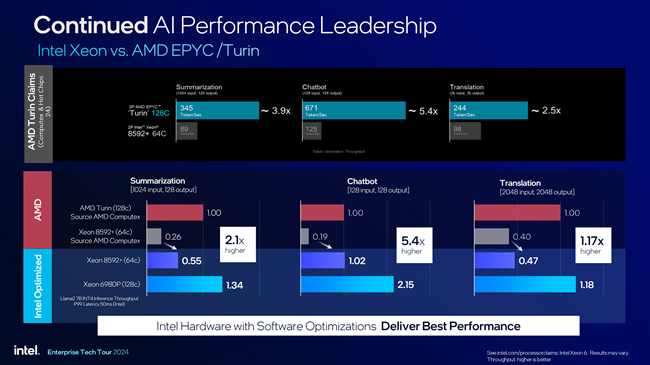

According to Intel, the Xeon 6900P improves performance per watt by 60% and reduces total cost of ownership (TCO) by 30%. In specific benchmarks, Intel reports a 34% gain in summarization, a 2.15x gain in chatbot performance, and an 18% gain in translation.
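The benchmark figures above mix percentage gains with multipliers, which makes them hard to compare at a glance. The sketch below puts them on one scale as speedup factors and takes their geometric mean. The helper names are hypothetical, and the mean is only an illustrative summary of the three quoted numbers, not a figure published by Intel.

```python
from math import prod


def to_speedup(percent_gain: float) -> float:
    """Convert an 'N% boost' into a multiplicative speedup factor."""
    return 1.0 + percent_gain / 100.0


# Figures quoted in the article, all expressed as speedup factors.
SPEEDUPS = {
    "summarization": to_speedup(34),  # 34% boost
    "chatbot": 2.15,                  # already quoted as a multiplier
    "translation": to_speedup(18),    # 18% increase
}


def geometric_mean(values) -> float:
    """Geometric mean, the usual way to average ratios such as speedups."""
    values = list(values)
    return prod(values) ** (1.0 / len(values))


if __name__ == "__main__":
    for task, factor in SPEEDUPS.items():
        print(f"{task}: {factor:.2f}x")
    print(f"geometric mean: {geometric_mean(SPEEDUPS.values()):.2f}x")
```

On the same scale the three results are 1.34x, 2.15x and 1.18x, with a geometric mean of roughly 1.5x.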

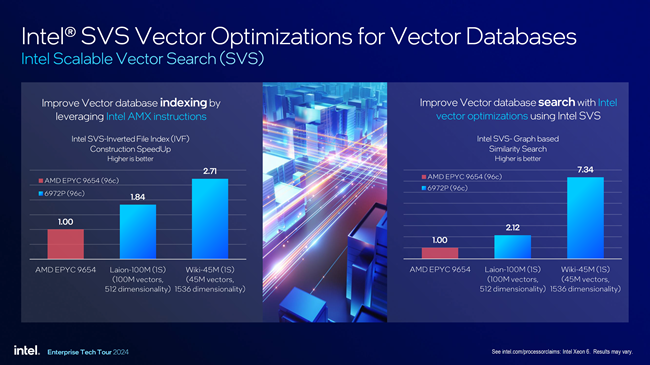

Intel says that, compared with AMD’s EPYC Genoa and upcoming Turin chips, the Xeon 6900P delivers a 3.65x average gain in AI performance and leads in vector database and scalable vector search workloads.

CPU Lineup

Intel launched five SKUs in the Xeon 6900P series:

- Xeon 6980P: 128 cores, 2.0 GHz base, 3.9 GHz boost, 504 MB L3 cache, 500W TDP

- Xeon 6979P: 120 cores

- Xeon 6972P: 96 cores, 2.1 GHz base, 3.9 GHz boost, 480 MB L3 cache, 400W TDP

- Xeon 6952P: 96 cores, 2.1 GHz base, 3.9 GHz boost, 400W TDP

- Xeon 6960P: 72 cores, 2.7 GHz base, 3.9 GHz boost
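To put the lineup on a per-core footing, the sketch below derives L3 cache per core and package TDP per core for the SKUs where the list above gives those figures. The `Sku` class and its methods are hypothetical names for this example, and missing values are left as `None` rather than filled in. TDP covers the whole package, so watts per core is only a rough indicator.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Sku:
    """One SKU with only the figures the article lists (illustrative type)."""
    name: str
    cores: int
    l3_mb: Optional[int] = None   # None where the article gives no figure
    tdp_w: Optional[int] = None   # None where the article gives no figure

    def l3_per_core_mb(self) -> Optional[float]:
        return None if self.l3_mb is None else self.l3_mb / self.cores

    def watts_per_core(self) -> Optional[float]:
        # Package TDP divided by cores; includes uncore and I/O power.
        return None if self.tdp_w is None else self.tdp_w / self.cores


SKUS = [
    Sku("Xeon 6980P", 128, l3_mb=504, tdp_w=500),
    Sku("Xeon 6979P", 120),
    Sku("Xeon 6972P", 96, l3_mb=480, tdp_w=400),
    Sku("Xeon 6952P", 96, tdp_w=400),
    Sku("Xeon 6960P", 72),
]

if __name__ == "__main__":
    for sku in SKUS:
        print(sku.name, sku.l3_per_core_mb(), sku.watts_per_core())
```

With the listed numbers, the 6980P works out to about 3.9 MB of L3 per core and the 6972P to 5 MB per core.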

Overall, the Intel Xeon 6900P lineup marks a strong comeback for Intel in the server market and is positioned to compete closely with AMD’s upcoming Turin CPUs.

Gaudi 3 AI Accelerator: Optimized for Generative AI

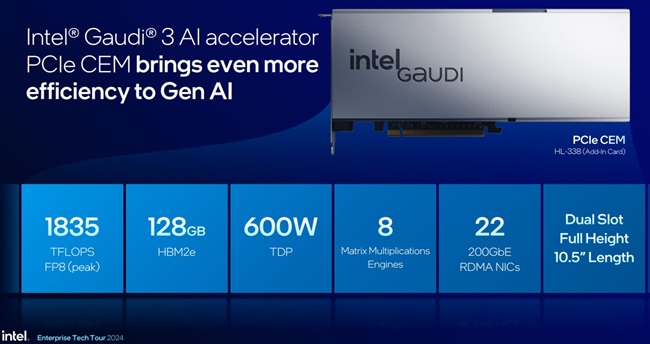

Intel announced the general availability of the Gaudi 3 AI accelerator, designed for large-scale generative AI. Its 64 Tensor processor cores (TPCs) and eight matrix multiplication engines (MMEs) accelerate deep neural network computations.

It features 128 GB of HBM2e memory and 24 ports of 200 Gb Ethernet for scale-out, and it is compatible with PyTorch and Hugging Face models.
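The memory and networking figures above lend themselves to a quick sizing sketch. The example adds up the Ethernet line rates and estimates how many accelerators are needed just to hold a model's weights in HBM. The helper names and the 70-billion-parameter, 2-bytes-per-parameter model are hypothetical choices for illustration. The estimate ignores activations, KV cache and framework overhead, and it treats 1 GB as 10**9 bytes.

```python
import math

# Figures quoted in the article for one Gaudi 3 accelerator.
ETHERNET_PORTS = 24
PORT_SPEED_GBIT_S = 200
HBM_CAPACITY_GB = 128


def aggregate_ethernet_gbit_s(ports: int = ETHERNET_PORTS,
                              port_speed: int = PORT_SPEED_GBIT_S) -> int:
    """Sum of line rates across all ports, in Gb/s per direction."""
    return ports * port_speed


def min_accelerators_for_weights(params_billion: float,
                                 bytes_per_param: int = 2) -> int:
    """Fewest accelerators whose combined HBM can hold the weights alone.

    Hypothetical sizing helper: ignores activations, KV cache and
    framework overhead.
    """
    weights_gb = params_billion * bytes_per_param
    return math.ceil(weights_gb / HBM_CAPACITY_GB)


if __name__ == "__main__":
    total = aggregate_ethernet_gbit_s()
    print(f"Aggregate Ethernet: {total} Gb/s ({total / 8:.0f} GB/s)")
    # Hypothetical 70B-parameter model stored at 2 bytes per parameter.
    print(f"Accelerators for 70B weights: {min_accelerators_for_weights(70)}")
```

The 24 ports add up to 4800 Gb/s, or 600 GB/s, of raw line rate per accelerator. The hypothetical 70B model needs 140 GB for weights and so spans at least two accelerators.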

Collaboration with IBM to Lower TCO

Intel and IBM have partnered to deploy Gaudi 3 AI accelerators on IBM Cloud. This collaboration aims to reduce the total cost of ownership (TCO) for enterprises and improve AI scalability and performance.

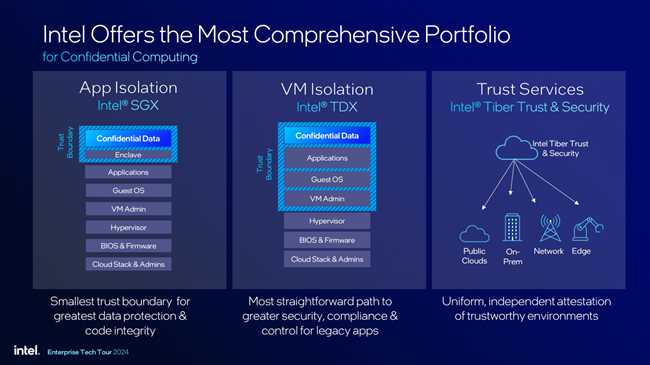

Co-Engineering AI Solutions with Dell Technologies

Intel is working with Dell Technologies to co-engineer AI solutions, focusing on moving generative AI prototypes into production. Dell’s retrieval-augmented generation (RAG) systems leverage Intel’s technologies, addressing scalability and security challenges.

Early Access to AI Systems through Tiber Developer Cloud

Intel’s Tiber Developer Cloud offers developers early access to Xeon 6 and Gaudi 3 systems for testing AI workloads. Gaudi 3 clusters will be available for large-scale deployments next quarter, alongside SeekrFlow, a platform from Seekr that supports AI applications. Updated AI tools, including Intel Gaudi software and PyTorch 2.4, offer enhanced acceleration.

Intel’s Commitment to Scalable, Flexible AI Solutions

Intel continues to lead in AI innovation through partnerships and advanced technology offerings, delivering flexible, scalable, and cost-effective AI solutions for enterprises.

Speaking about the launch, Justin Hotard, Intel Executive Vice President and General Manager of the Data Center and Artificial Intelligence Group, said:

“The demand for AI is driving a significant transformation in data centers, and the industry is looking for more options in hardware, software, and developer tools. With the introduction of Xeon 6 featuring P-cores and Gaudi 3 AI accelerators, Intel is fostering an open ecosystem that empowers our customers to execute their workloads with enhanced performance, efficiency, and security.”