The AI scene is getting incessant buzz from the news cycle, but this time for entirely different reasons. The usual suspect is, of course, OpenAI. Augmented reality has come to the fore, as has the desire of AI companies to jump into hardware. Meta’s releases are no joke: this has been a really big week for Mark Zuckerberg, so important that some people are calling it Meta’s “iPhone moment”. India is taking significant steps towards supercomputer independence while also making news in the EV field with an electric superbike. The Chinese are racing ahead in robotics. It’s a spicy, juicy week at the edge of tomorrow in the world of technology. Let’s dive in.

This article is brought to you in partnership with Truetalks Community by Truecaller, a dynamic, interactive network that enhances communication safety and efficiency. https://community.truecaller.com

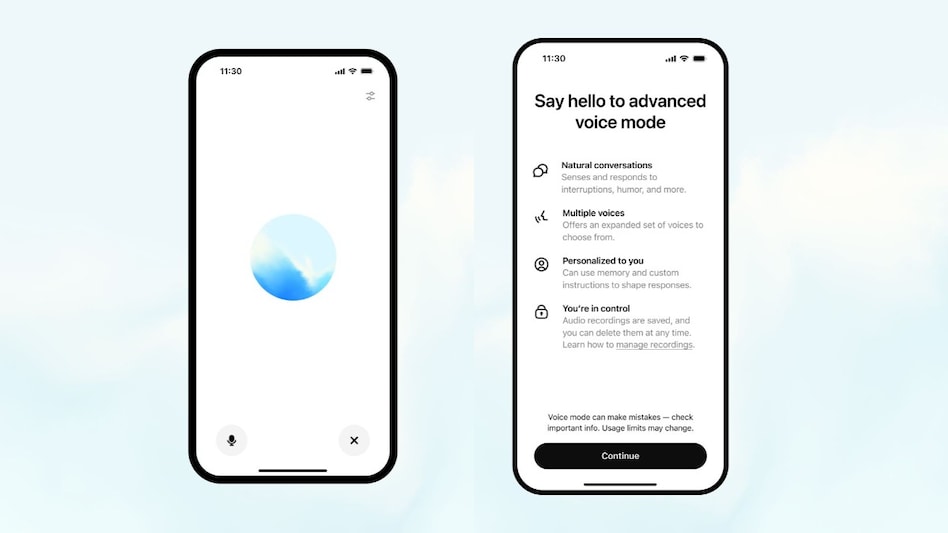

OpenAI “Advanced Voice” is now rolling out to ChatGPT Teams and Plus users

The “Advanced Voice” mode was demoed by OpenAI a few months back, alongside the launch of the GPT-4o (omni) model. Soon after that demo, Scarlett Johansson, who voiced the AI assistant in the movie “Her”, created enough trouble for OpenAI that the company toned down its release and worked out ways to avoid being targeted over any copyright-related issue. Today, with the roll-out, OpenAI has announced 5 new voices for Advanced Voice mode, which is one of the most real-time ways to use ChatGPT so far. You can brainstorm with it, talk to it casually about any topic (conditions apply) and ask it to perform tasks just as you would in text. A lot of people are shocked by its immediacy and by how realistically it mimics a human voice. One user went as far as asking it for help tuning his guitar, and surprisingly it did! This puts it in direct competition with the only other live voice chat mode, the one in Gemini Advanced, which works in a similar way but is not rated as highly as the ChatGPT implementation.

OpenAI top exec leaves company amidst rumors of Sam Altman and Jony Ive working on HARDWARE

It’s been a crazy week since the launch of OpenAI’s latest model with reasoning, o1. Mira Murati, the CTO of OpenAI, has just left the company after six and a half years. Stating that her primary focus will be to work on her own things, she aims to ensure a smooth transition for whoever replaces her. Replying to her tweet, Sam Altman, the CEO of OpenAI, said he will reveal more about the transition plans soon.

At the same time, rumors are swirling that Sam Altman and Jony Ive, previously head of design at Apple, are secretly working on a hardware startup. Murmurs of hardware and AI coming together have been doing the rounds for a long time, especially since Sam Altman said he wanted his own chip-making company and was willing to invest trillions in it. Midjourney is working on some kind of VR hardware, and Perplexity has expressed its intent to make hardware a part of its operations. The smartphone seems to be awaiting a disruption, and all signs point that way.

Jony Ive, the famed designer from England whom Steve Jobs handpicked to design Apple’s iPhone and various other well-designed products, has apparently raised money privately for a company called LoveFrom, with the help of Laurene Powell Jobs’ company, the Emerson Collective, among others, and has found office space for it. There are apparently about 10 employees working in a 32,000 sq ft LittleFox theatre, and their goal is to create an AI-powered computing experience that is less socially disruptive than the iPhone. Something’s cooking and we are about to find out soon. So many people leaving the now “for-profit” OpenAI has raised a lot of questions about the company’s future path, and although the CEO maintains a guarded silence on that, he keeps indicating that hardware will come.

Meta’s Orion Glasses for true AR are here

Talking of hardware, Mark Zuckerberg has won a lot of praise for showing Meta’s first augmented reality glasses, called “Orion”, at the Meta Connect 2024 event and NOT releasing them to the public. Earlier codenamed “Project Nazare”, the system comes in three parts. The first is the glasses themselves, with a thick frame full of sensors and compute resources. There are cameras, a custom eye-tracking system, and a 70 degree field of view for AR thanks to silicon carbide lenses and micro LEDs so small that they can be made transparent. There are speakers and batteries as well, and the craziest part is a separate compute puck that does all the heavy work wirelessly, unlike the Apple Vision Pro.

There is also the neural wristband, which works insanely well for hand gesture identification and so enables a whole new way of interacting with digitally overlaid elements in AR. A lot of people who tried it with Mark Zuckerberg are positive about the experience and say that this is probably Meta’s first iPhone moment, for a product the company has worked on for the past 6 years. Zuckerberg revealed at the event that Meta is NOT releasing these glasses through commercial channels or to the public, and wants to improve the product first. That decision once again earned admiration for its guts, whereas Snapchat was panned heavily for launching its own take on AR glasses last week. Read more about it on their blog.

Meta Quest 3S, New AI updates and Llama 3.2, improved Horizon OS

At Connect 2024, Meta also released its latest take on the VR and mixed reality headset, the Quest 3S, which steps in to replace the Quest 2. The Quest 3 remains the top-end model, with the most features currently available in the lineup. The main changes in the 3S are the headset design, its Fresnel lenses, and a slightly narrower field of view. Powered by a Snapdragon XR2 processor, the Meta Quest 3S with 128GB of storage starts at just $299, a price that aims to keep the momentum for VR going at Meta. The standalone headset runs Horizon OS, which is also getting some updates at Connect 2024. This OS was first announced in April 2024, when it was revealed that other companies can now take advantage of the mixed reality system and build their own hardware with it. For example, ASUS ROG will have a headset running this OS.

Now, with the new AR glasses, Horizon OS has gotten some updates, including multitasking for Meta’s apps like Facebook and Instagram. A redesign shown at the Connect event features a completely overhauled UI with a simplified interface. The system menu is now called the “navigator”. Horizon Worlds destinations are shown most prominently, and the Library tab now seems to show only the six most recently used apps unless you expand it. Regular users of the Meta headset will find this familiar.

Also newly announced is Llama 3.2, with the highlight being the new 1B and 3B mini models, which are text-only and capable of running on tiny devices like smartphones. The release also includes larger multi-modal (vision) models, and together they allow the open-source Llama to be used by a wider range of devices in the future.

There are also tons of multi-modal AI updates to the Meta AI product that lives inside WhatsApp, Facebook and Instagram. In all these places, Meta AI will now have voice interactions, meaning it can talk back. Soon it will have support for celebrity voices: “AI voices of Awkwafina, Dame Judi Dench, John Cena, Keegan Michael Key and Kristen Bell”. Meta AI can now “see” photos, answer queries about them and even make some edits, like quickly changing a background to anything. AI live translation is being tested with a few Instagram creators and influencers, whose videos will be automatically translated, with voice, into other languages. Meta’s “imagine me” feature is also being rolled out, letting you imagine yourself as various characters and in various scenarios, a kind of LoRA-like feature that is mostly popular in GenAI forums. All these updates were delivered at the Connect 2024 event. Check their blog here for more details.
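To put the “runs on a smartphone” claim for the 1B and 3B Llama 3.2 models in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. The parameter counts are the nominal 1 billion and 3 billion (the exact counts differ slightly), the function name is ours, and the estimate ignores activations, the KV cache and runtime overhead, so treat it as an illustration and not a measurement.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Nominal sizes only (assumption); real parameter counts are a little different.
NOMINAL_SIZES = {"1B": 1e9, "3B": 3e9}

for name, params in NOMINAL_SIZES.items():
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"Llama 3.2 {name} at {bits}-bit weights: ~{gb:.1f} GB")
```

Under these assumptions, the 1B model needs about 2 GB at 16-bit precision and about 0.5 GB at 4-bit, which is why small, quantized models are plausible candidates for phones.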

Iris, a wearable that gives infinite memory

Iris is a new, simple piece of AI-based hardware that has been released in a limited way by entrepreneur Advait Paliwal. Its idea is simple: it takes snapshots with its camera at regular intervals.

It takes a picture every minute, captions and organizes them into a timeline, and uses AI to help you remember forgotten details.

There is also a “focus mode” in which it nudges the user when it finds they are getting distracted. It is worn like a pendant that hangs around the neck and takes photos. This might be seen as a privacy-killer, but in a world where CCTVs are everywhere, is there any privacy in public? Hardly. So if someone really wants to solve the problem of forgetting everything, this device might be of real help. Imagine you are like the hero of Nolan’s Memento. You keep forgetting everything, but thankfully your polaroid shots are always there, on your wall, to remind you of your mission. In such a scenario, this kind of device can certainly serve as a dedicated note-taker.

Hardware

Seeed Studio XIAO ESP32S3 Sense – 2.4GHz Wi-Fi, BLE 5.0, OV2640 camera sensor, digital microphone, 8MB PSRAM, 8MB FLASH, battery charge supported, rich Interface, IoT, embedded ML

The key thing to note here is that this device does not just take photos. It also captions them with the help of AI, which handles data management and answers questions as well. Check his blog for more details.
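The capture, caption and timeline flow described above can be sketched in a few lines. This is only an illustration of the idea, not Iris’s actual software: the `Snapshot` class and both functions are hypothetical names, the captions are hard-coded stand-ins for what an AI captioning model would produce, and the “question answering” is reduced to a plain keyword search.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Snapshot:
    taken_at: datetime
    caption: str  # in a real device this would come from an AI captioning model


def build_timeline(snapshots):
    """Group snapshots by the hour they were taken, in chronological order."""
    timeline = defaultdict(list)
    for snap in sorted(snapshots, key=lambda s: s.taken_at):
        hour = snap.taken_at.replace(minute=0, second=0, microsecond=0)
        timeline[hour].append(snap)
    return dict(timeline)


def search(snapshots, keyword):
    """Return snapshots whose caption mentions the keyword (case-insensitive)."""
    keyword = keyword.lower()
    return [s for s in snapshots if keyword in s.caption.lower()]


# One snapshot per minute, with made-up captions for illustration.
start = datetime(2024, 9, 27, 9, 58)
captions = [
    "typing at a desk",
    "pouring coffee",
    "keys left on the kitchen counter",
    "walking to the door",
]
snapshots = [
    Snapshot(start + timedelta(minutes=i), text) for i, text in enumerate(captions)
]

timeline = build_timeline(snapshots)
hits = search(snapshots, "keys")
for hit in hits:
    print(f"{hit.taken_at:%H:%M} {hit.caption}")
```

A real device would replace the keyword search with a language model that reads the captions, but the underlying data is the same: a time-ordered list of captioned moments.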

India’s supercomputer independence and the Ultraviolette F99

The National Supercomputing Mission (NSM) is a collaboration between MeitY (Ministry of Electronics and IT) and DST (Department of Science and Technology). Its stated goal is to create a network of supercomputers across the country to serve scientific researchers and the high-performance computing needs of scholars and engineers. “PARAM” systems have already been strategically deployed, under various names, in several important centers of research. This week, a new addition is in the news. The PARAM “RUDRA” is a high-performance computer that looks like a large server, built for extremely complex workloads. For example, the Giant Metrewave Radio Telescope (GMRT) in Pune will use PARAM Rudra to study Fast Radio Bursts (FRBs) and other celestial phenomena. The Inter-University Accelerator Centre (IUAC) in Delhi will use it for research in advanced materials and atomic interactions. The SN Bose Centre in Kolkata will use the supercomputer to support cutting-edge research in understanding the universe and our planet. These 3 PARAM supercomputers were developed indigenously, according to official channels.

Another really good update for India as a technology brand is the launch of the new electric superbike from Ultraviolette, the F99.

The company claims that the F99 is India’s first ever superbike. Its top speed is 265 km/h, backed by a whopping 120 hp (90 kW) of power from the company’s own electric motor. It goes from 0 to 100 km/h in just 3 seconds, and the bike weighs 178 kg overall. Since the company was founded by aerospace enthusiasts, a lot of focus has gone into aerodynamics and carbon fibre bodywork. If you know the company, you already know that it has its own AI, called “Violette AI”, which constantly monitors the bike, lets you adjust its performance parameters and handles security features as well. The aviation DNA and high-tech ambitions are making waves in an industry where not many competitors exist. The F99 is a bold step from an Indian startup that aims to make its mark on the world. Check out their website to learn more about this bike.
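As a quick sanity check on the F99 figures quoted above, the following sketch converts the 90 kW rating to horsepower and works out the average acceleration implied by the 0 to 100 km/h time. It uses only the numbers from the article plus standard conversion constants. The variable names are ours, the mass is the bike alone with no rider, and real acceleration is not constant, so the result is an average and nothing more.

```python
KW_PER_MECHANICAL_HP = 0.7457   # 1 mechanical horsepower in kilowatts
STANDARD_GRAVITY = 9.80665      # m/s^2

# Figures quoted in the article
power_kw = 90.0
mass_kg = 178.0      # bike only; a rider would add to this
sprint_kmh = 100.0
sprint_s = 3.0

power_hp = power_kw / KW_PER_MECHANICAL_HP
avg_accel = (sprint_kmh / 3.6) / sprint_s      # km/h -> m/s, then divide by time
avg_accel_g = avg_accel / STANDARD_GRAVITY
power_to_weight = power_kw * 1000 / mass_kg    # W per kg

print(f"Power: {power_hp:.1f} hp")
print(f"Average 0-100 km/h acceleration: {avg_accel:.2f} m/s^2 ({avg_accel_g:.2f} g)")
print(f"Power-to-weight: {power_to_weight:.0f} W/kg")
```

The conversion confirms that 90 kW and roughly 120 hp are the same figure, and the 3 second sprint works out to an average of a little under 1 g.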

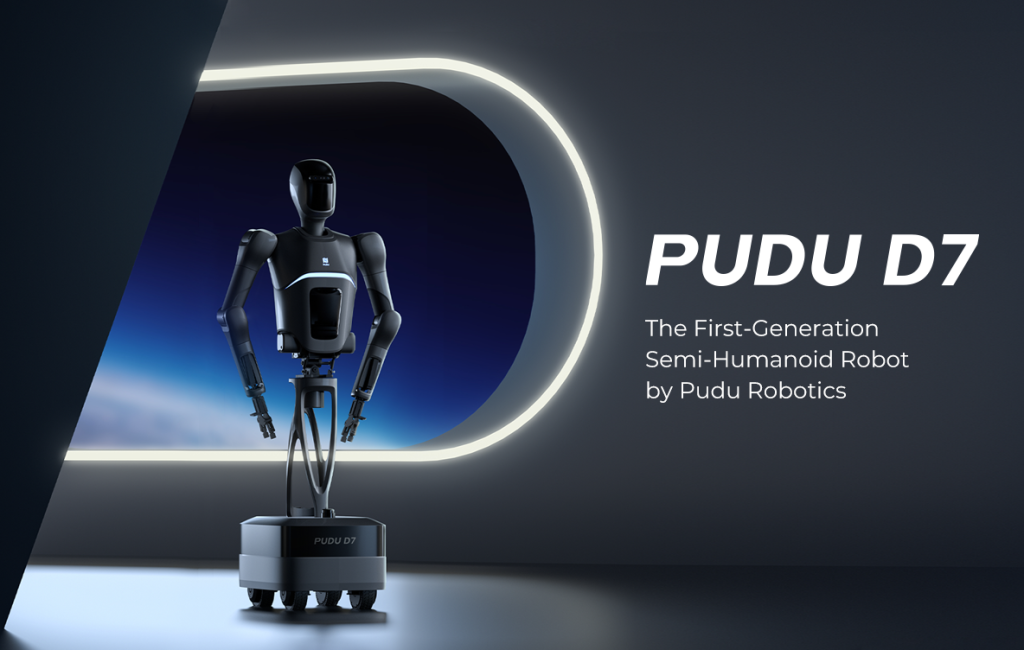

Chinese bot maker “Pudu” unveils first semi-humanoid “D7”

China is not taking a break. Robotics seems to be one of the foremost efforts of many of its leading startups working on humanoids, like Unitree, or in the case of “Pudu”, a semi-humanoid named D7. As you can see from the picture, this is their first-generation product. The focus is on upper body functions, like the dexterity of the arms and shoulders, which let this omnidirectional robot handle complex tasks in industrial settings. It is quite a tall robot, standing 165 cm high yet weighing just 45 kg. It can operate continuously for 8 hours, with a battery capacity exceeding 1 kWh. Its bionic arms can lift loads of 10 kg and have an end-point precision of 0.1 mm, which means the robot can execute its arm movements with startling accuracy. The intelligence system takes a layered approach of “high level” and “low level” planning, which can be steered with instructions from humans.
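The battery and runtime figures above imply a rough power budget for the D7, which a couple of lines of arithmetic make concrete. This assumes the quoted capacity is drained evenly over the full 8 hours, and it uses 1 kWh as a lower bound since the article only says the capacity exceeds that. The variable names are ours.

```python
battery_kwh = 1.0    # lower bound; the quoted capacity "exceeds" 1 kWh
runtime_h = 8.0
robot_mass_kg = 45.0
arm_payload_kg = 10.0

# Average draw if the whole battery is used evenly across the runtime
avg_power_w = battery_kwh * 1000 / runtime_h

# How much the arms can lift relative to the robot's own weight
payload_ratio = arm_payload_kg / robot_mass_kg

print(f"Average power budget: at least {avg_power_w:.0f} W")
print(f"Payload as a share of body weight: {payload_ratio:.0%}")
```

At roughly 125 W on average, the whole robot would draw about as much as a couple of laptops, which suggests it spends much of its shift on light movements, not continuous heavy lifting.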

“We are excited to introduce the PUDU D7, which highlights our commitment to continuous technological and product innovation. Featuring advanced mobility, versatile operational capabilities, and embodied intelligence, this semi-humanoid robot marks a significant milestone in our pursuit of a diverse range of robotic solutions,” said Felix Zhang, Founder and CEO of Pudu Robotics. “By advancing a comprehensive ecosystem of specialized robots, semi-humanoid robots, and humanoid robots, we aim to shape the future of the service robotics industry and deliver exceptional value across various applications.”

Once again, we are in awe of the speed at which robotics companies are moving towards humanoids and semi-humanoids for service use, especially in dangerous industrial and factory environments. This trend will continue to play a big role at the edge of tomorrow.