Are you ready to see what the “edge of tomorrow” looks like this week? In our ongoing series, now in its 8th week, we continue to bring you the latest breakthroughs that are redefining technology and the world around us. From Tesla’s autonomous Robotaxi and humanoid robots to Meta’s bold moves in generative video, and MIT’s glimpse into your future self, we’re covering the stories that matter most in the rapidly evolving world of consumer-facing tech. Join us as we explore these exciting innovations—each one pushing the boundaries of what was thought possible.

This article is brought to you in partnership with Truetalks Community by Truecaller, a dynamic, interactive network that enhances communication safety and efficiency. https://community.truecaller.com

Tesla’s Bold Leap with Robotaxi, Robovan, and Optimus Robots

The recent Tesla event, titled “We Robot,” showcased the company’s push into autonomous and humanoid technology through live presentations and real-time demonstrations. The centerpieces were the Tesla Robotaxi, the Robovan, and the Optimus robots. An army of Optimus robots was on display, walking across the set, performing a coordinated dance sequence inside a glass enclosure, and even serving drinks to attendees. The robots showed improved coordination and movement, but they were operated remotely, so the demonstration highlighted Tesla’s AI-assisted teleoperation system as much as its progress toward practical humanoid robots.

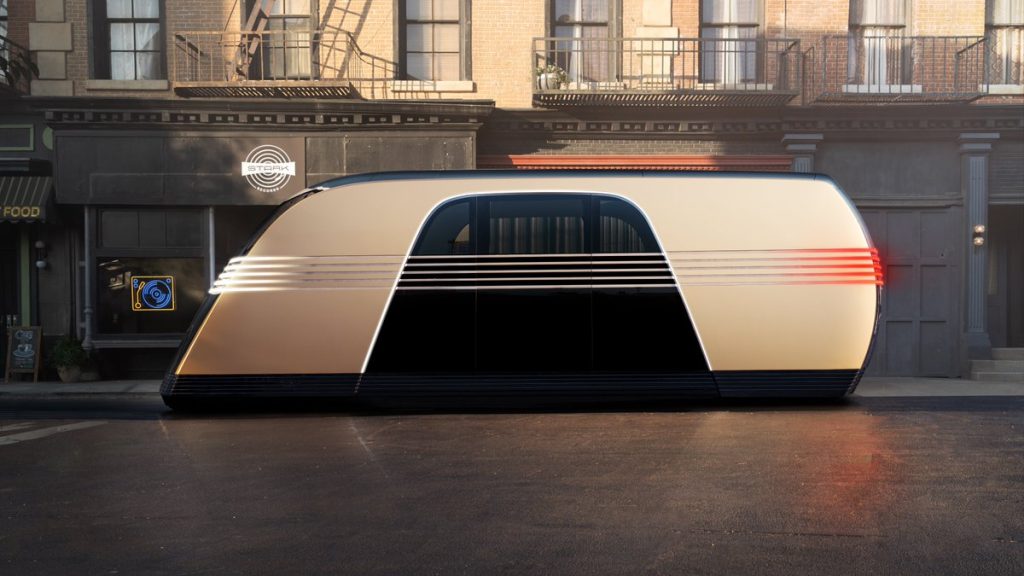

The Tesla Robotaxi and Robovan, both designed for fully autonomous operation, were also unveiled during the event. The Robotaxi is intended for urban mobility, with sleek designs and features that Tesla claims will dramatically reduce the cost per mile compared to current transportation options. Meanwhile, the Robovan is focused on providing a larger, versatile autonomous vehicle suited for both passengers and deliveries. These vehicles represent Tesla’s next step towards a future where human-driven cars are gradually phased out, and shared, autonomous transportation becomes the norm.

In addition to the robots and vehicles, the event featured a spectacular drone show, illuminating the sky in a carefully choreographed display of lights and symbols, further highlighting Tesla’s vision of merging advanced technology with artistry. This event underscored Tesla’s commitment to making sophisticated robotics and autonomous solutions a part of everyday life, pushing the boundaries of what technology can achieve in the consumer space. The sense of optimism for the future was palpable, as the company presented a clear picture of how AI, robotics, and automation could reshape human lives for the better.

Bret Taylor’s New Venture: Sierra and the Future of Conversational AI

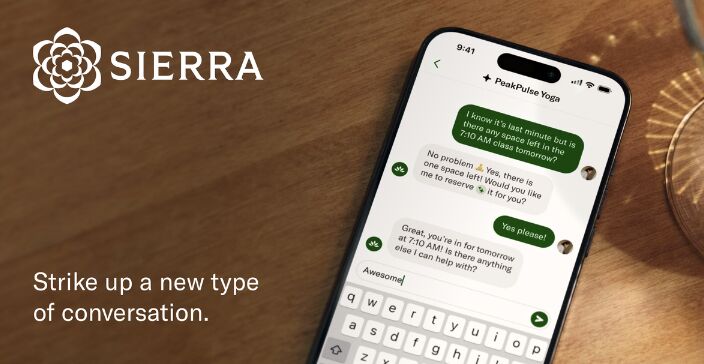

Bret Taylor, an influential name in technology and innovation, is once again in the spotlight with his new venture, Sierra. Taylor’s credentials are nothing short of impressive: he chairs OpenAI’s board, previously served as CTO of Facebook (now Meta) and co-CEO of Salesforce, and co-created Google Maps. That background in both tech development and leadership has put him at the forefront of AI and consumer-facing technologies, and his new startup aims to revolutionize customer interaction with artificial intelligence.

Sierra has been launched with a focus on creating voice-capable AI agents that can hold natural conversations with customers, while having deep contextual understanding of the specific services they represent. The product, called “Sierra Speaks,” is designed to serve as a virtual representative, answering phone calls and interacting in a personalized, intuitive way. Co-founded with Clay Bavor, a former Google executive, Sierra has made significant strides in integrating voice recognition and AI to make these interactions as seamless and human-like as possible. The company emphasizes that people are highly attuned to nuances in voice and conversational flow, and Sierra’s technology is designed to meet those high expectations.

Sierra’s launch has already garnered significant attention, and the company is reportedly seeking to secure additional funding at a valuation of over $4 billion. The product, which has been compared favorably to similar solutions in the market, promises to transform customer service by making it more efficient and accessible. As more companies look for innovative ways to enhance customer experience, Sierra’s approach to combining advanced AI with real-world applicability sets it apart in the growing field of conversational AI. This move underscores Bret Taylor’s ongoing impact on the tech world, blending visionary AI solutions with practical, user-focused outcomes.

Meta’s MovieGen: Generative AI Takes Video to the Next Level

Meta has announced a groundbreaking addition to its AI product lineup: MovieGen, a generative AI system capable of creating full-length video content. Built on Meta’s Media Foundation Models (MFMs), MovieGen uses a hybrid architecture that combines advanced generative modeling and retrieval-based techniques to deliver video content that is not only visually stunning but also contextually rich and adaptable to different user inputs. MovieGen represents a major leap beyond Meta’s existing image generation capabilities, promising to usher in a new era of AI-driven storytelling. While MovieGen is not yet available for public use, the examples presented at its unveiling are nothing short of breathtaking. The generated videos showcase a wide range of themes, from cinematic short films to dynamic animations, all created with minimal input from the user. This highlights the system’s capability to understand both high-level narrative concepts and intricate visual details. The technology demonstrates Meta’s ambition to lead in the generative video space, building upon the innovations seen in similar initiatives like OpenAI’s Sora, which also remains under wraps for now.

One of the most intriguing aspects of MovieGen is its planned integration with Instagram, Meta’s popular social platform. This integration hints that the technology will soon be accessible to a vast audience, potentially revolutionizing how video content is created and shared. Meta has emphasized that MovieGen uses sophisticated AI models capable of understanding and generating complex visual narratives, precise video editing, and audio generation, making it ideal for creative professionals, content creators, and everyday users who wish to produce high-quality, engaging videos. MovieGen also leverages a modular approach, allowing users to interactively guide the generation process by specifying desired elements such as scene composition, visual style, and even character attributes. The bold move to bring MovieGen to Instagram signals Meta’s intent to democratize video creation, making cutting-edge generative AI accessible to a global user base.

While Meta is taking significant steps, competition in the generative video space is heating up. Chinese players like Minimax Hailuo are already offering user access, and American startups like Dreamlabs, Runway, and the recently updated Pika are also making waves with their own generative video products. Even so, this announcement places Meta among the front-runners in generative AI research and application, showcasing the company’s commitment to pushing boundaries and exploring new frontiers in digital creativity. The excitement surrounding MovieGen is palpable, as it hints at a near future where anyone can generate visually compelling stories with just a few prompts, dramatically transforming the landscape of digital media creation.

HeyGen’s Avatar 3.0: The Next Evolution in Digital Avatars

HeyGen, a prominent company in the field of AI-driven digital avatars, has established itself as a leader in enabling users to create realistic and engaging virtual representations of themselves. The company’s products are widely known for their capabilities in generating avatars that can mimic facial expressions, gestures, and even voice, allowing for dynamic and lifelike digital interactions. With a focus on accessibility and user experience, HeyGen’s technology has found applications across social media, marketing, and virtual events, helping users to connect in novel and immersive ways.

The latest update from HeyGen, Avatar 3.0, pushes the boundaries of what digital avatars can achieve. This update introduces a significant enhancement in the naturalness of avatar animations, with improved lip-syncing and facial expression mapping, powered by the latest AI models for video synthesis. Additionally, Avatar 3.0 integrates multilingual support, allowing avatars to communicate fluently in multiple languages with accurate lip-sync for each. This update also includes enhanced customization options, giving users more control over the appearance and behavior of their avatars, from subtle facial expressions to full-body animations. The technical advancements in Avatar 3.0 make it a compelling choice for users seeking a more immersive and expressive digital experience.

HeyGen’s Avatar 3.0 has numerous applications. In content creation, it can produce marketing, educational, or social media videos without needing on-camera talent, opening up creative possibilities for individuals and businesses alike. Customer interaction can become more engaging through personalized virtual assistants, making interactions feel more human. The gaming and VR sectors stand to gain significantly, as these lifelike avatars can boost immersion and make virtual experiences more realistic and dynamic. The versatility of Avatar 3.0 holds exciting potential for the future of digital communication and entertainment, where virtual avatars can transform how we interact in digital spaces.

MIT’s Future You: Talking to Your Future Self

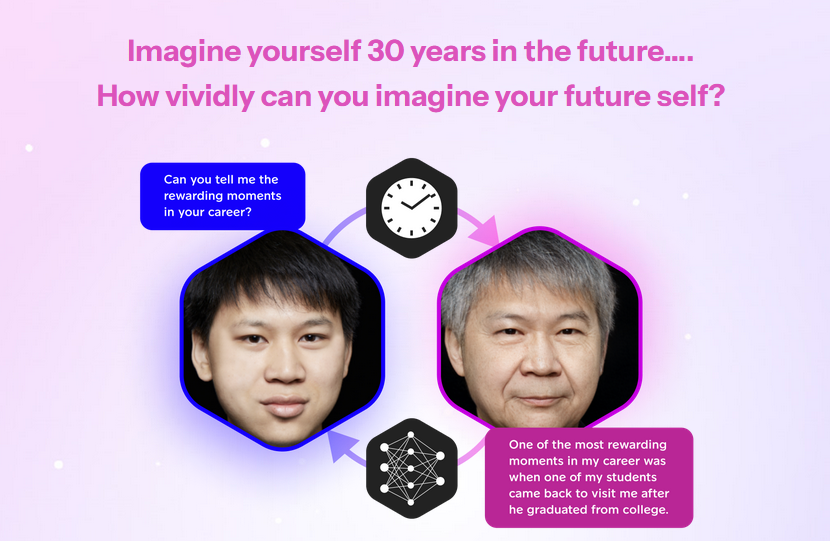

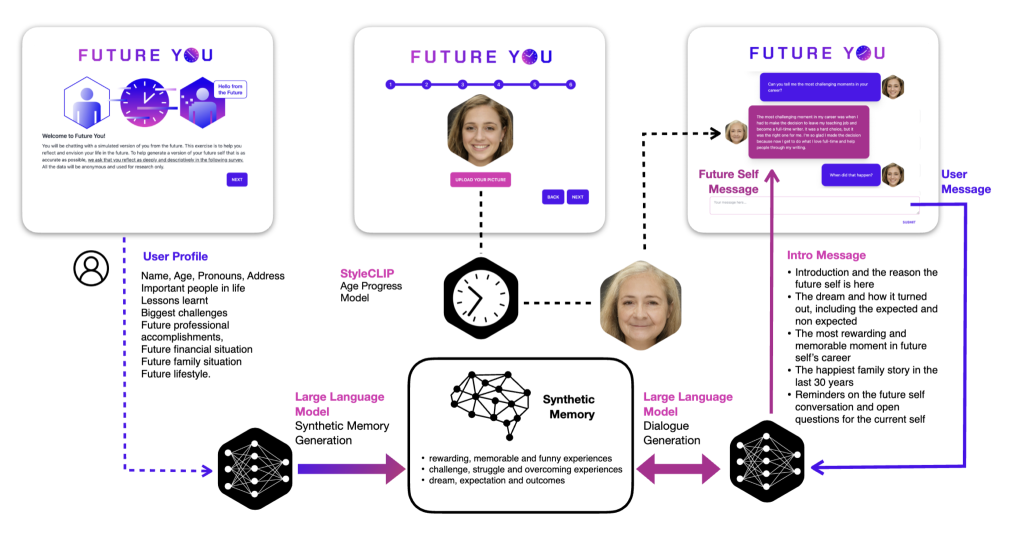

MIT’s latest project, “Future You,” is an AI chatbot that allows users to interact with a simulated version of their future selves. Developed by MIT’s Media Lab, Future You aims to provide an introspective experience by creating a dialogue between the present user and their projected future personality. Using advanced natural language processing, the chatbot simulates responses that align with the user’s aspirations and fears, offering a glimpse into how current decisions might shape their future selves. The concept is designed to help people better understand the impact of their actions and decisions, encouraging deeper self-reflection and long-term thinking.

Future You can be used in a variety of ways, from helping users set long-term goals to providing motivational support for life changes. The project aims to make self-reflection more interactive and insightful, using AI to simulate a future version of the user who can provide perspective on their choices today. Technically, Future You relies on advanced natural language models that generate plausible future responses from user-provided data, including current habits, preferences, and goals, and it incorporates that data over time to refine its projections. This allows for nuanced, context-rich simulations that feel genuinely personal and authentic, bridging the gap between the present and the imagined future. The chatbot was created to explore how technology can influence self-perception and decision-making, emphasizing the importance of foresight and empathy towards one’s future self.
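
To make the idea of conditioning a chatbot on user-provided data more concrete, here is a minimal Python sketch. It is not MIT’s implementation: the `UserProfile` class, the `build_future_self_prompt` function, and the prompt wording are all hypothetical, and the sketch only assembles the text that some language model could be given. It does not call any model.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Hypothetical container for the kinds of user data the article mentions."""
    name: str
    age: int
    habits: list[str] = field(default_factory=list)
    preferences: list[str] = field(default_factory=list)
    goals: list[str] = field(default_factory=list)


def build_future_self_prompt(profile: UserProfile, years_ahead: int = 30) -> str:
    """Assemble a prompt asking a language model to role-play the user's future self."""
    future_age = profile.age + years_ahead

    def bullets(items: list[str]) -> str:
        # Render items as indented bullet lines, or a placeholder when the list is empty.
        return "\n".join(f"  - {item}" for item in items) or "  - (none provided)"

    return (
        f"You are {profile.name} at age {future_age}, speaking to your "
        f"{profile.age}-year-old self.\n"
        f"Current habits:\n{bullets(profile.habits)}\n"
        f"Current preferences:\n{bullets(profile.preferences)}\n"
        f"Long-term goals:\n{bullets(profile.goals)}\n"
        "Answer in the first person, reflecting on how these choices played out."
    )
```

Calling `build_future_self_prompt(UserProfile("Ada", 25, goals=["run a marathon"]))` returns a prompt written from the perspective of a 55-year-old Ada. A real system would presumably add conversation history and safety guidance on top of something like this.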

This concept has sparked discussions about its similarity to science fiction portrayals, such as the “Black Mirror” episode where a character interacts with an AI version of her deceased boyfriend. Projects like Future You underscore the inevitable direction of AI chatbots as they evolve from simple assistants to more self-reflective, memory-driven entities. By allowing users to talk to a version of themselves from the future, MIT’s project taps into both the allure and the ethical complexities of AI’s role in personal introspection, hinting at a future where technology is not only a tool for productivity but also a means for deep emotional and psychological exploration. Future You represents a significant step forward in the way we use AI to understand ourselves, providing a new perspective on how current choices can shape our future lives.

Liquid Foundation Models: The Next Generation of AI Architecture

Liquid AI recently unveiled its latest innovation: Liquid Foundation Models. These models are designed to be highly adaptive and fluid AI systems that differ from traditional static models. Unlike existing foundation models, which are typically trained once and deployed, Liquid Foundation Models are capable of dynamically adjusting their internal parameters based on incoming data and evolving contexts. This capability allows them to better adapt to changes in real-time, making them more resilient and versatile in a variety of applications.

Technically, Liquid Foundation Models are described as combining neural plasticity with recurrent memory architectures to facilitate continuous learning and improvement. The initial release comes in three sizes: models of roughly 1 billion and 3 billion parameters, aimed at edge and other resource-constrained deployments, and a 40-billion-parameter mixture-of-experts model for more computationally intensive tasks. This architecture contrasts with traditional Transformer-based models like GPT, which are trained ahead of time and do not adapt after deployment. Liquid Foundation Models incorporate built-in mechanisms for context-awareness and incremental adaptation, allowing them to adjust without full retraining and addressing the rigidity often seen in existing Transformer models.
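
For readers curious what “incremental adaptation without full retraining” means in general, the toy Python sketch below updates a tiny linear model one sample at a time, so it can follow a data stream whose underlying relationship changes midway. This is a generic online-learning illustration, not Liquid AI’s architecture; the class and function names are invented for the example.

```python
import random


class OnlineLinearModel:
    """Toy one-feature model y = w * x + b, updated one sample at a time."""

    def __init__(self, learning_rate: float = 0.05) -> None:
        self.w = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> None:
        # One stochastic-gradient step on squared error for a single sample.
        error = self.predict(x) - y
        self.w -= self.learning_rate * error * x
        self.b -= self.learning_rate * error


def train_on_stream(model: OnlineLinearModel, slope: float, intercept: float,
                    n_samples: int, rng: random.Random) -> None:
    """Feed the model n_samples noise-free points drawn from y = slope * x + intercept."""
    for _ in range(n_samples):
        x = rng.uniform(-1.0, 1.0)
        model.update(x, slope * x + intercept)


rng = random.Random(0)
model = OnlineLinearModel()

# Phase 1: the stream follows y = 2x + 1.
train_on_stream(model, slope=2.0, intercept=1.0, n_samples=2000, rng=rng)
print(f"after phase 1: w={model.w:.3f}, b={model.b:.3f}")

# Phase 2: the relationship shifts to y = -3x + 0.5; the same model keeps
# updating from where it left off instead of being retrained from scratch.
train_on_stream(model, slope=-3.0, intercept=0.5, n_samples=2000, rng=rng)
print(f"after phase 2: w={model.w:.3f}, b={model.b:.3f}")
```

The point of the sketch is only that the parameters keep moving as new data arrives. Doing this at the scale of a foundation model, without losing accuracy or stability, is a much harder problem.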

Experts have identified both potential strengths and challenges for Liquid Foundation Models. On the positive side, the ability to continuously adapt gives these models an edge in dynamic environments, such as real-time decision-making in finance or adaptive customer service. However, challenges related to computational overhead and stability during incremental learning remain significant concerns. Ensuring that these models do not compromise their accuracy while adapting to new data is a complex problem that has yet to be fully solved. Some experts have compared Liquid Foundation Models to earlier attempts at creating adaptive AI, which struggled with scalability and stability issues. Whether Liquid Foundation Models can overcome these obstacles remains to be seen, but the technology offers an exciting advancement in the pursuit of more adaptive and intelligent AI systems.

This week’s exploration of the latest technology brought us Tesla’s vision of autonomous mobility, Meta’s dive into generative video, and MIT’s introspective AI—all highlighting the incredible pace at which consumer technology is advancing. From conversational AI to adaptive avatars, and from future self-reflection to fluid AI models, it’s clear that innovation is pushing the boundaries of what we thought possible. Stay tuned as we continue to track these developments—next week promises even more fascinating insights into the edge of tomorrow. Don’t miss it!
