With AI becoming part of everyday use, many people are exploring ways to run Large Language Models (LLMs) locally on their laptops or desktops. A popular choice for this is LM Studio, an application built on the llama.cpp project, which has no dependencies and can run using only the CPU.

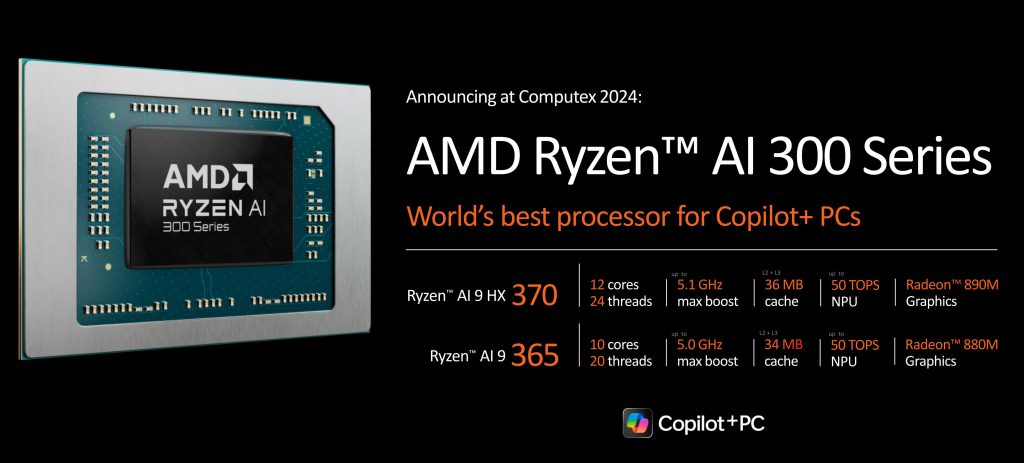

Capitalizing on the popularity of LM Studio, AMD showcased performance gains for LLMs using its latest Ryzen AI processors. With the new AMD Ryzen AI 300 Series processors, the company promises state-of-the-art workload acceleration and industry-leading performance in llama.cpp-based applications like LM Studio for x86 laptops.

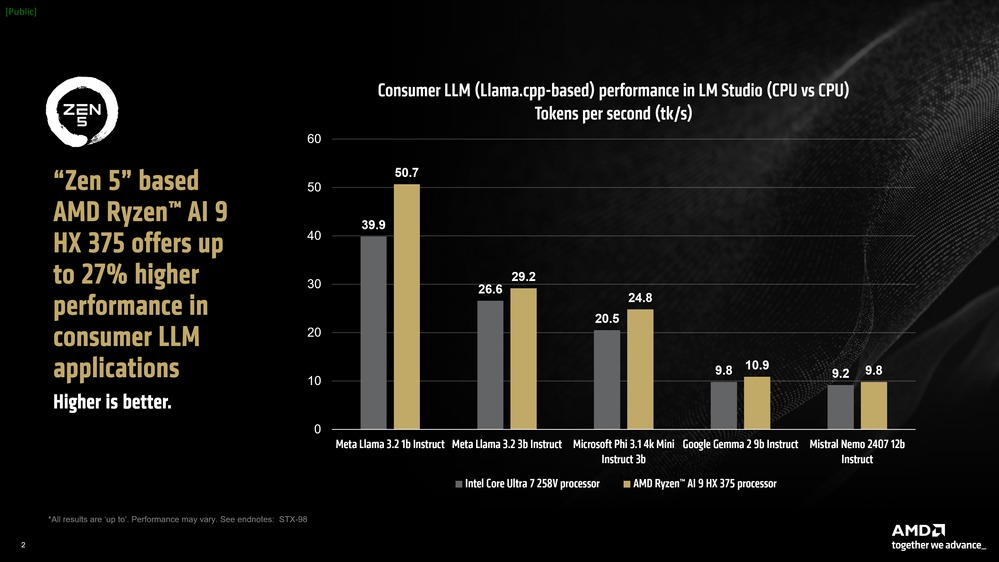

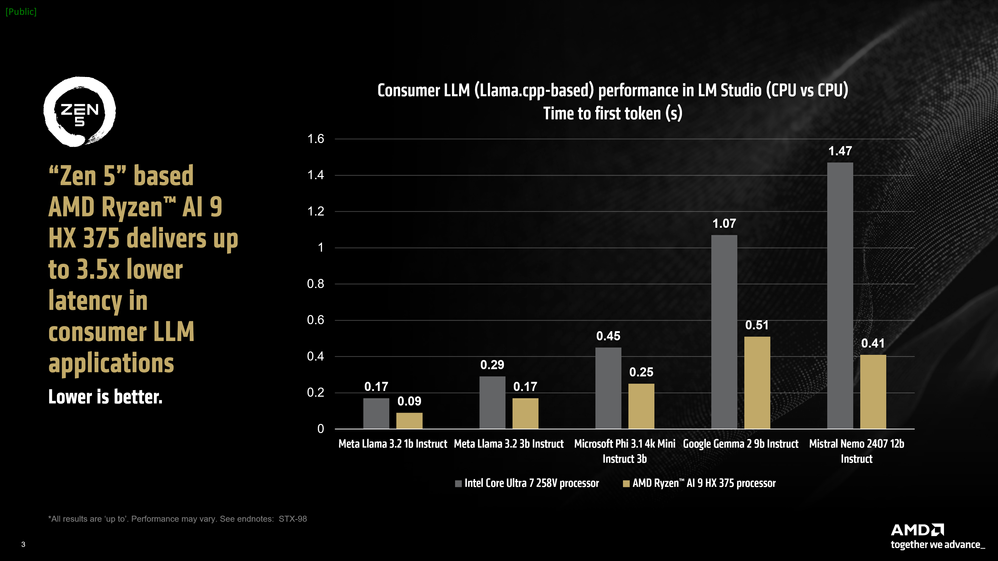

Performance Comparisons:

AMD compared its Ryzen AI 9 HX 375 against an Intel Core Ultra 7 processor. On tokens per second (tk/s), which measures how quickly an LLM generates its response, AMD reports up to 27% faster performance. AMD also reports 3.5x faster time to first token, the delay between submitting a prompt and the model producing its first output token.
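
To make the two metrics concrete, here is a minimal Python sketch that derives time to first token (TTFT) and tk/s from request timestamps and turns them into "x times faster" ratios. The `GenerationRun` class, the helper functions, and all timings are hypothetical and made up for illustration; they are not AMD's benchmark harness or LM Studio's internals. It also assumes tk/s is measured over the decode phase only (tokens after the first, divided by the time spent generating them). Other tools may define it differently.

```python
from dataclasses import dataclass


@dataclass
class GenerationRun:
    """Timestamps (seconds) for one hypothetical LLM request."""
    request_sent: float    # prompt submitted
    first_token_at: float  # first output token received
    finished_at: float     # last output token received
    output_tokens: int     # total tokens generated


def time_to_first_token(run: GenerationRun) -> float:
    """Delay before the model starts responding (lower is better)."""
    return run.first_token_at - run.request_sent


def tokens_per_second(run: GenerationRun) -> float:
    """Decode throughput (higher is better).

    Assumption: counts the tokens generated after the first one and divides
    by the time spent producing them, so prompt processing is excluded.
    """
    return (run.output_tokens - 1) / (run.finished_at - run.first_token_at)


def speedup(baseline: float, candidate: float, lower_is_better: bool = False) -> float:
    """How many times faster `candidate` is than `baseline`."""
    return baseline / candidate if lower_is_better else candidate / baseline


# Made-up timings for two machines running the same prompt.
machine_a = GenerationRun(request_sent=0.0, first_token_at=0.5, finished_at=10.5, output_tokens=201)
machine_b = GenerationRun(request_sent=0.0, first_token_at=0.25, finished_at=8.25, output_tokens=201)

tps_gain = speedup(tokens_per_second(machine_a), tokens_per_second(machine_b))
ttft_gain = speedup(time_to_first_token(machine_a), time_to_first_token(machine_b), lower_is_better=True)
print(f"tk/s: {tps_gain:.2f}x, TTFT: {ttft_gain:.2f}x")  # tk/s: 1.25x, TTFT: 2.00x
```

A 1.25x tk/s ratio is what a "25% faster" claim means. The TTFT ratio is inverted because lower latency is better, which is how a "3.5x faster time to first token" figure should be read.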

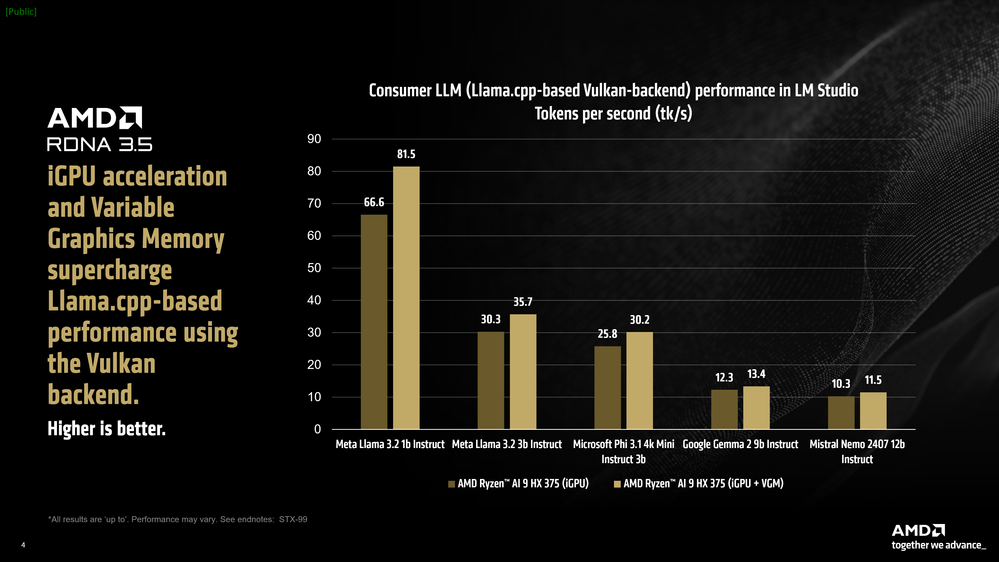

AMD Ryzen AI processors use NPUs based on the AMD XDNA 2 architecture for persistent AI tasks such as Copilot+ workloads, and rely on the integrated GPU (iGPU) for on-demand AI tasks. The Ryzen AI 300 Series processors also include a feature called Variable Graphics Memory (VGM), which lets the iGPU use its 512 MB dedicated memory allocation plus a second block of memory taken from the “shared” portion of system RAM.

According to AMD, combining iGPU acceleration through the vendor-agnostic Vulkan API with VGM delivers a significant average performance increase on consumer LLMs. “After turning on VGM (16GB), we saw a further 22% average uplift in performance in Meta Llama 3.2 1b Instruct for a net total of 60% average faster speeds, compared to the CPU, using iGPU acceleration when combined with VGM,” said the company.
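
Because the 22% VGM uplift is stacked on top of the iGPU gain, the two percentages multiply rather than add. The Python sketch below (helper names are hypothetical) backs out the iGPU-only uplift implied by AMD's figures. It assumes the 22% is measured relative to iGPU acceleration without VGM. Since AMD reports averages across runs, the result is only an approximation.

```python
def compound_uplift(*uplifts: float) -> float:
    """Stack successive relative uplifts (0.22 means +22%) into one net uplift."""
    factor = 1.0
    for uplift in uplifts:
        factor *= 1.0 + uplift
    return factor - 1.0


def implied_prior_uplift(net: float, further: float) -> float:
    """Uplift that, followed by `further`, would produce `net` overall."""
    return (1.0 + net) / (1.0 + further) - 1.0


# AMD's reported figures: +60% net vs. CPU, of which a further +22% comes from VGM.
igpu_only = implied_prior_uplift(net=0.60, further=0.22)
print(f"implied iGPU-only uplift: {igpu_only:.1%}")  # implied iGPU-only uplift: 31.1%

# Sanity check: stacking the two gains recovers roughly +60%.
print(f"recombined: {compound_uplift(igpu_only, 0.22):.1%}")  # recombined: 60.0%
```

Under that assumption, iGPU acceleration alone accounts for roughly a 31% average gain over the CPU, and VGM supplies the rest.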

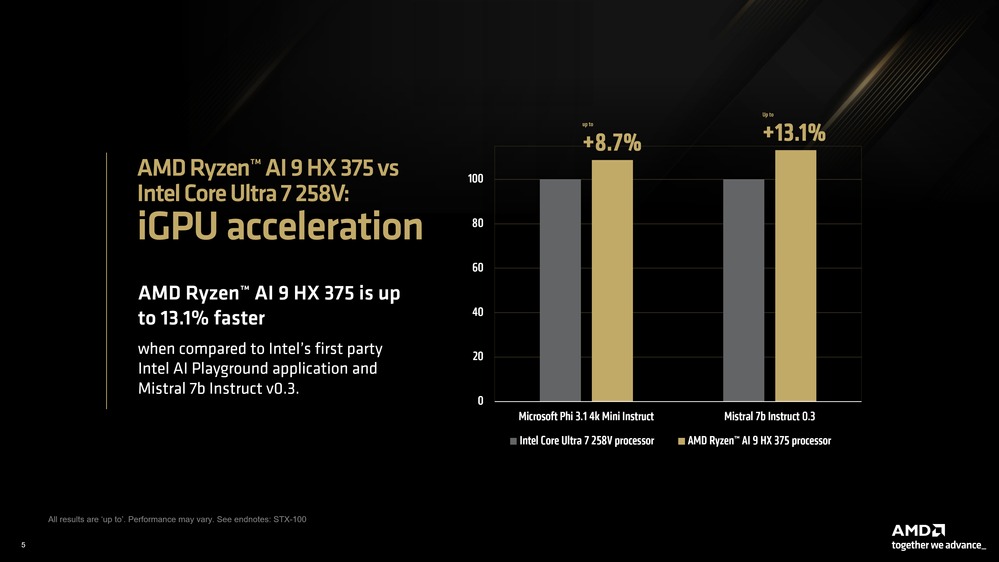

AMD also reports that its iGPU outperformed Intel's iGPU, which was tested with Intel's first-party AI Playground application. The Ryzen AI chip ran 8.7% faster with Microsoft Phi 3.1 Instruct and 13% faster with Mistral 7B Instruct v0.3.

Summing up its position, AMD said:

> AMD believes in advancing the AI frontier and making AI accessible for everyone. This cannot happen if the latest AI advances are gated behind a very high barrier of technical or coding skill – which is why applications like LM Studio are so important. Apart from being a quick and painless way to deploy LLMs locally, these applications allow users to experience state-of-the-art models pretty much as soon as they launch (assuming the llama.cpp project supports the architecture).
>
> AMD Ryzen AI accelerators offer incredible performance and turning on features like Variable Graphics Memory can offer even better performance for AI use cases. All of this combines to deliver an incredible user experience for language models on an x86 laptop.