Imagine coding tools smart enough to understand your unique development needs, regardless of platform, and enterprises securing their AI models while drawing on live web data. Picture robots moving with human-like grace, mastering complex tasks at home and at work. This week, we explore the tech shaping our future: GitHub Copilot expands to multiple models, from OpenAI to Google; Google Vertex AI protects private data while pushing performance; Clone Robotics crafts lifelike robotic limbs; Apple integrates privacy-focused AI into its ecosystem; Recraft AI reimagines generative design; OpenAI’s ChatGPT now pulls live web info; Autodesk bridges video and 3D interaction; and NVIDIA partners with xAI on Spectrum-X for transparent AI. Ready for the future? Let’s dive in.

This article is brought to you in partnership with Truetalks Community by Truecaller, a dynamic, interactive network that enhances communication safety and efficiency. https://community.truecaller.com

GitHub’s AI Revolution: Copilot and Spark Redefine Coding

GitHub, the popular code hosting platform acquired by Microsoft in 2018, has offered the Copilot AI toolset for a few years now, integrating large language models fine-tuned for coding into the developer workflow. Initially, all of the models came from OpenAI because of Microsoft’s partnership with the company, but a commitment to openness has now brought in multiple models: Anthropic’s Claude 3.5 Sonnet and Google’s Gemini 1.5 Pro now sit alongside OpenAI’s o1-preview and o1-mini. The “multi-model” Copilot marks the first time a Microsoft product has incorporated AI models from competing tech giants like Google and Anthropic, both of which have demonstrated strong programming capabilities.

This evolution of GitHub Copilot emphasizes developer choice, bringing multiple AI capabilities under one roof. The move expands GitHub’s AI offerings and positions Copilot as a versatile tool that can meet different developer preferences. Developers can lean on the particular strengths of each model, which can mean better suggestions, more efficient coding, and a smoother development experience. By integrating Google’s Gemini and Anthropic’s Claude, GitHub signals a shift toward offering developers the best available AI tools, regardless of which company built them.

GitHub has also introduced “GitHub Spark,” a fully AI-native tool for building applications simply by conversing with Copilot. The move is a direct challenge to existing offerings like Cursor and Replit Agent, both of which had gained significant traction in previous weeks. Microsoft is arguably late to this party, but that is unlikely to stop the huge community of developers already familiar with GitHub from trying it out, and Spark may even draw in people who have never coded before. The landscape of AI-driven coding tools will likely look very different by next year.

Grounded AI on Vertex: Google’s “Custom AI” offering

Google Cloud’s Vertex AI platform has introduced an advanced feature called Grounding, designed for enterprises that need both cutting-edge AI and stringent data security. Grounding lets developers tie a model’s responses to private datasets, producing generative AI outputs that are relevant to specific use cases without exposing sensitive data. Rather than relying only on what a public model absorbed from vast, open training data, Grounding anchors answers in the sources an organization connects, without retraining the model, so outputs are tailored while the data stays under the company’s control. Moreover, integration with the Gemini API lets companies draw on Google Search to give the AI a better understanding of context.

The Gemini API integration lets grounded models pull up-to-date information directly from Google Search, giving them access to live, real-world data for more precise and relevant responses. Companies can therefore work with their own proprietary data while also benefiting from current information on the web. This hybrid approach lets models contextualize internal datasets with broader, real-time information, improving the depth and quality of outputs. It is a significant differentiator from generative AI models that rely solely on static or outdated training data.
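
To make the grounding idea more concrete, here is a minimal Python sketch of the general pattern: pick the private documents most relevant to a question, then build a prompt that tells the model to answer only from those sources and cite them. This is a hypothetical illustration, not the Vertex AI API; the `Doc` class and the `retrieve` and `build_grounded_prompt` functions are made-up names, and simple keyword overlap stands in for the embedding-based retrieval a production system would more likely use.

```python
"""Toy illustration of grounding: answer only from retrieved private snippets.

Hypothetical sketch, NOT the Vertex AI API. It shows the general idea of
selecting relevant private documents and building a prompt that tells a
model to stay within them.
"""
import re
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase word tokens; a real system would likely use embeddings instead.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Doc], k: int = 2) -> list[Doc]:
    # Rank documents by how many query words they share (keyword overlap),
    # dropping documents that share none.
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in docs]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_grounded_prompt(query: str, docs: list[Doc]) -> str:
    # Place the retrieved snippets in the prompt and ask for cited answers.
    sources = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not contain the answer, say so.\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    corpus = [
        Doc("hr-1", "Employees accrue 20 vacation days per year."),
        Doc("it-7", "VPN access requires a hardware security key."),
        Doc("fin-3", "Expense reports are due within a month."),
    ]
    question = "How many vacation days do employees get?"
    hits = retrieve(question, corpus)
    print([d.doc_id for d in hits])
    print(build_grounded_prompt(question, hits))
```

Run as a script, the example selects only the HR snippet for the vacation question and prints the assembled prompt; in a real deployment, the equivalent grounding step would be configured in the platform and the model would receive the retrieved context.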

Additionally, Vertex AI Studio offers enterprises a hands-on way to build, test, and deploy customized AI models with enhanced privacy controls. It lets organizations experiment with different levels of model customization and quickly set up grounding for their specific needs, in sharp contrast to the “one-size-fits-all” nature of many public AI models. Combined with grounding and the Google Search integration, it gives businesses a flexible platform that respects privacy while maximizing the usefulness of generative AI. As data security remains a critical issue, Vertex AI Grounding stands out as a solution that many important enterprise customers have wanted. Soon, large organizations may have their own datasets examined by their own models, an exciting future to look forward to. It will be interesting to see how other AI companies respond.

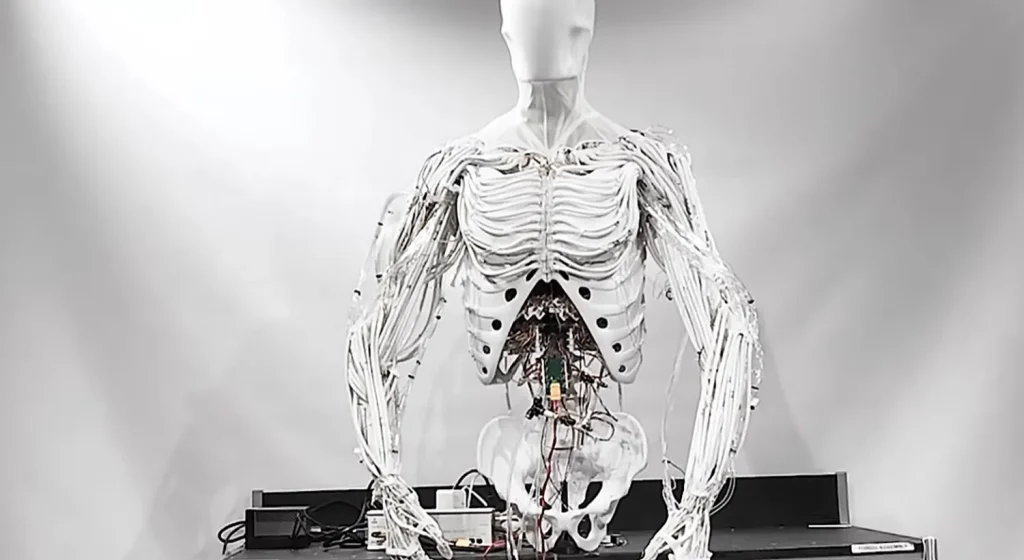

The Uncanny Arrival: Clone Robotics’ Bimanual Musculoskeletal Torso

Clone Robotics is making waves in robotics with the release of its bimanual musculoskeletal torso, an advanced robot that closely mimics human movement and dexterity. Unlike typical robots with simple mechanical joints, Clone’s torso uses a network of artificial muscles modeled on human physiology. This allows the robot to perform detailed, nuanced tasks, such as picking up delicate objects or handling tools, in a more human-like way. The design is intended as a significant leap forward in human-robot interaction, paving the way for more sophisticated, lifelike robotic assistants.

The key differentiator of Clone Robotics’ torso is its musculoskeletal structure, which enables realistic bimanual coordination: the two arms can work together seamlessly, much as a person’s do. Most industrial robots, by contrast, operate in a rigid, pre-programmed manner. Because it interacts with its environment much as a person would, Clone’s torso opens up possibilities for tasks that require a delicate touch, such as caregiving, medical assistance, or intricate craftsmanship. Additionally, the robot uses AI models to process sensory input, so it can adapt its actions in real time, which makes it far more flexible than traditional robotic arms.

Another fascinating aspect of Clone’s torso is that it can be easily reprogrammed for different tasks. Whereas many robots are built for one specific function, Clone Robotics designed its torso to be adaptable, so it could serve in a wide variety of settings, from homes, where it could help with household chores, to industrial scenarios that require complex manipulation of objects. Its musculoskeletal design is not just for show; it gives robotic movement a fluidity and adaptability that feel far less mechanical and much more natural. Some may find its lifelike qualities uncanny, but the potential applications in both everyday settings and specialized industries make it a remarkable addition to the future of robotics. With this design, Clone Robotics is setting a new benchmark for humanoid robotics, and it will be exciting to see how the technology evolves.

Apple’s Intelligent Future: AI Comes to iPhone, iPad, and Mac

Apple has announced Apple Intelligence, its new AI platform, now available on iPhone, iPad, and Mac. Apple Intelligence aims to deliver a seamless AI experience by building generative AI capabilities into users’ everyday workflows. Unlike many standalone AI tools, it is embedded throughout the Apple ecosystem, adding smarter features to apps like Messages, Mail, and Photos. This level of integration means AI can assist users intuitively, making it easier to draft emails, edit images, or automate repetitive tasks, all without leaving the apps they already use.

What sets Apple Intelligence apart is its strong emphasis on privacy. Apple’s AI models process data directly on the device whenever possible, keeping personal information under the user’s control. This on-device approach contrasts sharply with the cloud-based AI models of other tech giants, which often require internet connectivity and may send sensitive data to remote servers. Handling AI tasks locally gives users a fast, responsive experience and reinforces Apple’s long-standing privacy promise, an approach that will particularly appeal to people who are concerned about data security.

Another standout is the Neural Engine, Apple’s specialized machine-learning hardware, which plays a key role in delivering these AI capabilities efficiently across devices. The Neural Engine accelerates AI workloads, making features such as real-time language translation or intelligent photo editing faster and more energy-efficient. Additionally, by opening these capabilities to developers, Apple is encouraging third-party app creators to build them into their own apps, further extending the reach of Apple Intelligence. This move empowers developers and positions Apple as a leader in seamlessly integrating AI into both everyday use and professional workflows.

Recraft AI’s Innovative Step Forward: AI Model Thinking in Design Language

Recraft AI has introduced a model that “thinks” in design language, aiming to improve generative design by better understanding creative concepts. Unlike models driven solely by literal prompts, Recraft’s model considers aesthetics, balance, and context, making it useful for graphic designers, product designers, and 3D modelers. It can generate assets such as 3D environments, textures, and UI elements that align closely with human creative intent. Recraft also claims it is the only model in the world that can render long sentences of text in its outputs while keeping them consistent.

The model features Design CoPilot, an interactive tool for refining designs through conversational feedback. Users can iteratively adjust aspects such as lighting or proportions, with real-time responses from the AI. This collaboration is especially beneficial for rapid prototyping and allows for seamless refinement of creative ideas. Design Templates are also available, offering pre-configured styles like minimalist web interfaces or modern architecture, providing a starting point that can be customized to specific needs.

By building design language into its generative process, Recraft AI streamlines creative tasks while supporting innovation across many fields. Its mix of customization, real-time interaction, and design templates makes high-quality design more accessible, helping both professionals and enthusiasts achieve cohesive, polished results efficiently.

ChatGPT Search: OpenAI’s New Way to Blend AI and Web Data

OpenAI has introduced ChatGPT Search, a feature that combines the generative power of ChatGPT with up-to-date information from the web. Whereas the standard model relies on its pre-existing training data, ChatGPT Search pulls in real-time web data so its answers reflect the most current information available. This lets ChatGPT answer questions about recent events, trends, or niche topics that its training data does not cover.

The primary advantage of ChatGPT Search is that it stays current by drawing on the vast resources of the internet while keeping ChatGPT’s conversational strengths. Users can ask about ongoing news stories, emerging technologies, or specialized subjects and get answers that incorporate the latest information. For instance, someone planning a trip can ask about current flight restrictions or local events, and ChatGPT will search the web in real time to give a timely, relevant answer.

This integration also adds a layer of transparency, because users can see where the information comes from. By blending ChatGPT’s generative capabilities with the vast, dynamic knowledge of the web, OpenAI aims to make its responses deeper, more accurate, and more reliable. This hybrid approach offers a powerful tool for anyone who wants conversational assistance combined with live information, whether for professional research, travel planning, or keeping up with current affairs. It marks an important evolution in how AI can help us interact with and make sense of an ever-changing world.

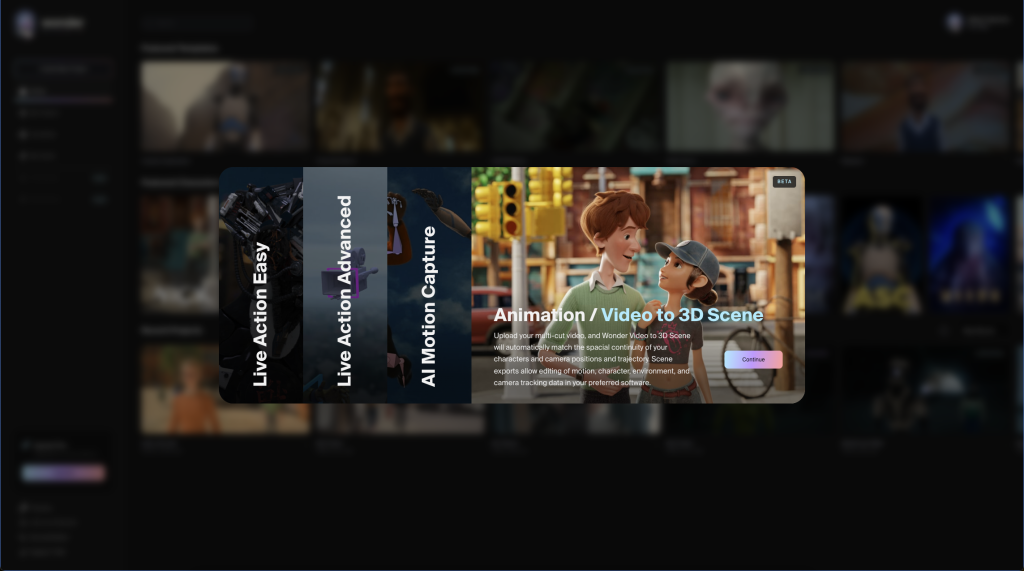

Autodesk’s Wonder: From Video to 3D Scene Technology

Autodesk has unveiled Wonder, a new technology that allows users to transform video footage into interactive 3D scenes. This innovation is aimed at animators, game developers, and creative professionals who want to quickly convert real-world motion into detailed 3D environments. Wonder leverages advanced AI algorithms, including deep learning-based object tracking and motion analysis, to analyze video frames and create corresponding 3D representations, drastically reducing the time and effort required for traditional animation techniques.

The key advantage of Wonder is that it streamlines production by automating the conversion of real-world footage into 3D scenes. Traditionally, creating 3D animation involves labor-intensive manual modeling and rigging, and Wonder’s AI capabilities significantly cut that workload. Animators can capture real-world motion, such as a dancer’s performance, or an outdoor landscape, and quickly convert it into an editable 3D scene. Game developers, for instance, can use Wonder to create realistic environments, like bustling cityscapes or natural settings, directly from video footage. Architects, too, can use it to visualize building layouts in real-world contexts, speeding up conceptual design.

Wonder also integrates with existing Autodesk tools like Maya and 3ds Max, so users can refine and edit the generated 3D scenes in familiar software, for example by applying procedural modeling and texture mapping in 3ds Max. This interoperability means creative professionals can fold Wonder into their existing workflows without learning entirely new tools. By bridging real-world footage and digital animation, Autodesk is pushing the boundaries of what is possible in animation and game development, making high-quality 3D content creation more accessible and efficient for a wide range of professional uses.

NVIDIA’s Spectrum-X: The Backbone of Grok

NVIDIA’s Spectrum-X is an Ethernet networking platform designed to meet the growing demands of generative AI and large-scale AI workloads. It combines NVIDIA’s networking hardware with its in-house software to create a scalable platform capable of handling the massive data flows that cutting-edge AI applications require. Unlike traditional Ethernet solutions, Spectrum-X is optimized specifically for AI workloads, and NVIDIA positions it as delivering markedly higher performance and reliability for them.

The key component of Spectrum-X is the NVIDIA Spectrum-4 Ethernet switch, which delivers high-throughput, low-latency networking with support for up to 51.2 terabits per second of switching capacity. This switch is complemented by NVIDIA’s BlueField-3 data processing unit (DPU), which offloads networking, storage, and security tasks from the CPU, freeing up resources for AI computation. This combination allows for highly efficient data transfer between nodes in a data center, reducing bottlenecks and improving the overall performance of AI models during training and inference.
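
The 51.2 Tb/s figure is easy to sanity-check with simple arithmetic. The short Python sketch below multiplies port count by per-port speed for the two port configurations NVIDIA has published for Spectrum-4 (64 ports of 800 GbE or 128 ports of 400 GbE). The dictionary and function names are illustrative only, and the sketch assumes the capacity is quoted as aggregate port bandwidth.

```python
# Sanity check: Spectrum-4's 51.2 Tb/s figure as ports x per-port speed.
# Port configurations follow NVIDIA's published Spectrum-4 options; the
# names below are illustrative, not part of any NVIDIA API.
PORT_CONFIGS = {
    "64 x 800GbE": (64, 800),    # (number of ports, Gb/s per port)
    "128 x 400GbE": (128, 400),
}


def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Total port bandwidth in terabits per second (1 Tb/s = 1000 Gb/s)."""
    return ports * gbps_per_port / 1000


for name, (ports, speed) in PORT_CONFIGS.items():
    print(f"{name}: {aggregate_tbps(ports, speed)} Tb/s")
```

Both configurations print 51.2 Tb/s, which shows how the same switch capacity can be split into fewer, faster ports or more, slower ones.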

Colossus, which NVIDIA describes as the world’s largest AI supercomputer, uses Spectrum-X Ethernet to connect its GPUs and is being used to train xAI’s Grok family of large language models, whose chatbots are offered as a feature for X Premium subscribers. xAI is in the process of doubling the size of Colossus to a combined total of 200,000 NVIDIA Hopper GPUs.

A notable aspect of Spectrum-X is NVIDIA’s collaboration with xAI, Elon Musk’s AI company, to build high-performance, transparent AI infrastructure. The collaboration aims to create an environment where data transparency and efficient AI training can coexist, making Spectrum-X an essential component of the infrastructure behind xAI’s Grok models.

There was a lot more in this week’s updates, but we have curated them tightly for easier reading, and the most important ones are covered here. As we look ahead, we must ask ourselves: How will the boundaries between AI, robotics, and human creativity blur further? What new tools will emerge to help us interact seamlessly with technology? And most importantly, which innovations will redefine our daily lives next? The future is full of questions and possibilities, and as we keep exploring the edge of tomorrow, one thing is certain: even more surprising developments are waiting just around the corner. Stay tuned for next week’s journey as we uncover the next wave of transformative technology.