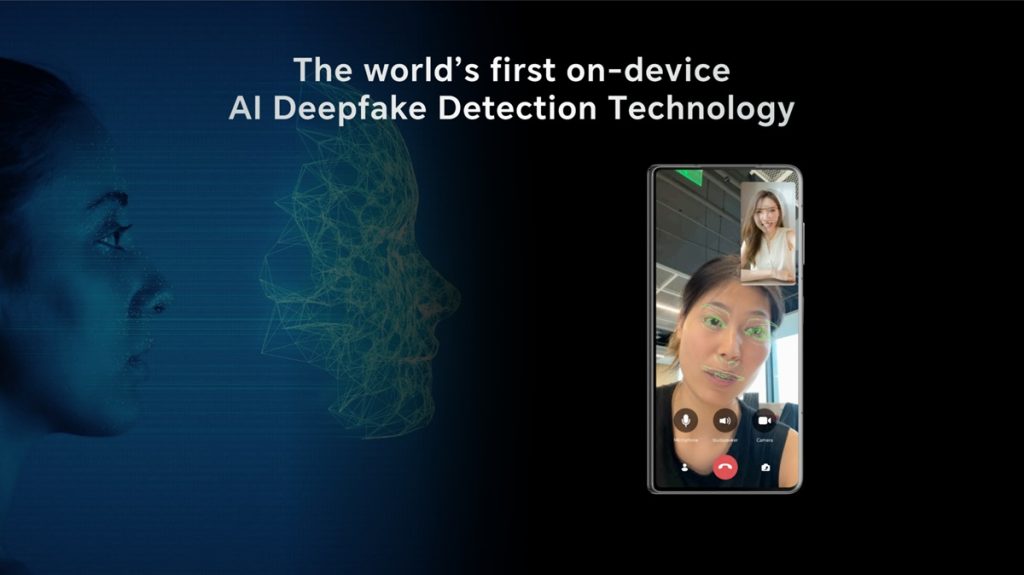

HONOR today announced that its AI Deepfake Detection technology will be available worldwide starting April 2025. This groundbreaking feature helps users identify deepfake content in real time, offering immediate alerts against manipulated images and videos for a safer digital experience.

How AI Deepfake Detection Works

First introduced at IFA 2024, HONOR’s proprietary AI Deepfake Detection technology analyzes media for subtle inconsistencies, such as:

- Pixel-level synthetic imperfections

- Border compositing artifacts

- Inter-frame continuity issues

- Facial anomalies like face-to-ear ratio, hairstyle, and feature inconsistencies

When the feature detects manipulated content, users receive an instant warning, allowing them to make informed decisions and avoid digital deception, HONOR confirmed.
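To make one of these signals concrete, here is a minimal sketch of what an inter-frame continuity check could look like. It is not HONOR's implementation, which has not been published. The function names, the grayscale-grid frame format, and the z-score threshold are all illustrative assumptions. The idea is simply to measure how much each frame differs from the previous one and flag any jump that is far larger than the rest.

```python
from statistics import mean, pstdev


def frame_difference(a, b):
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += abs(pixel_a - pixel_b)
            count += 1
    return total / count


def flag_discontinuities(frames, z_threshold=2.0):
    """Return indices of frames whose change from the previous frame is an outlier.

    Hypothetical helper: z_threshold is an illustrative value, not a tuned one.
    """
    diffs = [frame_difference(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    if len(diffs) < 2:
        return []  # not enough transitions to compare against each other
    mu = mean(diffs)
    sigma = pstdev(diffs)
    if sigma == 0:
        return []  # every transition is identical, so nothing stands out
    # diffs[k] describes the transition into frame k + 1
    return [k + 1 for k, d in enumerate(diffs) if (d - mu) / sigma > z_threshold]


# Ten tiny 2x2 frames that brighten slowly, with one abrupt jump at index 6.
levels = [10, 11, 12, 13, 14, 15, 80, 81, 82, 83]
frames = [[[v, v], [v, v]] for v in levels]
print(flag_discontinuities(frames))  # [6]
```

A real detector would combine many such signals with learned models. A simple statistic like this one would also flag ordinary scene cuts, so it only illustrates the kind of inconsistency being measured.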

The Growing Deepfake Threat

Artificial intelligence has transformed industries but has also amplified cyber threats, including deepfake attacks. A report from the Entrust Cybersecurity Institute revealed that in 2024, a deepfake attack occurred every five minutes.

Additionally, Deloitte’s 2024 Connected Consumer Study found that 59% of users struggle to differentiate between human-created and AI-generated content. With 84% of AI users demanding content labeling, HONOR recognizes the need for advanced detection tools and industry collaboration to enhance digital security.

Organizations like the Coalition for Content Provenance and Authenticity (C2PA) are already working on verification standards for AI-generated media.

AI Deepfake Risks in Business and Cybersecurity

Deepfake attacks are rising, with 49% of companies facing both audio and video deepfakes between November 2023 and November 2024, marking a 244% increase in digital forgeries. Despite this, 61% of executives have yet to implement defense protocols. Common risks include:

- Fraud and Identity Theft – Criminals use deepfakes to impersonate executives, leading to financial scams and data breaches.

- Corporate Espionage – Fake media can manipulate markets, spread misinformation, and damage brand reputation.

- Misinformation and Public Manipulation – AI-generated content can mislead customers and stakeholders.

Countermeasures Against Deepfakes

The tech industry is ramping up efforts to combat deepfakes through:

- AI-Powered Detection Tools – Companies like HONOR leverage AI to analyze eye contact, lighting, image clarity, and playback inconsistencies.

- Industry Collaborations – Organizations like C2PA, whose founding members include Adobe, Microsoft, and Intel, are working on content authentication standards.

- On-Device Deepfake Protection – Qualcomm’s Snapdragon X Elite enables real-time AI-powered detection on mobile devices without compromising privacy.

The Future of Deepfake Security

The global deepfake detection market is expected to grow 42% annually, reaching $15.7 billion by 2026. As AI-generated content becomes more sophisticated, businesses must invest in AI-driven security solutions, regulatory frameworks, and employee awareness programs to mitigate deepfake-related threats.
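For a sense of scale, the forecast can be worked backwards. The sketch below assumes the 42% rate compounds at a constant pace each year up to 2026. That is an assumption, since the forecast quoted here does not state its base year or growth pattern. The function name is hypothetical.

```python
def implied_market_size(target_value, target_year, annual_growth, year):
    """Market size in `year` implied by constant annual growth ending at the target."""
    years_before_target = target_year - year
    return target_value / (1 + annual_growth) ** years_before_target


# Values in billions of US dollars, derived only from the two quoted figures.
for year in range(2023, 2027):
    size = implied_market_size(15.7, 2026, 0.42, year)
    print(f"{year}: ${size:.2f}B")
```

Under that assumption, the market would have been roughly $11 billion one year before the target and under $8 billion two years before it. These are implied values, not reported ones.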

UNIDO Highlights Deepfake Detection for Safety

Marco Kamiya, a representative from the United Nations Industrial Development Organization (UNIDO), noted that as we rely more on technology, our personal details, such as locations, finances, relationships, and preferences, are stored online.

If this information leaks, it could lead to spam, scams, or even threats to our safety and property. He stressed that strong privacy protection is essential for peace of mind and confidence in the digital age.

He pointed out that mobile phones, central to daily life, hold a huge amount of private data. From calls and texts to payments and photos, they track everything—contacts, videos, even passwords. This makes them a key focus for security.

With that in mind, Kamiya said:

“AI Deepfake Detection on mobile devices is a vital tool against deepfakes. It spots flaws—like odd eye contact, lighting, clarity, or playback—that people might miss, reducing risks. This helps individuals, small businesses, and industries use digital tools safely, free from worries about privacy breaches or fraud.”

HONOR emphasized that adopting AI detection technology is essential in safeguarding operations, consumers, and reputations in an era where “seeing is no longer believing.”