Google on Tuesday introduced Gemini 2.5, its most intelligent AI model yet. The first release, an experimental version called 2.5 Pro, debuted at number one on the LMArena leaderboard “by a significant margin,” said Koray Kavukcuoglu, CTO of Google DeepMind.

He noted that Gemini 2.5 models are “thinking models” that reason through their thoughts before responding, which improves performance and accuracy.

Key Features of Gemini 2.5 Pro

Strong Reasoning Skills

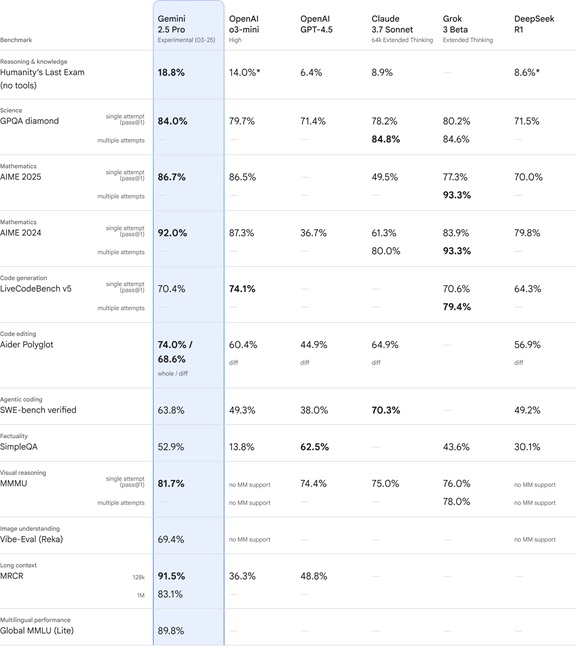

Gemini 2.5 Pro, described as the “most advanced model for complex tasks,” leads math and science benchmarks such as GPQA and AIME 2025 without extra test-time techniques such as majority voting. It also scores a state-of-the-art 18.8% among models without tool use on Humanity’s Last Exam, a dataset crafted by experts to push the limits of knowledge and reasoning.
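For readers unfamiliar with the term, majority voting means sampling several answers to the same question and keeping the most common one. The Python sketch below is only an illustration of that general idea, not Google's evaluation code; the function name `majority_vote` and the sample answers are hypothetical.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most common answer among several sampled responses.

    Ties go to the answer that appeared first in the list.
    """
    if not answers:
        raise ValueError("need at least one answer")
    # most_common(1) returns a list holding one (answer, count) pair.
    return Counter(answers).most_common(1)[0][0]


# Hypothetical answers sampled from a model for a single math question.
samples = ["42", "41", "42", "42", "17"]
print(majority_vote(samples))  # prints 42
```

Each extra sample is another model call, so this technique raises cost, which is why results reported without it are notable.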

Top-Notch Coding Abilities

Kavukcuoglu said the team has achieved “a big leap over 2.0” in coding performance, with more improvements planned. Gemini 2.5 Pro scores 63.8% on SWE-Bench Verified, an industry-standard test, using a custom agent setup. It creates “visually compelling web apps,” handles code transformation, and can generate executable video game code from a single prompt.

Building on Gemini Strengths

Gemini 2.5 retains native multimodality and launches with a 1 million token context window, set to reach 2 million soon. It processes text, audio, images, video, and entire code repositories effectively. Kavukcuoglu emphasized that feedback will help enhance its abilities rapidly, with the goal of “making our AI more helpful.”

Availability and Access

Developers can test 2.5 Pro in Google AI Studio now, and Gemini Advanced users can select it from the model dropdown on desktop and mobile. It will arrive on Vertex AI in the coming weeks, with pricing for “higher rate limits for scaled production use” to follow, he added.

“Now, with Gemini 2.5, we’ve achieved a new level of performance by combining a significantly enhanced base model with improved post-training,” Kavukcuoglu said. “Going forward, we’re building these thinking capabilities directly into all of our models, so they can handle more complex problems and support even more capable, context-aware agents.”