OpenAI has announced o3 and o4-mini, its most advanced reasoning models yet, bringing improvements in tool use, multimodal reasoning, performance, and safety. Both models are now available in ChatGPT and through API access.

“These are the smartest models we’ve released to date,” OpenAI said, describing them as a leap in capability for “everyone from curious users to advanced researchers.”

Key Improvements and Features

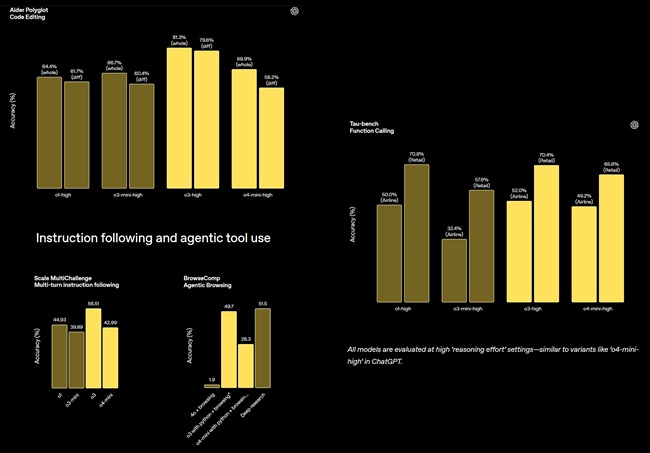

OpenAI noted that o3 and o4-mini are designed to reason before responding. They combine tools such as web search, code generation, file analysis, and image creation in a unified system that produces detailed, thoughtful answers, typically in under a minute.

The models mark progress toward what OpenAI called a more “agentic ChatGPT,” capable of independently using tools to solve complex, multi-step tasks.

OpenAI o3: Most Advanced Reasoning Model

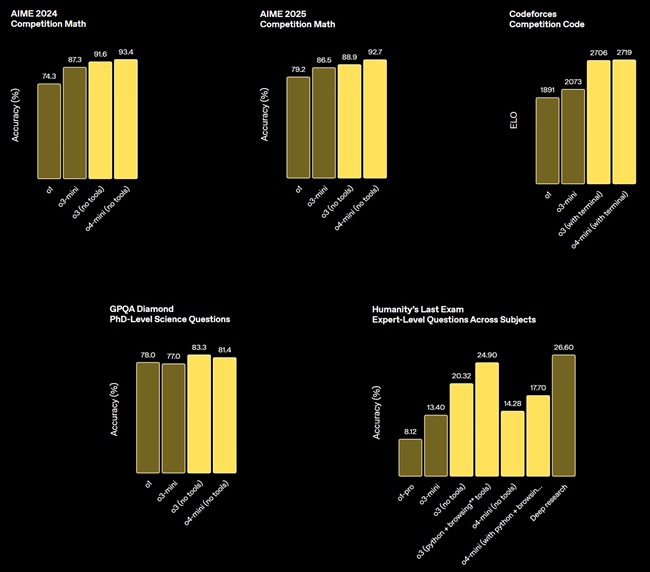

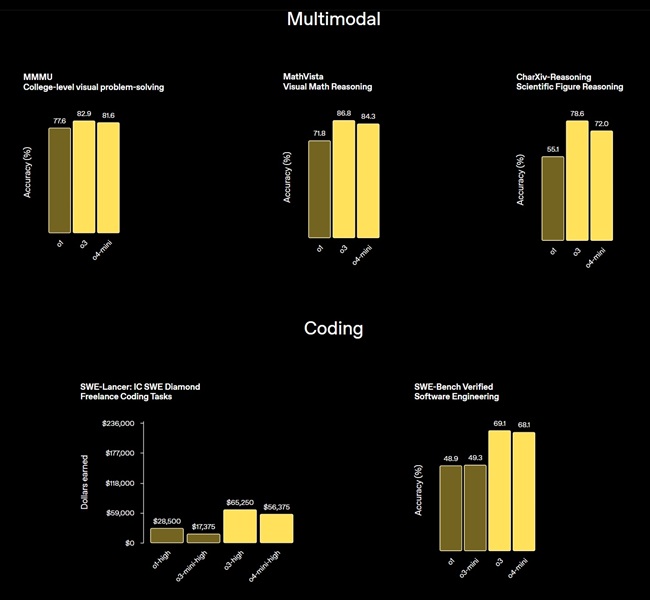

o3 is OpenAI’s most capable reasoning model, pushing the frontier in areas like coding, science, math, and visual analysis. It sets new state-of-the-art (SOTA) scores across benchmarks such as:

- Codeforces

- SWE-bench (without custom scaffolding)

- MMMU

External expert reviews found that o3 makes 20% fewer major errors than o1 on difficult, real-world tasks, particularly in domains like programming, business, and creative ideation. It performed especially well in visual reasoning, and early testers noted its strength in generating and evaluating hypotheses in biology, math, and engineering.

OpenAI o4-mini: Cost-Efficient, High-Throughput Reasoning

The o4-mini model is designed for fast, cost-efficient reasoning, with strong performance in math, coding, and visual tasks. It outperforms o3-mini and is the best-performing benchmarked model on AIME 2024 and 2025.

According to expert evaluation, o4-mini also outperforms o3-mini on non-STEM tasks and in domains like data science. Its efficiency allows higher usage limits than o3, making it suitable for high-throughput scenarios.

OpenAI said both models show improved instruction following, better use of web sources, and generate more natural, personalized conversations by referencing memory and past interactions.

Advanced Tool Use and Reasoning

For the first time, OpenAI's reasoning models can use and combine the tools inside ChatGPT, including:

- Web search

- Code interpreter (Python)

- File analysis

- Image generation

They can reason about when and how to use tools effectively. For example, in response to a question like “How will summer energy usage in California compare to last year?”, the model can:

- Search for utility data

- Write Python code

- Generate a forecast chart

- Explain the prediction

This flexible, multi-step approach enables reasoning with up-to-date data, synthesis across formats, and rich, visual answers.
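The four steps above can be pictured as a small pipeline. The sketch below is only an illustration of that flow, not how the models work internally: `search_utility_data` is a hypothetical stand-in for a web search tool, the usage figures are made-up placeholder numbers rather than real California data, and the "chart" is plain text to keep the example dependency-free.

```python
from statistics import mean


def search_utility_data():
    """Hypothetical stand-in for a web search tool.

    Returns made-up monthly usage figures in arbitrary units,
    NOT real California utility data.
    """
    return {
        "last_year": {"Jun": 100.0, "Jul": 120.0, "Aug": 110.0},
        "this_year_to_date": {"Jun": 105.0},
    }


def forecast_summer(data):
    """Scale last year's months by the year-over-year ratio observed so far."""
    last = data["last_year"]
    seen = data["this_year_to_date"]
    ratio = mean(seen[month] / last[month] for month in seen)
    return {month: round(value * ratio, 1) for month, value in last.items()}


def text_chart(last, forecast):
    """Render a crude text bar chart, one '#' per 10 units."""
    lines = []
    for month in last:
        lines.append(f"{month} last year {'#' * int(last[month] // 10)}")
        lines.append(f"{month} forecast  {'#' * int(forecast[month] // 10)}")
    return "\n".join(lines)


def explain(last, forecast):
    """Summarize the forecast as a percentage change over the whole summer."""
    change = (sum(forecast.values()) / sum(last.values()) - 1) * 100
    return f"Projected summer usage changes by {change:+.1f}% versus last year."


data = search_utility_data()                      # step 1: search
forecast = forecast_summer(data)                  # step 2: code
print(text_chart(data["last_year"], forecast))    # step 3: chart
print(explain(data["last_year"], forecast))       # step 4: explanation
```

The ratio-based forecast is a deliberately naive choice for illustration; a model working on the real question would pick its own method based on the data it finds.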

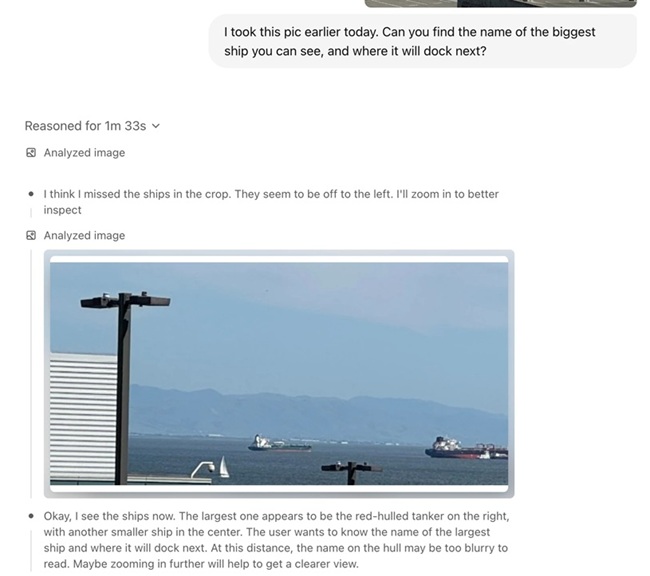

Thinking with Images

OpenAI highlighted a major step forward: the models now “think with images”—they can interpret blurry photos, diagrams, and hand-drawn sketches as part of their reasoning chain. They can also manipulate images dynamically (rotate, zoom, etc.) to support their thought process.

This improves performance across multimodal benchmarks and lets the models solve problems that were previously out of reach.
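To make the rotate and zoom operations concrete, here is a minimal sketch of those transforms on a tiny pixel grid. It assumes an image is a row-major list of lists of integers; the function names are illustrative only, and nothing here reflects how o3 or o4-mini actually manipulate images.

```python
def rotate_clockwise(image):
    """Rotate a row-major pixel grid by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def crop(image, top, left, height, width):
    """Cut out a rectangular region of the grid."""
    return [row[left:left + width] for row in image[top:top + height]]


def zoom(image, factor):
    """Nearest-neighbour upscaling by an integer factor."""
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


image = [
    [1, 2, 3],
    [4, 5, 6],
]

rotated = rotate_clockwise(image)          # [[4, 1], [5, 2], [6, 3]]
detail = zoom(crop(image, 0, 1, 2, 2), 2)  # enlarge the right-hand 2x2 region
print(rotated)
print(detail)
```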

Efficient and Cost-Effective

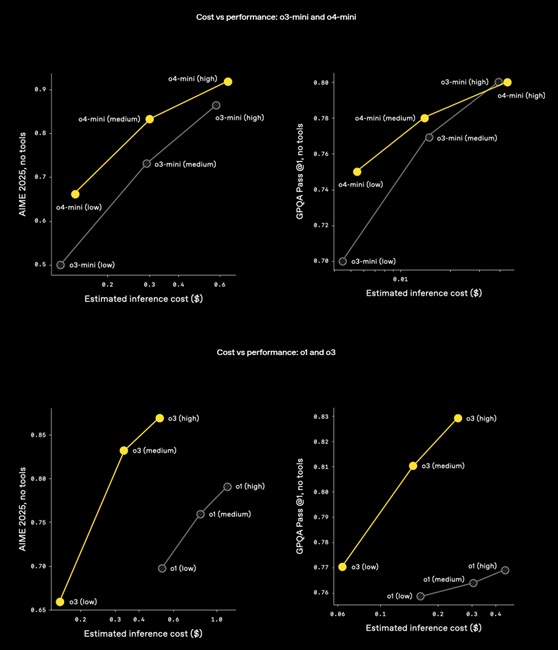

The company says both o3 and o4-mini outperform their predecessors while offering better cost-performance:

- o3 improves cost-performance over o1

- o4-mini improves over o3-mini

OpenAI expects that, in most real-world usage, o3 and o4-mini will be both smarter and cheaper than o1 and o3-mini, respectively.

Reinforcement Learning and Scaling

OpenAI shared that training o3 followed the same “more compute = better performance” trend seen in GPT-series pretraining. By scaling reinforcement learning (RL), they achieved a new level of inference-time reasoning. Letting o3 “think longer” boosts its performance further, OpenAI added.

The models were also trained to reason not just about how to use tools, but when to use them, which enhances their performance in open-ended and visual workflows.

Safety Improvements

OpenAI rebuilt its safety training data, adding refusal prompts in sensitive areas like:

- Biological threats (biorisk)

- Malware

- Jailbreaks

o3 and o4-mini performed strongly on OpenAI’s internal refusal benchmarks. A reasoning LLM monitor, trained using human-written safety specs, flagged ~99% of biorisk conversations during red-teaming, according to the company.

Both models were tested under OpenAI’s Preparedness Framework across:

- Biological/chemical threats

- Cybersecurity

- AI self-improvement

Results placed both below the “High” risk threshold in all categories.

Codex CLI: Reasoning in the Terminal

OpenAI also announced Codex CLI, a new experiment allowing users to run reasoning models like o3 and o4-mini from the terminal. It supports multimodal input (e.g., screenshots or sketches) and direct local code access.

Codex CLI is open source at github.com/openai/codex. OpenAI is also launching a $1 million grant program for projects that use it, with grants awarded in increments of $25,000 in API credits.

Availability and Access

From April 16, 2025, o3 and o4-mini (including o4-mini-high) are available to:

- ChatGPT Plus, Pro, and Team users (replacing o1, o3-mini, and o3-mini-high)

- ChatGPT Enterprise and Edu users (within a week)

Free users can try o4-mini by selecting “Think” before submitting prompts. Rate limits across all plans remain unchanged from the previous set of models.

Developers can access both models via:

- Chat Completions API

- Responses API (supports reasoning summaries, with built-in tools like web search and file search to follow soon)
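As a rough illustration of the two access paths, the sketch below builds, but does not send, one request for each endpoint using only the Python standard library. The helper names are hypothetical, the prompt is a placeholder, and the `reasoning.summary` field is an assumption about how reasoning summaries are requested; check OpenAI's API reference for the exact parameters before relying on it.

```python
import json
import os
import urllib.request

API_BASE = "https://api.openai.com/v1"


def build_request(path, body, api_key):
    """Build (but do not send) an authenticated JSON POST request."""
    return urllib.request.Request(
        f"{API_BASE}/{path}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def chat_completions_request(prompt, api_key, model="o4-mini"):
    """Request body in the Chat Completions shape: a list of messages."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return build_request("chat/completions", body, api_key)


def responses_request(prompt, api_key, model="o3"):
    """Request body in the Responses shape: a single input field.

    The "reasoning" field is an assumption made for illustration.
    """
    body = {"model": model, "input": prompt, "reasoning": {"summary": "auto"}}
    return build_request("responses", body, api_key)


# Placeholder key so the sketch runs without credentials.
api_key = os.environ.get("OPENAI_API_KEY", "sk-placeholder")
req = chat_completions_request("Summarize the o3 announcement.", api_key)
print(req.get_method(), req.full_url)
# Sending is left out on purpose; urllib.request.urlopen(req) would make the call.
```

In practice most developers would use OpenAI's official SDKs rather than raw HTTP; the raw form is shown here only because it makes the difference between the two request shapes visible.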

OpenAI plans to release o3-pro with full tool support in the coming weeks. For now, Pro users can continue using o1-pro.

Looking Ahead

OpenAI said future models aim to combine o-series reasoning with GPT-series conversational flow and proactive tool use. “By unifying these strengths,” the company added, “our future models will support seamless, natural conversations alongside advanced problem-solving.”