Google today introduced Gemma 3, a series of advanced, lightweight open models developed using the same research behind its Gemini 2.0 models. Clement Farabet, VP of Research at Google DeepMind, described them as “our most advanced, portable, and responsibly developed open models yet.”

Model Sizes and Performance

Gemma 3 is designed to run efficiently on a range of devices, from phones to workstations, so developers can build AI applications that run where they are needed.

Gemma 3 comes in four sizes—1B, 4B, 12B, and 27B parameters—giving developers flexibility to choose models that align with their hardware and speed requirements.
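To make the size choice concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes weight memory is roughly the parameter count multiplied by bytes per parameter, and it treats the nominal sizes (1B, 4B, 12B, 27B) as exact counts. The precisions listed and the helper names are illustrative assumptions, not figures from Google. Real usage will be higher once activations and the context cache are included.

```python
from typing import Optional

# Nominal sizes from the article, in billions of parameters.
PARAMS_BILLIONS = {"1B": 1, "4B": 4, "12B": 12, "27B": 27}

# Illustrative precisions (assumption): bits stored per parameter.
BITS_PER_PARAM = {"bf16": 16, "int8": 8, "int4": 4}


def weight_gb(params_billions: float, bits: int) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes), weights only."""
    return params_billions * bits / 8


def largest_fit(budget_gb: float, precision: str) -> Optional[str]:
    """Return the largest size whose weights alone fit in the budget, if any."""
    bits = BITS_PER_PARAM[precision]
    fitting = [
        name
        for name, billions in PARAMS_BILLIONS.items()  # insertion order: smallest first
        if weight_gb(billions, bits) <= budget_gb
    ]
    return fitting[-1] if fitting else None


if __name__ == "__main__":
    for precision in BITS_PER_PARAM:
        print(precision, largest_fit(24, precision))
```

Under these assumptions, a 24 GB budget holds the 12B weights at 16 bits per parameter, while 4-bit quantization brings the 27B weights within the same budget.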

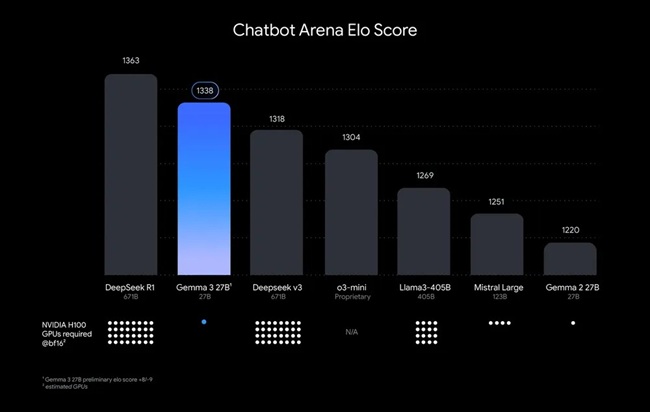

Google said that Gemma 3 outperforms larger counterparts such as Llama3-405B, DeepSeek-V3, and o3-mini in preliminary human preference evaluations on LMArena’s leaderboard. Google positions the models as delivering this performance while fitting on a single GPU or TPU host.

Key Features of Gemma 3

- Global Language Support: Out-of-the-box support for over 35 languages and pretrained support for over 140.

- Large Context Window: A 128k-token context window lets applications process large inputs, such as long documents, in a single prompt.

- Advanced Text and Visual Reasoning: Analyzes text, images, and short videos.

- Function Calling: Supports function calling and structured output, so applications can automate tasks and build agent-style workflows.

- Optimized Speed: Quantized versions reduce computational costs while maintaining high accuracy.
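As an illustration of the function-calling feature, the sketch below shows the application side of the loop: the model returns a structured request, and the application parses it and runs the matching function. The JSON shape (`name` plus `arguments`), the `get_weather` tool, and the sample model output are all hypothetical. The article does not specify the format Gemma 3 emits, so treat this as one possible convention rather than the model’s actual protocol.

```python
import json
from typing import Any, Callable, Dict


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would query a weather service."""
    return f"Sunny in {city}"


# Registry of functions the application is willing to run for the model.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(model_output: str) -> Any:
    """Parse a JSON tool call (assumed format) and invoke the named tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Never execute a function the application did not register.
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Stand-in for text a model might return; not real Gemma 3 output.
sample_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'

if __name__ == "__main__":
    print(dispatch(sample_output))
```

Keeping an explicit registry means the model can only trigger functions the developer has chosen to expose.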

Safety and Responsibility

Tris Warkentin, Director at Google DeepMind, highlighted the importance of rigorous safety measures, stating, “We believe open models require careful risk assessment, and our approach balances innovation with safety—tailoring testing intensity to model capabilities.”

Gemma 3 underwent safety evaluations, including testing of its potential for misuse in STEM-related scenarios, and Google reported that the results indicated a low risk level. Warkentin noted that as AI evolves, Google is committed to refining its risk-proportionate safety practices.

ShieldGemma 2: A Safety Companion

Google also introduced ShieldGemma 2, a 4B-parameter image safety checker built on the Gemma 3 foundation. ShieldGemma 2 outputs safety labels across three categories: dangerous content, sexually explicit content, and violence. Developers can further customize it to fit their own safety needs and users.
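One way an application might customize such a checker is to apply its own threshold to each category. The sketch below assumes the checker yields a score between 0 and 1 per category. That output format, the category keys, the scores, and the default threshold are all hypothetical, since the article does not describe ShieldGemma 2’s actual interface.

```python
from typing import Dict, List, Optional

# Category keys mirror the three labels named in the article (spelling is assumed).
CATEGORIES = ("dangerous", "sexually_explicit", "violent")

DEFAULT_THRESHOLD = 0.5  # assumed default; tune per application


def flagged_categories(
    scores: Dict[str, float],
    thresholds: Optional[Dict[str, float]] = None,
) -> List[str]:
    """Return the categories whose score meets or exceeds its threshold."""
    thresholds = thresholds or {}
    return [
        category
        for category in CATEGORIES
        if scores.get(category, 0.0) >= thresholds.get(category, DEFAULT_THRESHOLD)
    ]


# Hypothetical scores for a single image; not real ShieldGemma 2 output.
example_scores = {"dangerous": 0.1, "sexually_explicit": 0.02, "violent": 0.6}

if __name__ == "__main__":
    print(flagged_categories(example_scores))                    # default policy
    print(flagged_categories(example_scores, {"violent": 0.8}))  # more permissive on violence
```

A stricter product, such as one aimed at children, could lower individual thresholds instead of changing the checker itself.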

Seamless Integration for Developers

Gemma 3 integrates with popular platforms and tools, so developers can:

- Use Compatible Tools: Supports Hugging Face Transformers, JAX, Keras, PyTorch, Google AI Edge, and Gemma.cpp.

- Start Quickly: Access models instantly via Google AI Studio or download from platforms like Hugging Face and Kaggle.

- Customize Easily: Fine-tune the model with Google Colab, Vertex AI, or other setups, including gaming GPUs.

- Deploy Efficiently: Choose deployment options like Vertex AI, Cloud Run, Google GenAI API, or local systems. NVIDIA has optimized Gemma 3 for GPUs of all sizes, from Jetson Nano to Blackwell chips, with integration available through the NVIDIA API Catalog. The models are also optimized for Google Cloud TPUs and AMD GPUs via ROCm.

The Gemmaverse: Fostering Innovation

Google’s “Gemmaverse” is a vibrant ecosystem of community models and tools based on Gemma. Examples include AI Singapore’s SEA-LION v3, which bridges language gaps in Southeast Asia; INSAIT’s BgGPT, Bulgaria’s first large language model; and Nexa AI’s OmniAudio, showcasing Gemma’s potential for on-device audio processing.

To further support innovation, Google launched the Gemma 3 Academic Program, through which academic researchers can apply for Google Cloud credits worth $10,000 per award. Applications opened today, March 12, 2025, and will remain open for four weeks.

Warkentin said, “The Gemma family of open models is foundational to our commitment to making useful AI technology accessible,” adding that over 100 million downloads and 60,000 variants created within a year underscore Gemma’s growing impact.

How to Get Started

Gemma 3 is available now, offering various ways for developers to explore and integrate the models:

- Try it instantly in Google AI Studio with no setup.

- Download from Hugging Face, Ollama, or Kaggle for customization.

- Fine-tune and adapt with Hugging Face Transformers or other preferred tools.

- Deploy via Cloud Run, NVIDIA NIMs, or local environments.

This launch demonstrates Google’s dedication to democratizing access to AI, blending innovation, safety, and adaptability to empower developers worldwide.